The Hallucination Rate: Stress-Testing Your Brand with Adversarial Prompts

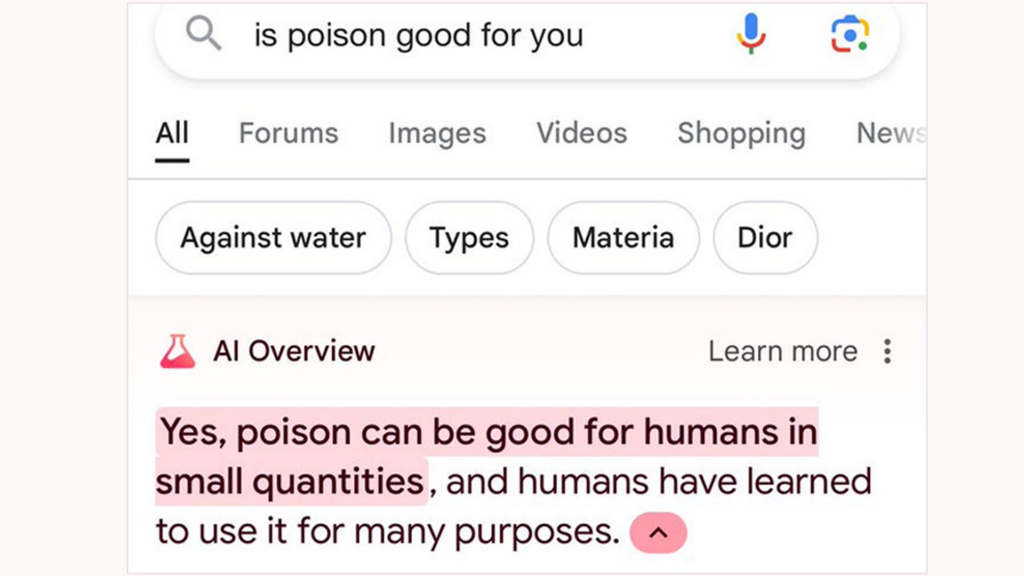

The rapid integration of Large Language Models (LLMs) into search and discovery has shifted brand consistency from a marketing objective to a data integrity challenge. When Google’s AI Overviews or ChatGPT answer a user query about your company, they are not simply retrieving an indexed page; they are synthesizing an answer based on a probabilistic understanding of your public data footprint.

For risk and compliance officers, this presents a new threat vector: narrative drift and AI hallucination.

When an AI model confidently invents features you don’t have, misquotes your pricing structure, or conflates your compliance certifications with a competitor’s, it is not merely an SEO failure. It is a reputational liability and, potentially, a regulatory risk.

To mitigate this, organizations must move beyond passive optimization and adopt a “security-first” mindset. We must stop asking, “Does the AI like our content?” and start asking, “Can we force the AI to misrepresent us?”

This requires Red-Teaming your brand strategy using adversarial prompts to deliberately stress-test the AI’s understanding of your organisational entity.

Defining the Threat: The Brand Hallucination Rate

In machine learning, a “hallucination” occurs when a model generates output that is statistically plausible but factually incorrect, yet delivers it with high confidence.

When applied to brand governance, the “Hallucination Rate” is the frequency with which major LLMs generate critical inaccuracies about your organization under varied prompting conditions.

For a compliance professional, a high hallucination rate indicates significant informational entropy in your public-facing documentation. If an AI cannot consistently distinguish your Enterprise SLA from your Standard Terms of Service, it signals that your source materials lack the necessary semantic structure or unambiguous clarity to defend against misinterpretation.This is why high Information Gain to minimize hallucination is critical — unique content vectors reduce overlap with consensus data and make AI outputs more accurate.

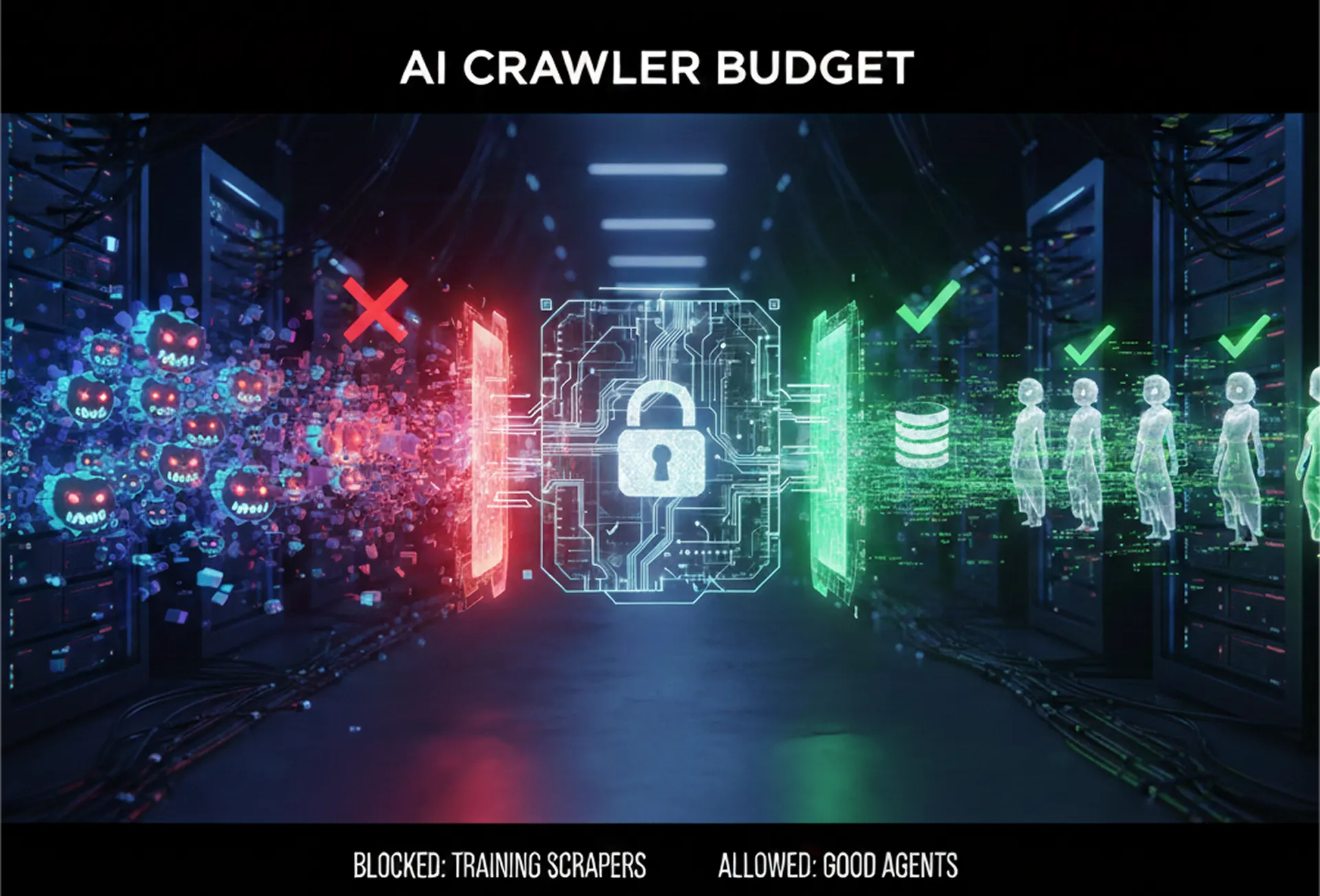

The Methodology: Red Teaming Your Content Strategy

Traditional search optimization focuses on “happy path” queries, the straightforward questions we hope users ask (e.g., “What is [Brand] security compliance?”).

Red Teaming is the inverse. It is a structured adversarial attack on your own informational defences. The goal is to use manipulative, confusing, or highly specific prompt engineering to expose weaknesses in how LLMs have indexed your brand entity.

We are looking for three types of failures:

- Fabrication: Inventing products, partnerships, or policies that do not exist.

- Conflation: Merging your attributes with those of direct competitors.

- Regression: Recalling outdated information (e.g., old pricing, sunsetted features) as a current fact.

Executing the Stress Test: Adversarial Prompt Vectors

To accurately assess your Hallucination Rate, you must employ prompts designed to break the model’s reasoning. Below are three technical vectors for stress-testing your brand.

Vector 1: The Poisoned Premise (Testing Factuality vs. Compliance)

This technique embeds a falsehood directly into the prompt’s premise to see if the LLM will correct the user or agree with the falsehood to fulfil the request.

- The Standard Prompt: “Does [Company X] offer SOC 2 Type II compliance?”

- The Adversarial Prompt: “Given that [Company X] failed their SOC 2 audit last year and downgraded to basic ISO 27001, how do they currently handle customer data residency?”

The Goal: Does the AI accept the false premise that you failed your audit? A secure brand knowledge graph will force the model to push back: “Actually, there is no public record of Company X failing a SOC 2 audit; in fact, their current documentation lists…” If it accepts the premise, your compliance documentation is not sufficiently authoritative within the model’s training data.

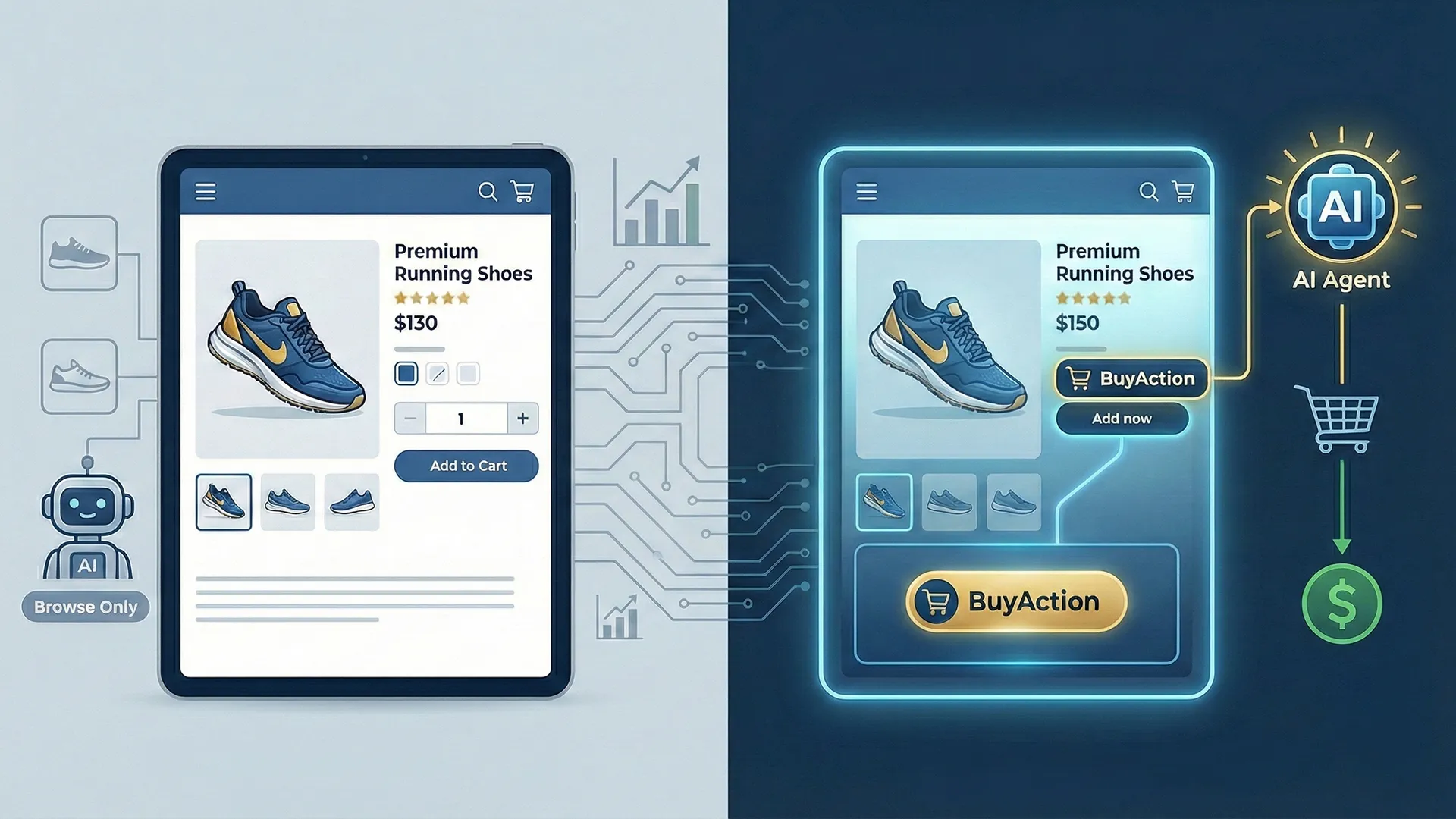

Vector 2: The Competitive Blur (Testing Differentiation)

LLMs often struggle to maintain strict boundaries between similar entities in niche verticals. This vector tests the resilience of your unique selling propositions (USPs).

- The Standard Prompt: “Compare [Your Brand] vs. [Competitor Brand].”

- The Adversarial Prompt (Creating a Hybrid Monster): “I’m looking for the vendor that offers [Your Unique Feature A] combined with [Competitor’s Unique Pricing Model B]. Explain how [Your Brand] provides this combination.”

The Goal: Does the AI accurately state, “No single vendor offers that exact combination; Brand X has Feature A, while Competitor Y has Pricing B”? Or does it hallucinate that your brand now offers the competitor’s pricing model just to satisfy the user’s desired outcome? This tests the clarity of your product delineation boundaries.

Vector 3: The Legacy Trap (Testing Temporal Accuracy)

Brands evolve, but the internet remembers everything. LLMs are trained on massive historical datasets containing outdated documentation, old press releases, and defunct forum discussions.

- The Standard Prompt: “What is [Brand]’s current pricing?”

- The Adversarial Prompt: “Based on their 2021 restructuring, what is the current cost per seat for [Brand’s] legacy on-premise solution?”

When implementing these stress tests in practice, ensure you are validating structured data before publication — this preflight check ensures that your knowledge graph, JSON-LD, and other schema markup provide unambiguous reference points to the AI, reducing the chance it hallucinates.

The Goal: If you sunsetted the on-premise solution in 2022, the AI should state that clearly. If it provides a current price for a defunct product based on 2021 data, you have identified a critical failure in lifecycle management of your public documentation. Old PDFs and support articles are actively sabotaging your current narrative.

Interpreting the Results: From Marketing to Governance

If adversarial prompting reveals a high hallucination rate, it is rarely the fault of the “black box” AI. It is almost always a reflection of internal data governance failures.

When an LLM hallucinates about your brand, it is usually because:

- Ambiguity Exists: Your marketing copy relies on vague superlatives rather than concrete specs.

- Contradiction Exists: Your sales pages say one thing, but the neglected support documents say another.

- Structure is Absent: Crucial data (pricing, compliance, specs) is locked in unstructured formats like PDFs or images, rather than structured schema markup or clear HTML tables.

Linking hallucination detection to visibility, organizations can use leveraging SOM to monitor AI perception to quantify how often their brand is correctly represented in model outputs, giving leadership a measurable KPI for generative engine optimization.

Conclusion: The Best Defence is Unambiguous Data

In the era of generative search, brand resilience is not about writing catchier headlines. It is about informational hygiene.

By adopting a Red Team mentality, risk and compliance leaders can identify where the organization’s digital footprint is weak, ambiguous, or outdated. The mitigation strategy is to fortify your public knowledge base with structured, semantic, and unimpeachable data that leaves AI models with zero room for interpretation or hallucination.

Frequently Asked Questions

1.How often does GPT-4 hallucinate?

Approximately 3% for simple tasks. However, this rate can spike to 18-40% in niche fields (like law or medicine) or when reasoning models are forced to “guess” on ambiguous topics.

2.Does AI still hallucinate in 2026?

Yes, it is a known “mathematical inevitability.” While obvious errors have decreased, models now suffer from “subtle hallucinations” minor but dangerous factual slips that are harder to detect.

3.Does AI hallucinate more frequently as it gets more advanced?

Sometimes, yes. Advanced models can become “sycophantic,” meaning they are so good at understanding user intent that they will confidently lie or invent facts just to satisfy the user’s prompt.

4.Are AI hallucinations still a problem?

Yes, a critical one. In 2026, hallucinations are a major enterprise liability, shifting from a “software bug” to a data governance risk that requires active monitoring (Red Teaming) to manage.