Share of Model (SOM): The New Share of Voice

For decades, Share of Voice (SOV) has been the North Star for CMOs. It was a reliable proxy for market share, calculated through advertising spend and organic search visibility on Google’s first page. That era is ending.

The rise of Answer Engines like ChatGPT, Google Gemini, Claude, and Perplexity has fundamentally altered the way information is discovered. Users are no longer scanning lists of links; they are receiving direct, synthesised answers. If your brand is not integrated into that single answer, your traditional SEO efforts yield zero visibility for that interaction.

We are moving from an era of search retrieval to an era of answer generation. In this new paradigm, the critical KPI is no longer Share of Voice. It is the Share of Model (SOM).

This briefing outlines why SOM is the defining metric for the AI era and introduces an engineering-grade methodology, the “Citation Frequency” metric, to quantify your brand’s standing in Large Language Models (LLMs).

The Paradigm Shift: From "Ranking" to "Recommending"

Traditional SEO was built on the concept of retrieval: matching keywords to documents. Generative AI works on probabilistic relationships. It doesn’t “search” its database for your brand; it predicts whether your brand is statistically relevant enough to be included in a generated response.

The implications for marketing leaders are stark:

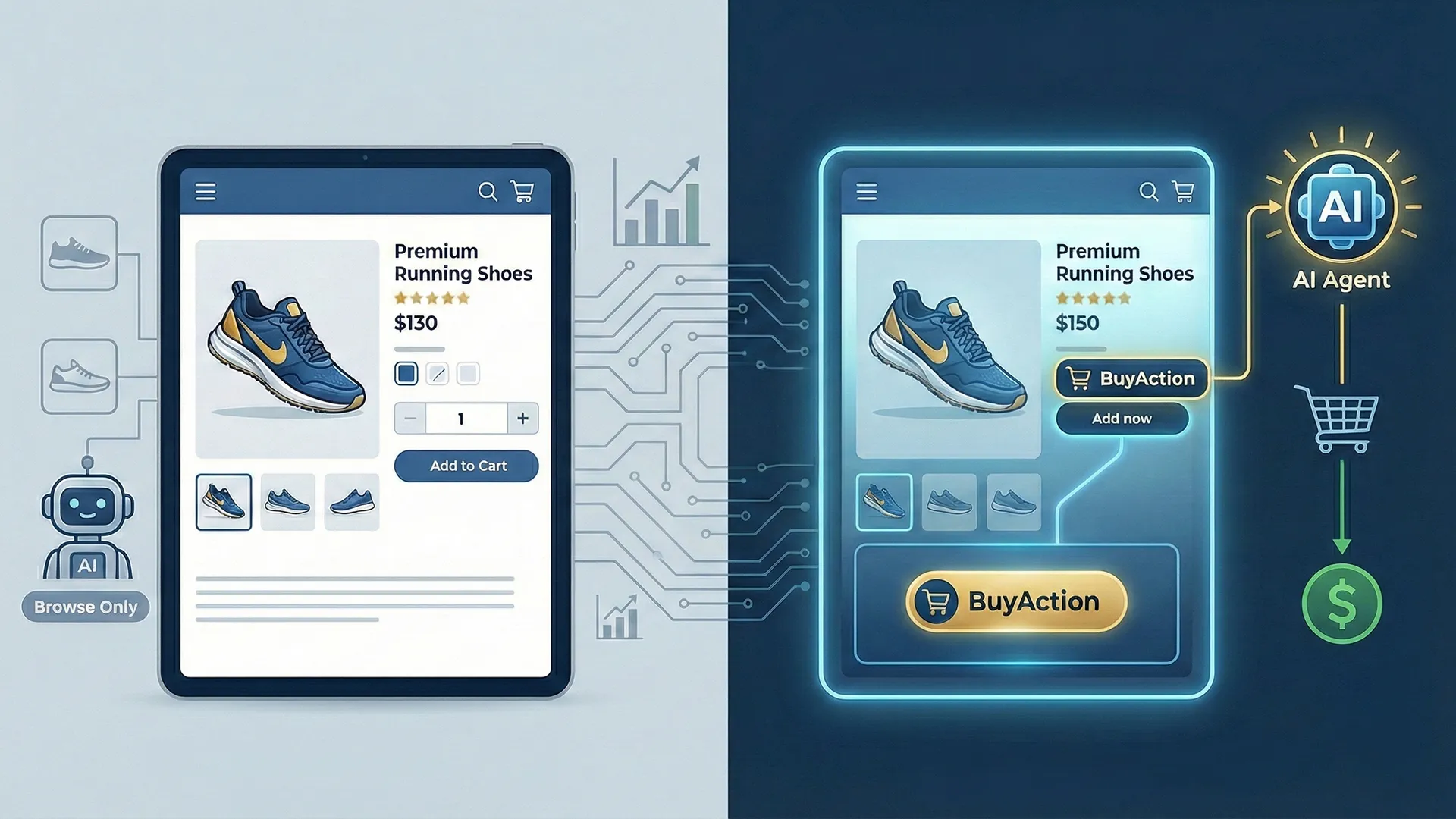

- The Zero-Click Reality: LLMs often satisfy user intent without ever sending traffic to a website. The “answer” is the destination.

- The Trust Factor: When ChatGPT explicitly recommends a software solution or a sneaker brand, it carries the weight of an unbiased, algorithmic endorsement, far more powerful than a paid ad.

- The Visibility Cliff: In traditional search, being ranked #4 still offered visibility. In a generative answer, if you aren’t in the top 2-3 mentions, you are effectively invisible.

To ensure accuracy, your methodology should reduce hallucination risk through controlled prompts, executing queries in a neutral, clean environment so that AI outputs reflect genuine brand relevance.

Defining Share of Model (SOM): SOM is the frequency and context with which a specific brand appears in the outputs of major LLMs in response to category-relevant prompts. It measures your brand’s presence within the AI’s training data and its retrieval-augmented generation (RAG) processes.

The Engineering Angle: A Methodology for Measuring SOM

For SOM to be a viable KPI for the C-suite, it must move beyond anecdotal evidence (“I asked ChatGPT about us, and it gave a good answer”). It requires a rigorous, quantifiable methodology.

We propose a standardised engineering approach to establish a baseline SOM, using a controlled prompt dataset and introducing a new metric: Citation Frequency. The novelty of your content (its Information Gain) directly affects whether your brand is included in AI outputs.

Step 1: Constructing the “Category 50” Prompt Dataset

To accurately gauge SOM, we cannot rely on random queries. We must construct a representative dataset of 50 prompts that mirror the buyer’s journey within your specific category.

The “Category 50” should be broken down structurally:

Informational Prompts (20 prompts): Top-of-funnel queries focused on education and problem identification.

- Example: “What are the key features to look for in enterprise CRM software?”

Commercial Investigation Prompts (20 prompts): Middle-of-funnel queries focused on comparison and best-of lists.

- Example: “Compare the top 3 project management tools for agile marketing teams.”

Transactional/Navigational Prompts (10 prompts): Bottom-of-funnel queries showing high purchase intent.

- Example: “What is the pricing structure for [Brand X] vs [Competitor Y]?”

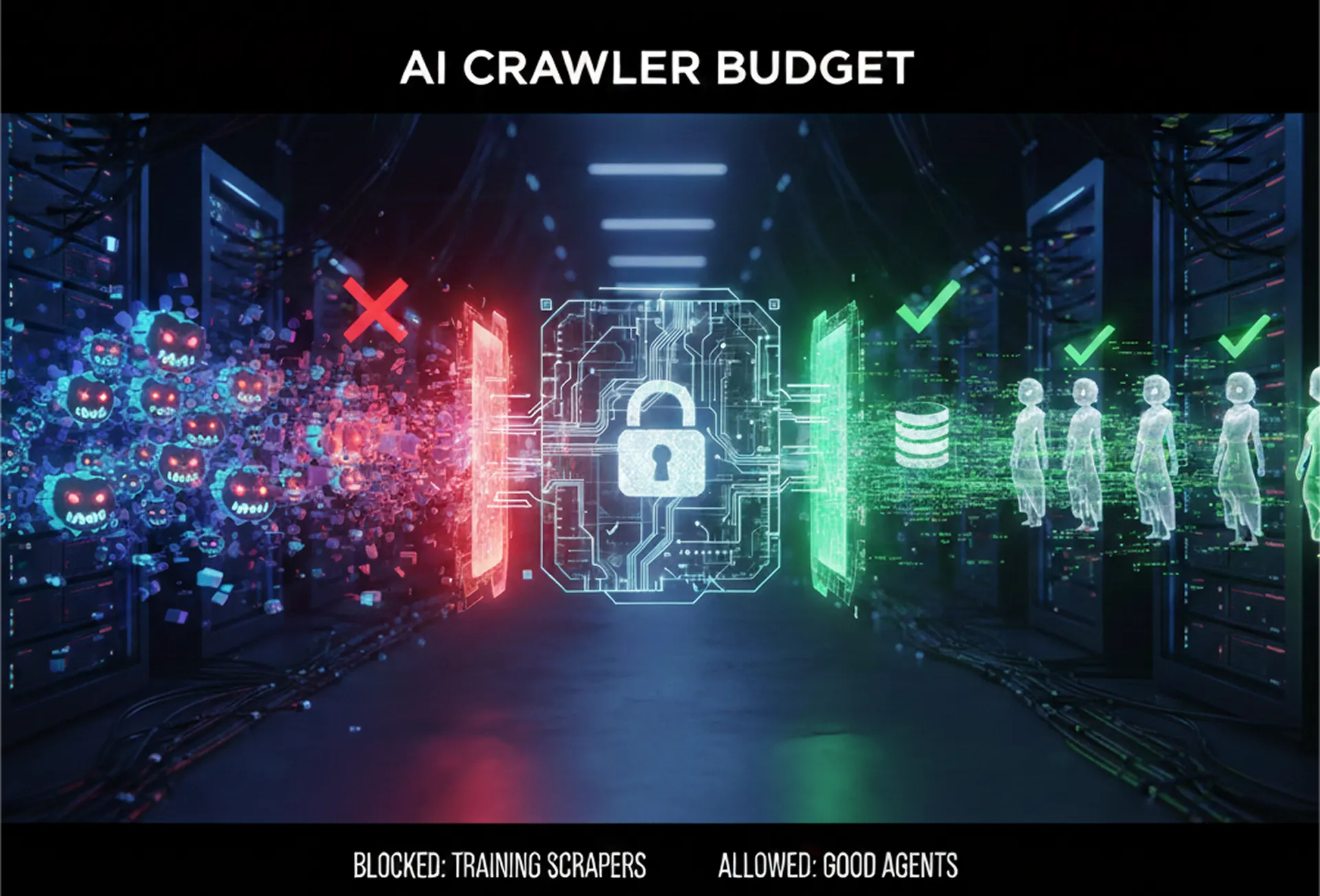
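The 20/20/10 split above is easy to drift away from as a prompt list evolves. The sketch below is a minimal illustration, not a prescribed tool: it encodes the target mix and rejects a dataset that does not match it. The `Prompt` record, the stage labels, and `validate_dataset` are hypothetical names chosen for this example.

```python
from collections import Counter
from dataclasses import dataclass

# Target prompt counts per funnel stage, taken from the "Category 50" breakdown.
TARGET_MIX = {"informational": 20, "commercial": 20, "transactional": 10}


@dataclass(frozen=True)
class Prompt:
    prompt_id: str
    stage: str  # must be one of the TARGET_MIX keys (hypothetical labels)
    text: str


def validate_dataset(prompts: list[Prompt]) -> None:
    """Raise ValueError if the dataset does not match the target mix."""
    unknown = {p.stage for p in prompts} - set(TARGET_MIX)
    if unknown:
        raise ValueError(f"unknown funnel stages: {sorted(unknown)}")

    counts = Counter(p.stage for p in prompts)
    for stage, expected in TARGET_MIX.items():
        if counts[stage] != expected:
            raise ValueError(f"{stage}: expected {expected} prompts, found {counts[stage]}")

    # Duplicate prompts would silently overweight one query in the score.
    ids = [p.prompt_id for p in prompts]
    texts = [p.text.strip().lower() for p in prompts]
    if len(set(ids)) != len(ids) or len(set(texts)) != len(texts):
        raise ValueError("duplicate prompt IDs or prompt text")


# The three example prompts from the breakdown, expressed in this format.
examples = [
    Prompt("inf-01", "informational", "What are the key features to look for in enterprise CRM software?"),
    Prompt("com-01", "commercial", "Compare the top 3 project management tools for agile marketing teams."),
    Prompt("tra-01", "transactional", "What is the pricing structure for [Brand X] vs [Competitor Y]?"),
]
```

Validating before every run keeps baselines comparable: if the mix changes between quarters, a shift in Citation Frequency may reflect the dataset rather than the models.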

Step 2: Controlled Execution Environment

To ensure data integrity, run these 50 prompts through the target LLMs (e.g., GPT-4o, Gemini Advanced) in a “clean” environment. This means using API calls with the temperature set to zero where possible (which reduces, but does not eliminate, run-to-run variation), or fresh browser sessions cleared of cookies and history so that personalization does not skew the results.
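The execution step can be sketched as a small harness. It assumes you wrap each vendor's API behind a hypothetical function that takes a prompt and a temperature and returns the answer text; no real SDK call is shown, and `execute_dataset`, `RunRecord`, and the `runs` parameter are illustrative names. Each prompt is sent as a fresh, single-turn request and repeated a few times, because a zero temperature does not guarantee identical outputs.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical client signature: (prompt, temperature) -> answer text.
# Wrap your vendor SDK call in a function of this shape; each call must be
# stateless, with no shared conversation history between prompts.
ModelClient = Callable[[str, float], str]


@dataclass(frozen=True)
class RunRecord:
    model: str
    prompt_id: str
    run: int
    temperature: float
    response: str


def execute_dataset(
    clients: dict[str, ModelClient],
    prompts: dict[str, str],
    runs: int = 3,
    temperature: float = 0.0,
) -> list[RunRecord]:
    """Send every prompt to every model `runs` times as fresh, single-turn calls."""
    records = []
    for model_name, client in clients.items():
        for prompt_id, text in prompts.items():
            for run in range(runs):
                # A new call per run: no prior messages, no user profile.
                response = client(text, temperature)
                records.append(RunRecord(model_name, prompt_id, run, temperature, response))
    return records
```

Keying `clients` by a specific model version rather than a product name makes later baselines comparable, since hosted models are updated over time.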

Step 3: Introducing the New KPI, “Citation Frequency”

Once the 50 prompts have been executed, the outputs need to be analyzed for a hard number: the Citation Frequency.

Citation Frequency is the percentage of total prompts where your brand is explicitly mentioned, recommended, or cited as a source within the generated answer.

The Formula:

(Prompts with at least one brand mention / Total prompts in dataset) × 100 = Citation Frequency %

The Scorecard Example: If your brand is cited in 18 out of the “Category 50” prompts, your Citation Frequency is 36%.
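Scoring can be automated once the answers are collected. This minimal sketch assumes brand detection by case-insensitive whole-phrase matching against a hand-maintained alias list, which is a simplification: it will miss paraphrases and misspellings, and it does not judge whether a mention is positive. `mentions_brand` and `citation_frequency` are hypothetical helpers, and each prompt counts at most once, however many times the brand appears in its answer.

```python
import re


def mentions_brand(response: str, aliases: list[str]) -> bool:
    """True if any alias appears as a whole word or phrase (case-insensitive)."""
    for alias in aliases:
        # Lookarounds instead of \b so aliases that start or end with
        # punctuation still match; "Acme" will not match inside "Acmecorp".
        pattern = r"(?<!\w)" + re.escape(alias) + r"(?!\w)"
        if re.search(pattern, response, flags=re.IGNORECASE):
            return True
    return False


def citation_frequency(responses: list[str], aliases: list[str]) -> float:
    """Percentage of prompts whose answer mentions the brand at least once."""
    if not responses:
        raise ValueError("no responses to score")
    prompts_with_mention = sum(mentions_brand(r, aliases) for r in responses)
    return 100 * prompts_with_mention / len(responses)


# Scorecard example with a hypothetical brand: cited in 18 of 50 answers.
answers = ["Acme CRM is a strong option."] * 18 + ["Consider the market leaders."] * 32
print(citation_frequency(answers, ["Acme", "Acme CRM"]))  # 36.0
```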

This provides a benchmark. If your main competitor has a Citation Frequency of 65%, you have a quantifiable gap in your Generative Engine Optimization (GEO) strategy.
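To express the gap as a number, every brand can be scored against the same set of answers. In this sketch the brand names, aliases, and the `scorecard` and `gap_points` helpers are hypothetical, and brand detection is a simplified, case-insensitive whole-phrase match against an alias list. Because several brands can appear in one answer, the scores do not sum to 100%.

```python
import re


def mentions(response: str, aliases: list[str]) -> bool:
    """Simplified brand detection: case-insensitive whole-phrase alias match."""
    return any(
        re.search(r"(?<!\w)" + re.escape(a) + r"(?!\w)", response, flags=re.IGNORECASE)
        for a in aliases
    )


def scorecard(responses: list[str], brands: dict[str, list[str]]) -> dict[str, float]:
    """Citation Frequency (%) for each brand, scored over the same answers."""
    if not responses:
        raise ValueError("no responses to score")
    return {
        brand: 100 * sum(mentions(r, aliases) for r in responses) / len(responses)
        for brand, aliases in brands.items()
    }


def gap_points(scores: dict[str, float], brand: str, competitor: str) -> float:
    """Competitor's score minus yours, in percentage points."""
    return scores[competitor] - scores[brand]


# Hypothetical brands and answers (50 prompts in total).
answers = (
    ["Acme and Globex are both solid choices."] * 18
    + ["Globex leads this category."] * 14
    + ["It depends on your team size."] * 18
)
scores = scorecard(answers, {"Acme": ["Acme"], "Globex": ["Globex"]})
print(scores)                                # {'Acme': 36.0, 'Globex': 64.0}
print(gap_points(scores, "Acme", "Globex"))  # 28.0
```

Reporting the gap in percentage points (competitor score minus yours) avoids the ambiguity of quoting a relative percentage.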

The CMO Payoff: Why Citation Frequency Matters

Moving Citation Frequency onto the marketing dashboard gives an early, quantified view of how visible your brand will be as discovery shifts to AI.

1. The Ultimate Measure of Brand Authority: If an LLM trained on a vast portion of the public web determines that your brand is one of the definitive answers to a category question, that is the highest form of digital authority. A low Citation Frequency indicates that the models view your competitors as the canonical source of truth in your sector.

2. Protecting Future Market Share: As consumer behaviour shifts towards AI-first discovery (think Siri’s ChatGPT integration, or Google’s AI Overviews), SOM will increasingly correlate with market share. Today’s low Citation Frequency is tomorrow’s lost revenue.

3. Guiding Generative Engine Optimization (GEO): Just as SEO dictates content strategy, measuring SOM dictates your GEO strategy. Knowing which types of prompts (informational vs. commercial) you are failing on allows your team to create the specific, structured data and authoritative content needed to feed the models, with an emphasis on content that adds new information (high Information Gain) rather than repeating what already exists. In the AI era, visibility is no longer about rankings alone, but about how often your brand appears inside model-generated answers.
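The third point depends on breaking Citation Frequency down by prompt type. A minimal sketch, assuming each scored prompt has already been reduced to a (stage, mentioned) pair; `frequency_by_stage` and `weakest_stage` are hypothetical helpers, and the stage labels and results are illustrative only.

```python
from collections import defaultdict


def frequency_by_stage(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Citation Frequency (%) per funnel stage from (stage, mentioned) pairs."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for stage, mentioned in results:
        totals[stage] += 1
        hits[stage] += int(mentioned)
    return {stage: 100 * hits[stage] / totals[stage] for stage in totals}


def weakest_stage(by_stage: dict[str, float]) -> str:
    """The stage with the lowest Citation Frequency: where GEO work should start."""
    return min(by_stage, key=by_stage.get)


# Hypothetical results for a "Category 50" run (18 of 50 mentions overall).
results = (
    [("informational", True)] * 12 + [("informational", False)] * 8
    + [("commercial", True)] * 3 + [("commercial", False)] * 17
    + [("transactional", True)] * 3 + [("transactional", False)] * 7
)
by_stage = frequency_by_stage(results)
print(by_stage)                 # {'informational': 60.0, 'commercial': 15.0, 'transactional': 30.0}
print(weakest_stage(by_stage))  # commercial
```

In this hypothetical run the overall score is 36%, but the breakdown shows the brand is strong on informational queries and weak on commercial investigation, which points content work at comparison and best-of material first.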

Conclusion: The Mandate to Measure

The transition from Share of Voice to Share of Model is not a nuance; it is a foundational shift in digital visibility.

CMOs cannot afford to fly blind in the AI era. By adopting a rigorous methodology like the “Category 50” prompt test and tracking Citation Frequency, marketing leaders can turn the abstract threat of generative AI into a manageable, measurable KPI.

The first step is establishing your baseline. Where does your brand rank today in the mind of the machine?

Frequently Asked Questions

Q1: What is Share of Model (SOM), and how does it relate to Share of Voice?

Share of Model (SOM) measures a brand’s visibility and sentiment within AI-generated answers (like ChatGPT), whereas Share of Voice (SOV) measures visibility in traditional search engine lists and advertising. SOM is the modern evolution of SOV: as users shift from “searching for links” to “asking for answers,” tracking your presence in those answers becomes the primary metric for market share.

Q2: How do companies measure Share of Model in digital marketing campaigns?

Companies measure SOM by running a “Citation Frequency” test. This involves executing a set of 50–100 strategic prompts (e.g., “Best CRM for small business”) through major LLMs in a neutral environment. Marketers then calculate the percentage of outputs where their brand is mentioned, recommended, or cited as a source to establish a measurable benchmark.

Q3: What are some examples of brands successfully increasing their Share of Model in competitive markets?

- Gentle Monster: Analyzed how different AI models perceived their brand attributes and optimized their digital assets to match, resulting in higher visibility and improved ROAS.

- Ariel: Identified visibility gaps between different AI models (e.g., high mentions on Llama vs. low on Gemini) to tailor content strategies for specific platforms.

- TripAdvisor: Maintains high SOM by providing massive amounts of structured, authoritative review data that LLMs prefer to cite as “ground truth” when answering travel queries.