Sentiment Drift Analysis: Monitoring Brand Perception in AI Answers

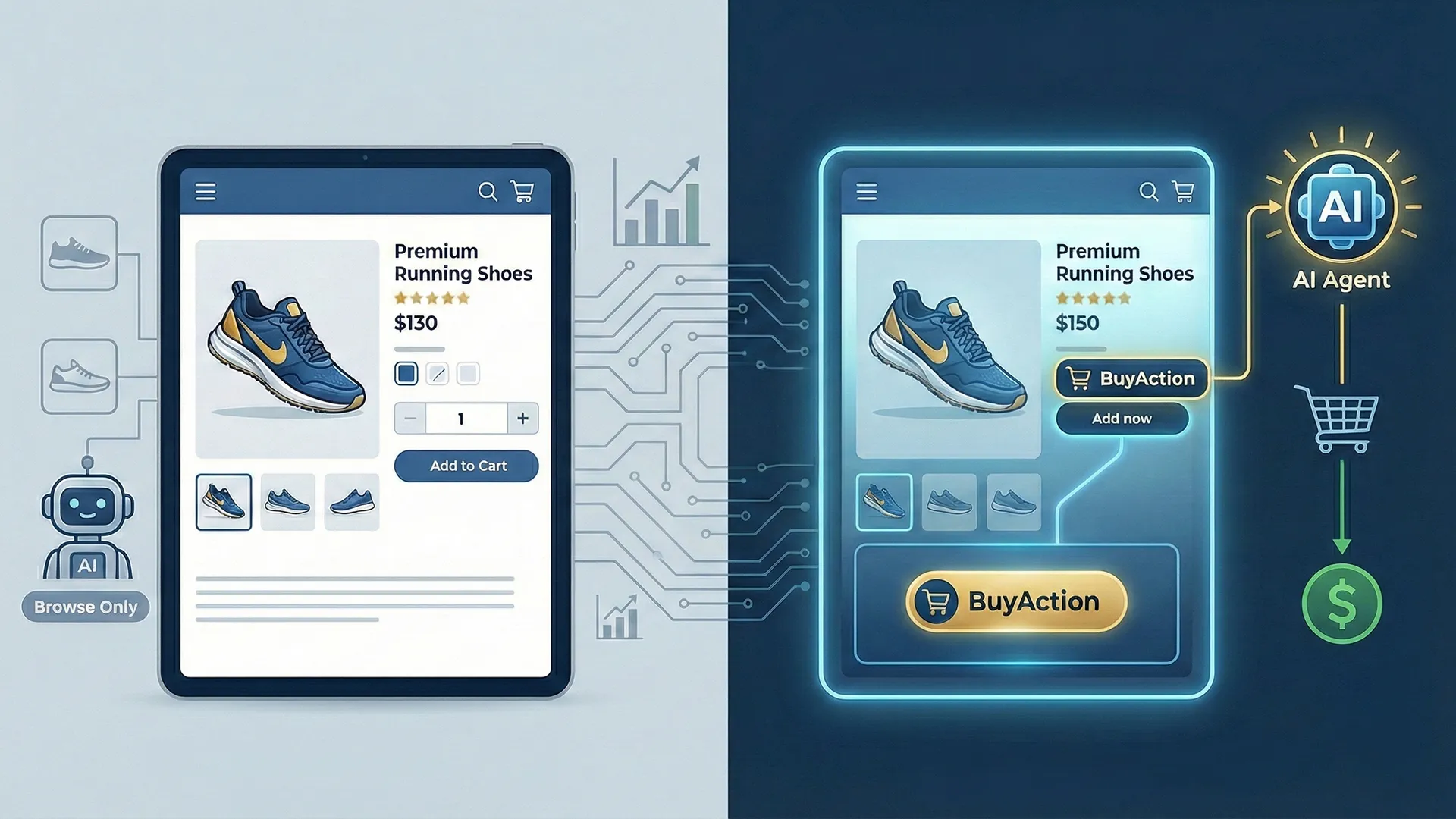

Traditional Search Engine Reputation Management (SERM) is evolving rapidly into Generative Engine Optimization (GEO). For decades, defensive SEO strategies focused on dominating the SERP “above the fold” to suppress negative links. Today, the battleground has shifted. The primary risk is no longer a negative article ranking at position #4; it is a Large Language Model (LLM) synthesizing that article into a definitive, factual-sounding answer about your brand.

When an AI response shifts from “Company X is a leading provider of…” to “Company X, recently criticized for its handling of…”, a critical threshold has been crossed. This is Sentiment Drift.

For PR crisis teams, this drift is insidious because it is often silent until it becomes systemic. Once a negative narrative is enshrined in an LLM’s parametric memory or consistently retrieved via Retrieval-Augmented Generation (RAG), dislodging it becomes significantly harder than burying a standard web link.

This article outlines the technical architecture for an automated defensive warning system. We will define the engineering pipelines necessary to programmatically probe AI models, quantify sentiment shifts with high fidelity, and correlate that drift directly with external press dynamics.

The Technical Premise: Quantifying Narrative Shifts

Sentiment Drift Analysis is not about manually checking ChatGPT every Monday. It requires an enterprise-grade, automated data pipeline designed to measure the velocity and trajectory of brand perception across major generative engines.

This is also why vector audits for accurate sentiment tracking are critical. Without an understanding of content redundancy and novelty, an apparent sentiment change may reflect AI misinterpretation rather than true narrative drift.

The core technical challenge is twofold:

- Stochasticity Management: LLMs are probabilistic. A single prompt can yield different answers. Our system must account for variance to identify a true trend versus statistical noise.

- Attribution Mapping: Determining why the sentiment shifted by linking the drift temporally to ingested external data (news coverage).
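As an illustration of the stochasticity problem, the sketch below compares two batches of sampled sentiment scores and asks whether the difference in their means is larger than sampling noise would explain. It assumes scores are already on a -1.0 to +1.0 scale; the function name `is_significant_shift` and the cut-off of 2.0 standard errors are illustrative assumptions, not part of any specific library.

```python
import math
import statistics


def is_significant_shift(baseline, current, z_threshold=2.0):
    """Compare two batches of sentiment scores.

    Returns (shifted, z), where z is the difference of the batch means
    expressed in standard errors. Each batch needs at least two samples.
    """
    mean_diff = statistics.mean(current) - statistics.mean(baseline)
    # Standard error of the difference between two independent sample means.
    se = math.sqrt(
        statistics.variance(baseline) / len(baseline)
        + statistics.variance(current) / len(current)
    )
    if se == 0.0:
        # No variance inside either batch: any difference is a real one.
        if mean_diff == 0.0:
            return False, 0.0
        return True, math.copysign(math.inf, mean_diff)
    z = mean_diff / se
    return abs(z) > z_threshold, z


# Hypothetical scores from repeated runs of the same prompt set.
last_week = [0.60, 0.50, 0.70, 0.55, 0.65]
today_noise = [0.58, 0.62, 0.50, 0.66, 0.60]
today_drop = [0.10, 0.20, 0.05, 0.15, 0.10]
```

Here `today_noise` differs from `last_week` only by run-to-run variance, while `today_drop` is a shift that variance alone is unlikely to produce.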

Below is the blueprint for constructing this defensive architecture.

Architecture: The Automated Sentiment Monitoring Pipeline

We propose a four-stage pipeline orchestrated by tools such as Apache Airflow or Prefect, running daily or sub-daily, depending on brand volatility.

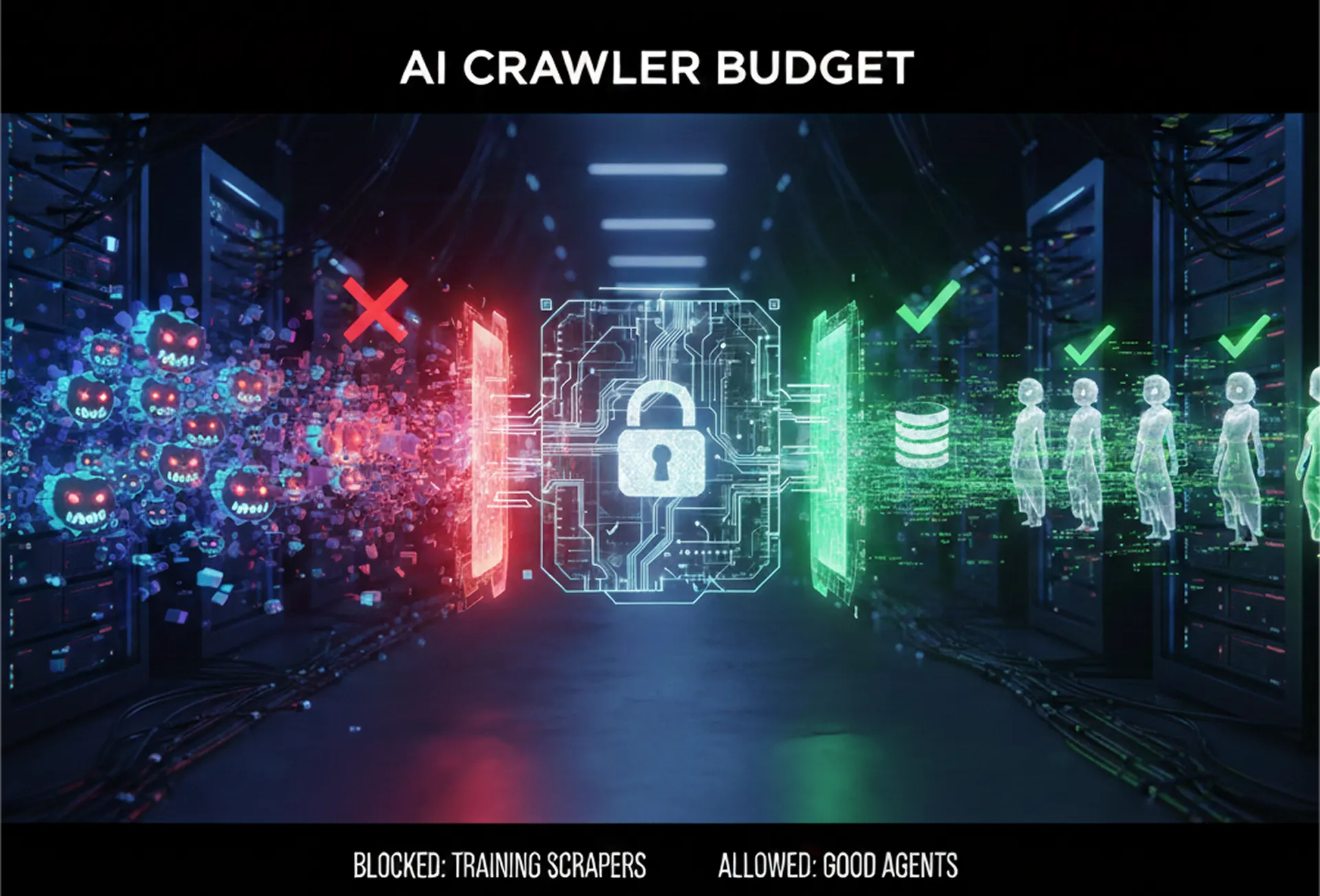

Stage 1: The Probing Layer (Data Ingestion)

We must programmatically query target LLMs (e.g., GPT-4 via the OpenAI API, Claude via the Anthropic API, Perplexity via its Sonar API) to generate a corpus of brand descriptions.

Establishing baseline SOM metrics to contextualize sentiment drift ensures that the prompts reflect the brand’s actual visibility within the AI models, helping differentiate between model bias and genuine sentiment changes.

Technical Implementation: Do not rely on a single “Who is [Brand]?” prompt. To capture a true cross-section of latent sentiment, use Prompt Permutations with varying temperatures.

- Neutral Probes: “Describe [Brand]’s business model.”

- Adversarial Probes: “What are common criticisms of [Brand]?”

- Comparative Probes: “Compare [Brand] to [Competitor] regarding corporate ethics.”

Engineering Note: Ensure API calls use a moderately high temperature setting (e.g., 0.7) across multiple iterations to explore the model’s probability distribution. We need to capture the varied ways the model might describe the brand, not just its most likely path.
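The prompt permutations described above can be sketched as a simple job generator. This is a minimal illustration that only builds the list of probes; the actual API calls are left out. The names `PROBE_TEMPLATES`, `ProbeJob`, and `build_probe_jobs` are hypothetical, and the default of five iterations per prompt is an assumption.

```python
from dataclasses import dataclass
from itertools import product

# Probe wording taken from the three probe types listed above.
PROBE_TEMPLATES = {
    "neutral": "Describe {brand}'s business model.",
    "adversarial": "What are common criticisms of {brand}?",
    "comparative": "Compare {brand} to {competitor} regarding corporate ethics.",
}


@dataclass(frozen=True)
class ProbeJob:
    probe_type: str
    prompt: str
    temperature: float
    iteration: int


def build_probe_jobs(brand, competitor, temperatures=(0.7,), iterations=5):
    """Expand every template x temperature x iteration into one job."""
    jobs = []
    combos = product(PROBE_TEMPLATES.items(), temperatures, range(iterations))
    for (probe_type, template), temperature, iteration in combos:
        # Templates without a {competitor} slot simply ignore that argument.
        prompt = template.format(brand=brand, competitor=competitor)
        jobs.append(ProbeJob(probe_type, prompt, temperature, iteration))
    return jobs


jobs = build_probe_jobs("Acme", "Globex", temperatures=(0.3, 0.7), iterations=4)
```

Each `ProbeJob` would then be sent to every target engine, with the response stored alongside the probe type and temperature so that later stages can compare like with like.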

Stage 2: The Analysis Engine (Sentiment Scoring)

Standard positive/negative sentiment analysis is insufficient for crisis monitoring. We require nuanced, Aspect-Based Sentiment Analysis (ABSA) to detect subtle shifts from “positive” to “neutral-leaning-negative.”

Technical Implementation: Raw textual outputs from Stage 1 are passed to a specialized NLP model. Instead of generic sentiment models, deploy domain-adapted models (e.g., FinBERT for financial clients, or custom RoBERTa-base models fine-tuned on crisis communications data).

At scale, this requires automating checks on structured content to verify entity accuracy, sentiment alignment, and confidence scores before using outputs for crisis or brand decision-making.

The output should not be a binary label, but a compound polarity score ranging from -1.0 (extremely negative) to +1.0 (extremely positive), alongside confidence intervals.

We must also run Named Entity Recognition (NER) on the outputs to identify which specific topics (e.g., “CEO,” “Product Safety,” “Data Privacy”) are driving the sentiment score down.
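A minimal sketch of the scoring step, assuming the classifier returns three class probabilities (negative, neutral, positive) for each response and that a topic label has already been attached to each scored passage. Collapsing the probabilities as positive minus negative is one common convention rather than a fixed standard, and the normal-approximation interval is a simplification. All function names are illustrative.

```python
import math
import statistics
from collections import defaultdict


def compound_score(p_negative, p_neutral, p_positive):
    """Collapse class probabilities into one score in [-1.0, +1.0]."""
    total = p_negative + p_neutral + p_positive
    return (p_positive - p_negative) / total


def mean_with_ci(scores, z=1.96):
    """Mean score with an approximate 95% confidence interval."""
    mean = statistics.mean(scores)
    if len(scores) < 2:
        # A single sample gives no spread to estimate an interval from.
        return mean, (mean, mean)
    half_width = z * statistics.stdev(scores) / math.sqrt(len(scores))
    return mean, (max(-1.0, mean - half_width), min(1.0, mean + half_width))


def scores_by_aspect(records):
    """records: iterable of (aspect, score) pairs -> {aspect: (mean, ci)}."""
    grouped = defaultdict(list)
    for aspect, score in records:
        grouped[aspect].append(score)
    return {aspect: mean_with_ci(values) for aspect, values in grouped.items()}


# Hypothetical scored passages, tagged with the topic they mention.
by_aspect = scores_by_aspect(
    [("CEO", -0.5), ("CEO", -0.3), ("Product Safety", 0.4)]
)
```

Grouping by aspect makes it visible when an overall score is being pulled down by one topic while the others remain stable.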

Stage 3: The Correlation Layer (Contextualization)

A drop in sentiment score from +0.6 to +0.1 is useless without context. Stage 3 integrates external signal data.

Technical Implementation: In parallel with the LLM probing, the pipeline must ingest real-time media mentions from sources such as the GDELT Project, NewsAPI, or enterprise media monitoring firehoses.

This data needs to be quantified similarly to the AI outputs. We apply the same sentiment analysis models to the day’s press coverage to create a “Media Sentiment Index.”
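One simple way to build such an index is a weighted daily mean of article scores. The sketch below assumes each article has already been scored on the same -1.0 to +1.0 scale and carries a weight (for example, outlet reach); the weighting scheme and the name `media_sentiment_index` are assumptions for illustration.

```python
from collections import defaultdict
from datetime import date


def media_sentiment_index(articles):
    """articles: iterable of (published_date, score, weight) tuples.

    Returns {date: weighted mean score} for every day with coverage.
    """
    weighted_sum = defaultdict(float)
    weight_total = defaultdict(float)
    for published, score, weight in articles:
        weighted_sum[published] += score * weight
        weight_total[published] += weight
    return {
        day: weighted_sum[day] / weight_total[day]
        for day in weighted_sum
        if weight_total[day] > 0
    }


# Hypothetical coverage: one small positive piece, one large negative one.
index = media_sentiment_index(
    [
        (date(2024, 1, 1), 0.5, 1.0),
        (date(2024, 1, 1), -0.5, 3.0),
        (date(2024, 1, 2), 0.2, 1.0),
    ]
)
```

Setting every weight to 1.0 reduces this to a plain daily average.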

Stage 4: The Drift Detection & Alerting Mechanism

This is the core component for the PR Crisis team. We are looking for statistical deviation over time.

We plot two time-series datasets:

- The Averaged LLM Sentiment Score (7-day rolling average).

- The Media Sentiment Index (24-hour rolling average).

The Technical Hook: Lag Correlation. We use cross-correlation functions to estimate the ingestion latency. If negative press hits on Day 0 and Perplexity’s sentiment score drops sharply on Day 2, we have established a 48-hour “drift lag” for that specific engine’s RAG mechanism.
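The lag estimate can be sketched with a plain Pearson correlation computed at successive day offsets. This assumes both series have been resampled to one value per day and aligned on the same start date; `estimate_drift_lag` and the 7-day search window are illustrative choices.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # A flat series carries no correlation signal.
    return cov / math.sqrt(var_x * var_y)


def estimate_drift_lag(media, llm, max_lag=7):
    """Find the lag (in days) at which media[t] best predicts llm[t + lag]."""
    best_lag, best_r = 0, -1.0
    for lag in range(max_lag + 1):
        shifted_llm = llm[lag:]
        n = min(len(media), len(shifted_llm))
        if n < 3:
            break  # Too few overlapping points to correlate.
        r = pearson(media[:n], shifted_llm[:n])
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r


# Hypothetical daily series: the LLM score echoes the press two days later.
media_index = [0.5, 0.5, -0.8, -0.6, 0.1, 0.4, 0.5, 0.5, 0.3, 0.5]
llm_score = [0.5, 0.5] + media_index[:-2]
lag_days, correlation = estimate_drift_lag(media_index, llm_score)
```

In practice the lag should be estimated per engine and re-estimated periodically, since retrieval indexes and model updates can change it.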

Alert Triggers: An alert is fired to the Crisis Slack channel not merely when sentiment is negative, but when the rate of change (the derivative of the sentiment curve) exceeds a threshold derived from baseline volatility.
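A minimal sketch of that trigger, using day-over-day differences as a discrete stand-in for the derivative and the standard deviation of recent differences as the measure of baseline volatility. The 14-day baseline window, the multiplier `k=3.0`, and the choice to alert only on downward moves are assumptions to be tuned per brand.

```python
import statistics


def should_alert(scores, baseline_window=14, k=3.0):
    """scores: daily averaged LLM sentiment values, oldest first.

    Alerts when the latest daily drop is more than k times the standard
    deviation of the preceding `baseline_window` daily changes.
    """
    deltas = [later - earlier for earlier, later in zip(scores, scores[1:])]
    if len(deltas) < baseline_window + 1:
        return False  # Not enough history to estimate volatility.
    latest = deltas[-1]
    baseline = deltas[-(baseline_window + 1):-1]
    volatility = statistics.stdev(baseline)
    return latest < 0 and abs(latest) > k * volatility


# Hypothetical history: sixteen calm days, then the same with a sharp drop.
calm = [0.60, 0.61, 0.59, 0.60, 0.62, 0.60, 0.61, 0.59,
        0.60, 0.61, 0.60, 0.59, 0.61, 0.60, 0.61, 0.60]
crisis = calm[:-1] + [0.30]
```

With this data, `should_alert(calm)` stays quiet while `should_alert(crisis)` fires, even though the crisis score of 0.30 is still positive in absolute terms.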

Strategic Application: The Defensive SEO Counter-Strike

For the PR Crisis persona, this engineering feat translates into a proactive defensive capability.

By understanding the “drift lag,” the team moves from reactive clean-up to proactive interception. If the monitoring system detects a developing negative trend in press activity that has not yet impacted LLM outputs, the Defensive SEO team has a critical window of opportunity.

They can deploy “counter-injection” strategies: publishing highly authoritative, factually correct content that carries the desired brand narratives and is optimized specifically for the retrieval mechanisms of RAG engines (e.g., clear semantic structuring, high information density). The goal is to introduce positive sentiment data into the RAG retrieval pool before the negative press solidifies as the dominant narrative.

Conclusion

In the generative AI era, brand perception is fluid and algorithmically determined. Relying on manual checks or anecdotal evidence is a strategic vulnerability. By implementing highly technical Sentiment Drift Analysis pipelines, organizations can transform brand reputation from an abstract concept into a measurable, monitorable engineering constraint.