The Robots.txt of 2026: Managing AI Crawler Budgets

For the modern Infrastructure Lead, the robots.txt file has undergone a fundamental transformation. In the legacy era of SEO, this file was a simple set of directions for Googlebot to find your sitemap. In 2026, it has become a frontline defense mechanism for resource management, egress cost control, and server stability.

As we transition from traditional search to an ecosystem of Answer Engine Optimization (AEO), your origin server is no longer just serving human eyeballs; it is being probed, digested, and scraped by an army of autonomous agents. If you aren’t managing your AI crawler budget, you are effectively subsidizing the training of global LLMs with your own infrastructure spend.

The Engineering Problem: The "Shadow" Crawl

The challenge for infrastructure teams today is the sheer volume of “invisible” traffic. Unlike traditional search engines that crawl to index and drive traffic, many AI agents crawl to ingest and “learn.” This distinction is critical for your bottom line.

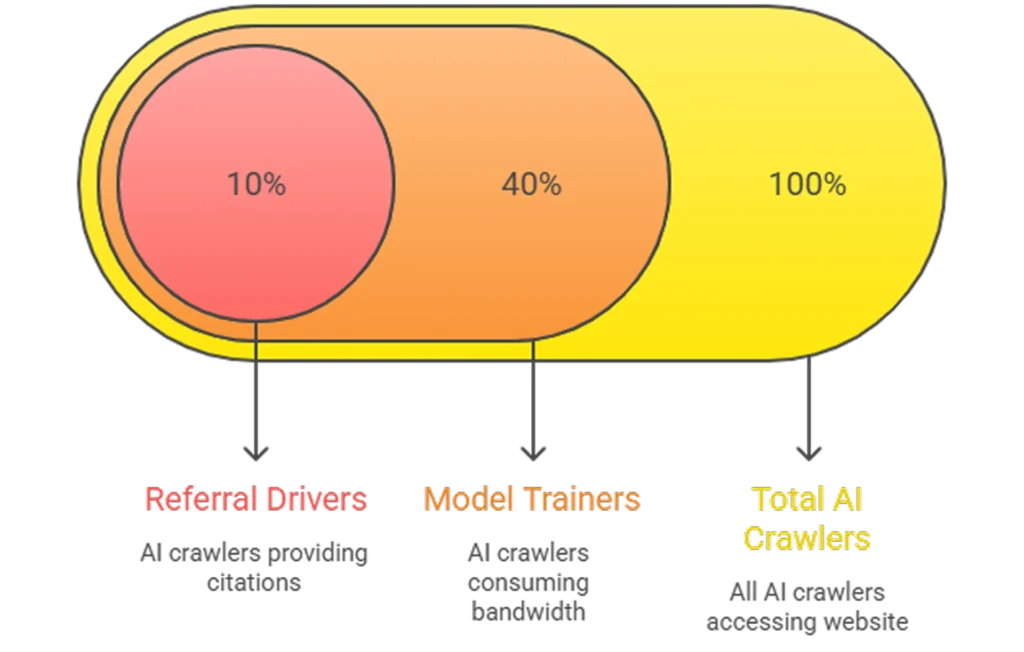

A standard training bot, such as CCBot (Common Crawl) or GPTBot, can consume up to 40% of a site’s bandwidth during a deep crawl cycle. Because these bots are built to collect entire sites as training data rather than just fresh content for a search index, they often bypass CDN caches, hit unoptimized endpoints, and increase P99 latency. This is the “Shadow Crawl”: a massive drain on resources that yields zero immediate referral traffic.

The diagram above illustrates the critical inefficiency in unmanaged AI Crawler Budgets for modern infrastructure.

- Referral Drivers (10%): While this hypothetical ratio may vary, it represents the “Good Agents” that drive traffic and provide citations, offering a high return on investment for your server resources.

- Model Trainers (40%): This illustrative benchmark represents training scrapers that consume massive bandwidth without sending any visitors back to your site, contributing to the “Shadow Crawl”.

- The Goal: By using surgical robots.txt blocks, you can reclaim that 40% of wasted bandwidth and protect your server’s P99 latency.

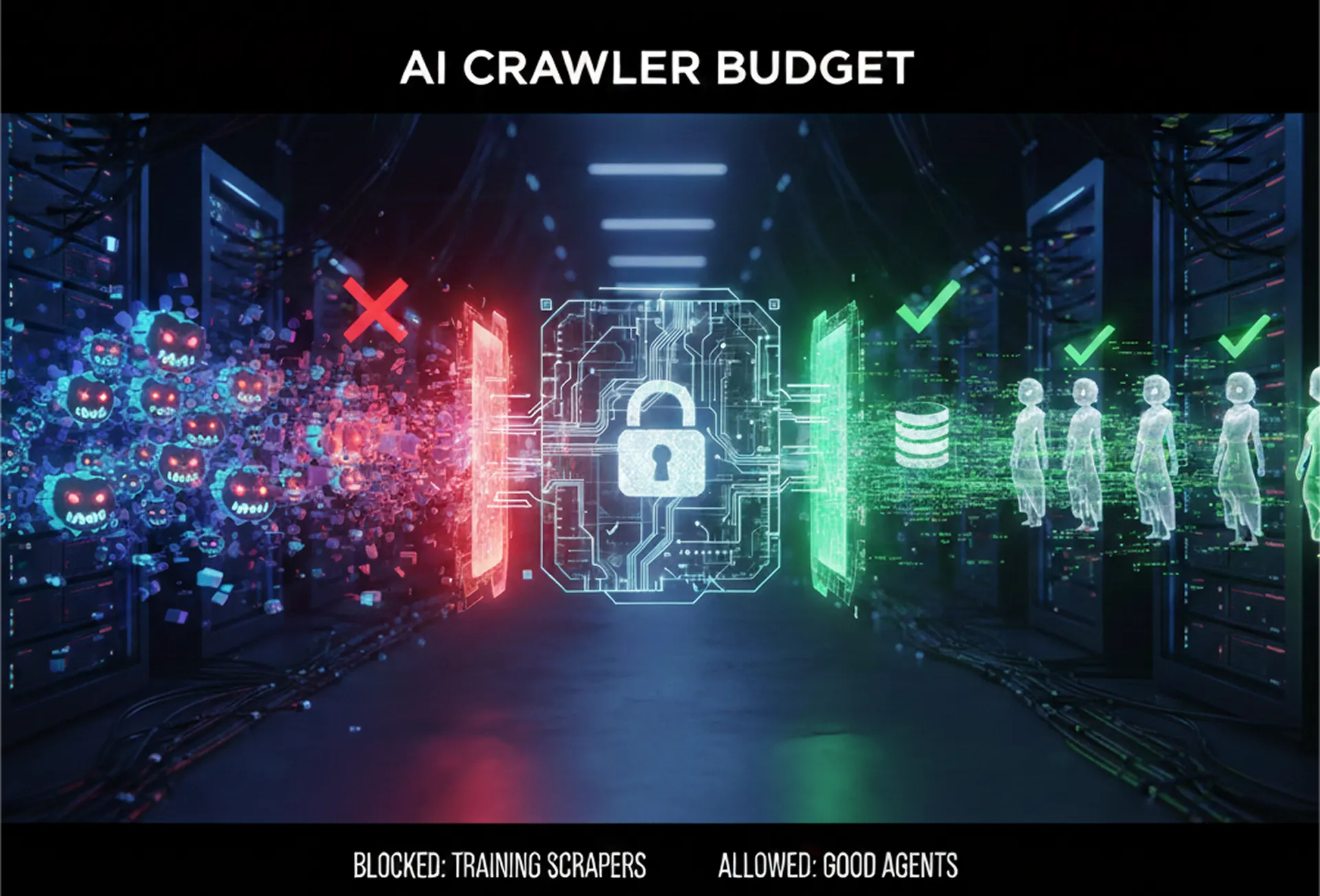

1. Triage: Distinguishing "Good Agents" from "Scrapers"

To protect your infrastructure, you must move away from the “allow-all” mindset and implement a surgical triage. Not all AI bots are created equal.

The Referral-Drivers (Good Agents)

These bots fetch information in real-time to answer a specific user query. They are the backbone of the new Agentic SEO economy. When a user asks an agent to “find the best enterprise CRM,” these bots hit your site to retrieve current pricing or features. They provide citations and drive high-intent traffic.

- Key Agents: OAI-SearchBot, ChatGPT-User, PerplexityBot.

The Model-Trainers (Resource Drains)

These bots are here for bulk ingestion. They don’t drive traffic; they drive costs. They capture your intellectual property as training data to improve their models’ weights.

- Key Agents: GPTBot, CCBot, ClaudeBot.
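To see how your own traffic splits between these two groups, a log audit can be sketched roughly as follows. This is a minimal sketch, assuming your access-log entries have already been parsed into (User-Agent, bytes sent) pairs. The `classify` and `bandwidth_share` helpers and the sample records are hypothetical; the agent lists simply mirror the ones above.

```python
from collections import Counter

# Agent names mirror the two lists above; matching is a case-insensitive
# substring test on the User-Agent header (an assumption, not a standard).
REFERRAL_AGENTS = ("OAI-SearchBot", "ChatGPT-User", "PerplexityBot")
TRAINING_AGENTS = ("GPTBot", "CCBot", "ClaudeBot")


def classify(user_agent: str) -> str:
    """Label a User-Agent string as 'referral', 'training', or 'other'."""
    ua = user_agent.lower()
    if any(name.lower() in ua for name in REFERRAL_AGENTS):
        return "referral"
    if any(name.lower() in ua for name in TRAINING_AGENTS):
        return "training"
    return "other"


def bandwidth_share(records):
    """Return each class's share of total bytes served, as a fraction."""
    totals = Counter()
    for user_agent, bytes_sent in records:
        totals[classify(user_agent)] += bytes_sent
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {label: count / grand_total for label, count in totals.items()}


# Hypothetical sample records: (User-Agent, bytes sent)
sample = [
    ("Mozilla/5.0 (compatible; GPTBot/1.0)", 400),
    ("Mozilla/5.0 (compatible; OAI-SearchBot/1.0)", 100),
    ("Mozilla/5.0 (Windows NT 10.0) Chrome/120.0", 500),
]
shares = bandwidth_share(sample)
for label, share in sorted(shares.items()):
    print(f"{label}: {share:.0%}")
```

Because User-Agent strings can be spoofed, a tally like this is only a first approximation of who is actually crawling.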

The 2026 Robots.txt Configuration

Your infrastructure-first robots.txt should reflect this distinction clearly:

```text
# BLOCK: High-Volume Training Scrapers (No Referral Value)
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: ClaudeBot
Disallow: /

# ALLOW: High-Value AI Search & Agents (Referral Value)
User-agent: OAI-SearchBot
Allow: /

User-agent: ChatGPT-User
Allow: /

# GOOGLE-EXTENDED: Opt-out of Gemini Training while keeping Search
User-agent: Google-Extended
Disallow: /
```
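Before deploying a configuration like this, it can help to check that the rules parse the way you expect. The sketch below uses Python's standard `urllib.robotparser` to evaluate the same rules; the `https://example.com/pricing` URL is a placeholder, and real crawlers may match User-Agent tokens differently from Python's parser, so treat this as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# The same rules as the configuration above, in compact form.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: OAI-SearchBot
Allow: /

User-agent: ChatGPT-User
Allow: /

User-agent: Google-Extended
Disallow: /
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
test_url = "https://example.com/pricing"  # placeholder URL for illustration

for agent in ("GPTBot", "CCBot", "ClaudeBot", "OAI-SearchBot",
              "ChatGPT-User", "Google-Extended", "Googlebot"):
    verdict = "allowed" if rules.can_fetch(agent, test_url) else "blocked"
    print(f"{agent}: {verdict}")
```

Agents with no matching group, such as Googlebot here, fall through to the default and remain allowed, which is how regular search stays unaffected by the Google-Extended opt-out.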

2. Infrastructure Resilience: Protecting the Origin

Simply updating a text file is rarely enough. In 2026, many aggressive scrapers ignore robots.txt or spoof their User-Agents. To truly manage your budget, you must integrate these rules into your Edge Architecture.

Edge-Level Triage with WAF

Modern infrastructure requires a Web Application Firewall (WAF) to perform a “handshake-level” block. By the time an AI scraper hits your application logic, you’ve already paid for the CPU cycle. By implementing AI-specific firewall rules, you can reject these requests at the Edge.

This is particularly important when you have sensitive endpoints. For example, if you have implemented API-First SEO to serve machine-readable data, you must ensure that only “Action-capable” agents can access transactional endpoints, while training bots are strictly limited to your public documentation.
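The policy described above can be sketched as a small decision function of the kind an edge worker or WAF rule might encode. This is an illustration under stated assumptions, not any vendor's rule syntax: the `/docs/` and `/api/` prefixes, the `edge_decision` helper, the generic bot markers, and the choice to treat the referral agents as “Action-capable” are all hypothetical.

```python
# Hypothetical path layout; adjust to your own routing.
PUBLIC_DOCS_PREFIX = "/docs/"
TRANSACTIONAL_PREFIX = "/api/"

TRAINING_AGENTS = ("GPTBot", "CCBot", "ClaudeBot")
# Assumption: the referral agents are the ones treated as action-capable.
ACTION_AGENTS = ("OAI-SearchBot", "ChatGPT-User")
# Assumption: crude markers for self-declared bots not listed above.
GENERIC_BOT_MARKERS = ("bot", "crawler", "spider")


def edge_decision(user_agent: str, path: str) -> str:
    """Return 'allow' or 'block' for a request, before it reaches the origin."""
    ua = user_agent.lower()

    # Training bots: limited to public documentation only.
    if any(name.lower() in ua for name in TRAINING_AGENTS):
        return "allow" if path.startswith(PUBLIC_DOCS_PREFIX) else "block"

    # Action-capable agents: may reach transactional endpoints.
    if any(name.lower() in ua for name in ACTION_AGENTS):
        return "allow"

    # Any other self-declared bot is kept away from transactional endpoints.
    if any(marker in ua for marker in GENERIC_BOT_MARKERS):
        return "block" if path.startswith(TRANSACTIONAL_PREFIX) else "allow"

    # Everything else (including browsers) is left to the normal rule set.
    return "allow"


print(edge_decision("Mozilla/5.0 (compatible; GPTBot/1.0)", "/api/checkout"))
print(edge_decision("Mozilla/5.0 (compatible; GPTBot/1.0)", "/docs/intro"))
print(edge_decision("ChatGPT-User/1.0", "/api/checkout"))
```

Since the User-Agent header is trivially spoofed, a production rule would normally pair this check with a second signal, such as verifying the source IP against ranges published by the bot operator.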

3. The Shift from Retrieval to Resource Management

In the past, we focused on making content “findable.” Today, as discussed in our research on Sentiment Drift Analysis, we must monitor how AI models represent our brand. However, from an infrastructure perspective, the goal is Crawl Efficiency.

If a bot crawls 10,000 pages but only uses 10 to form an answer, you have wasted 9,990 requests’ worth of bandwidth. By utilizing high-fidelity schema and server-side rendering (SSR), you can guide bots to the “highest density” information first, reducing the total request count per session.
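The arithmetic in the example above can be written as a crawl-efficiency ratio. The function names here are illustrative, and in practice the “pages used” figure would have to come from citation or referral data, which is an assumption about what you can measure.

```python
def crawl_efficiency(pages_crawled: int, pages_used: int) -> float:
    """Fraction of crawled pages that actually contributed to an answer."""
    if pages_crawled <= 0:
        raise ValueError("pages_crawled must be positive")
    if not 0 <= pages_used <= pages_crawled:
        raise ValueError("pages_used must be between 0 and pages_crawled")
    return pages_used / pages_crawled


def wasted_requests(pages_crawled: int, pages_used: int) -> int:
    """Requests that consumed bandwidth without contributing to an answer."""
    return pages_crawled - pages_used


# The figures from the example above: 10,000 pages crawled, 10 used.
efficiency = crawl_efficiency(10_000, 10)
wasted = wasted_requests(10_000, 10)
print(f"efficiency: {efficiency:.2%}, wasted requests: {wasted}")
```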

4. Measuring the ROI of a Crawler

Infrastructure Leads should start viewing AI crawlers through the lens of a Cost-to-Benefit Ratio.

- Cost: (Total Requests per Month * Average Response Size in GB * Egress Fee per GB) + (Cost of Origin CPU Load during spikes).

- Benefit: (Referral Traffic from AI Search) + (Attributed Conversions from Agents).

If a bot like GPTBot has a high cost and zero benefit, it is an engineering imperative to block it. Conversely, if an agent is hitting your Action Schema endpoints to execute a purchase, that crawler budget is an investment.
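One way to make this ratio concrete is sketched below. It assumes egress is billed per GB, so request counts are converted to volume through an average response size, and that referral visits and conversions can be given a monetary value. Every field name and every number is hypothetical and only there to show the shape of the calculation.

```python
from dataclasses import dataclass


@dataclass
class CrawlerMonth:
    """One month of activity for a single crawler (all values hypothetical)."""
    requests: int
    avg_response_bytes: int
    egress_fee_per_gb: float
    origin_cpu_cost: float      # estimated cost of CPU load during spikes
    referral_visits: int
    value_per_visit: float
    conversion_value: float     # revenue attributed to agent-driven conversions

    def cost(self) -> float:
        gigabytes = self.requests * self.avg_response_bytes / 1e9
        return gigabytes * self.egress_fee_per_gb + self.origin_cpu_cost

    def benefit(self) -> float:
        return self.referral_visits * self.value_per_visit + self.conversion_value


def verdict(month: CrawlerMonth) -> str:
    """Suggest 'keep' when benefit covers cost, otherwise 'block'."""
    return "keep" if month.benefit() >= month.cost() else "block"


# Hypothetical figures for a training bot and a search agent.
trainer = CrawlerMonth(2_000_000, 50_000, 0.09, 25.0, 0, 0.0, 0.0)
search_agent = CrawlerMonth(100_000, 50_000, 0.09, 2.0, 300, 0.5, 120.0)

for name, month in (("trainer", trainer), ("search_agent", search_agent)):
    print(f"{name}: cost={month.cost():.2f} "
          f"benefit={month.benefit():.2f} -> {verdict(month)}")
```

A simple threshold like this ignores harder-to-price effects such as brand visibility in AI answers, so it is better read as a starting point for the conversation than as a final rule.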

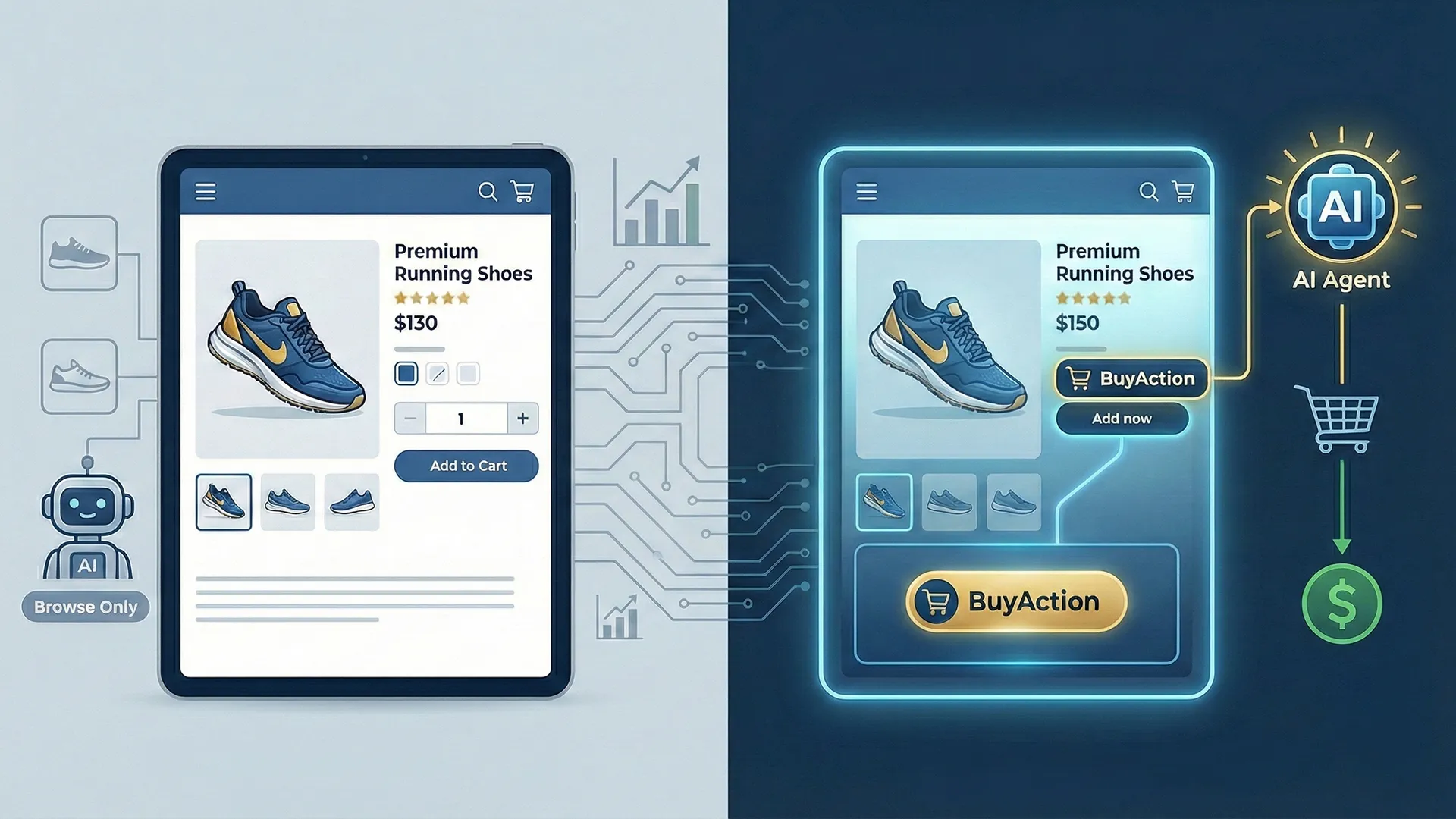

5. Future-Proofing: The Machine-Actionable Web

As we move deeper into 2026, the line between a “website” and a “data endpoint” will continue to blur. Your infrastructure must be ready to support the “Machine Customer.” This means:

- Strict Rate Limiting: Differentiating between human-speed browsing and machine-speed ingestion.

- Auth-Gate Training: Moving your high-value proprietary data behind authenticated layers where LLMs must pay for access.

- Semantic Caching: Using AI to predict which pages a bot will need next based on current “hot topics” in the AI ecosystem, allowing you to pre-cache responses at the Edge.
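The rate-limiting item in the list above is commonly implemented with a token bucket, which allows short bursts while capping the sustained request rate. The sketch below is one minimal version, not a production limiter; the `TokenBucket` class, the per-class limits, and the injectable clock are illustrative assumptions.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock            # injectable so the logic can be tested
        self.tokens = capacity        # start with a full bucket
        self.last = clock()

    def allow(self) -> bool:
        """Consume one token if available; return whether the request passes."""
        now = self.clock()
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical limits: humans get generous bursts, machines a tighter cap.
LIMITS = {
    "human": {"rate": 5.0, "capacity": 20.0},
    "machine": {"rate": 1.0, "capacity": 2.0},
}

buckets = {label: TokenBucket(**limits) for label, limits in LIMITS.items()}

# Three back-to-back machine requests: the burst capacity here is two.
results = [buckets["machine"].allow() for _ in range(3)]
print(results)
```

In a real deployment you would keep one bucket per client identity (for example per verified agent or per IP) rather than one per class, and store the state somewhere shared across edge nodes.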

Case Study: How Cubitrek Leverages GEO and Crawler Optimization for E-Commerce Growth

The Challenge

The client faced surging server costs from aggressive AI training bots and the need to capture visibility in the evolving AI-search landscape (GEO). Key baseline metrics included a 1.62% Site CTR and an average position of 10.87.

The Solution

- AI Crawler Management: Restructured robots.txt to block resource-draining training bots (e.g., GPTBot, CCBot) while prioritizing “Good Agents” to protect server P99 latency.

- GEO & SEO Integration: Optimized technical metadata and Action Schemas for LLM visibility, combined with localized blog content for the Norwegian market.

- Multi-Channel Social: Deployed high-engagement video and photo campaigns across Facebook, Instagram, and TikTok.

The Results

- Visibility Spike: 37.3K impressions and 4,192 total views (up 44.4%).

- User Growth: 1,704 sessions (+17.4%) and 1,078 new users (+11.6%).

- Engagement: Achieved a 54.28% engagement rate.

- Shopping Performance: Successfully listed 245 approved products in Google Merchant Center, driving 277 total clicks.

- Global Reach: Top traffic originated from Norway, followed by Pakistan and the United States.

Conclusion

The era of the “Open Web” being a free buffet for AI training is over. For the Infrastructure Lead, managing the AI crawler budget is about more than just SEO; it’s about server resilience and financial predictability.

By auditing your logs, surgically configuring your robots.txt, and enforcing these rules at the Edge, you ensure that your resources are spent serving customers and high-value agents, not just cooling the data centres of AI giants.