Information Gain Score: Mathematically Auditing Content Redundancy

The era of “10x Content” is effectively over. The new algorithmic imperative is Information Gain.

For years, the standard content strategy was straightforward: scrape the top 10 search results, aggregate their headings, refine the prose, and enhance the graphics. In the age of LLMs and semantic search, this strategy is now a liability.

Google’s ranking systems and modern Answer Engines (like SearchGPT or Perplexity) do not value “better” versions of the same information. They value novelty. If your content vector aligns too closely with the existing consensus, you are mathematically classified as “redundant.”

This article breaks down the engineering reality behind Google’s “Information Gain” patent concepts and provides a mathematical framework for auditing your content inventory.

The Engineering Reality: From Keywords to Vector Space

To understand why your “comprehensive guide” isn’t ranking, you must understand how modern search engines parse relevance. They no longer just match keywords; they map content into a high-dimensional vector space.

Every piece of content is converted into a vector embedding: a numerical representation of its semantic meaning.

This is also why mitigating hallucination with structured content has become an engineering requirement. Without explicit structure, LLMs interpolate meaning from noisy embeddings, increasing the probability of misinterpretation and synthetic inaccuracies.
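To make “vector embedding” concrete, here is a deliberately simplified sketch. Real search engines use learned neural embeddings with hundreds of dimensions; the `embed` function below is a hypothetical stand-in that counts vocabulary terms and normalises the result to unit length, which is enough to illustrate the geometry discussed in this article.

```python
import math
import re
from collections import Counter


def embed(text: str, vocabulary: list[str]) -> list[float]:
    """Toy stand-in for a learned embedding (hypothetical): an
    L2-normalised term-frequency vector over a fixed vocabulary."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    vec = [float(counts[term]) for term in vocabulary]
    norm = math.sqrt(sum(x * x for x in vec))
    # Unit length lets us compare direction (meaning), not document size.
    return [x / norm for x in vec] if norm else vec


vocab = ["churn", "benchmark", "saas", "retention", "survey"]
print(embed("SaaS churn benchmark: how churn is measured", vocab))
```

Unlike a learned embedding, this toy has no notion of synonyms or word forms (“benchmark” and “benchmarks” are different tokens), which is exactly the gap neural embeddings close.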

- The Consensus Cluster: For any given query (e.g., “SaaS churn benchmarks”), the current top-ranking pages tend to cluster together in vector space. They cover the same definitions, cite the same three studies, and offer the same best practices.

- The Centroid: We can calculate the “centroid” (the geometric centre) of this top-ranking cluster. This represents the “average knowledge” currently available on the topic.
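The centroid is simply the component-wise mean of the cluster’s embeddings. The sketch below uses three hypothetical 3-dimensional vectors to stand in for top-ranking pages; real embeddings have far more dimensions, but the arithmetic is the same.

```python
def centroid(vectors: list[list[float]]) -> list[float]:
    """Component-wise mean of equal-length vectors: the 'average
    knowledge' point of a set of documents."""
    if not vectors:
        raise ValueError("need at least one vector")
    dim = len(vectors[0])
    if any(len(v) != dim for v in vectors):
        raise ValueError("all vectors must have the same length")
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


# Hypothetical 3-dimensional embeddings for three top-ranking pages.
serp_vectors = [
    [0.9, 0.1, 0.0],
    [0.8, 0.2, 0.0],
    [1.0, 0.0, 0.0],
]
print(centroid(serp_vectors))  # approximately [0.9, 0.1, 0.0]
```

Some pipelines re-normalise the centroid to unit length; that does not change the cosine-similarity comparisons that follow, because cosine similarity ignores vector length.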

The Algorithm’s View

When you publish a new article that effectively rewrites the top 10 results, your content’s vector embedding lands almost exactly on top of that centroid.

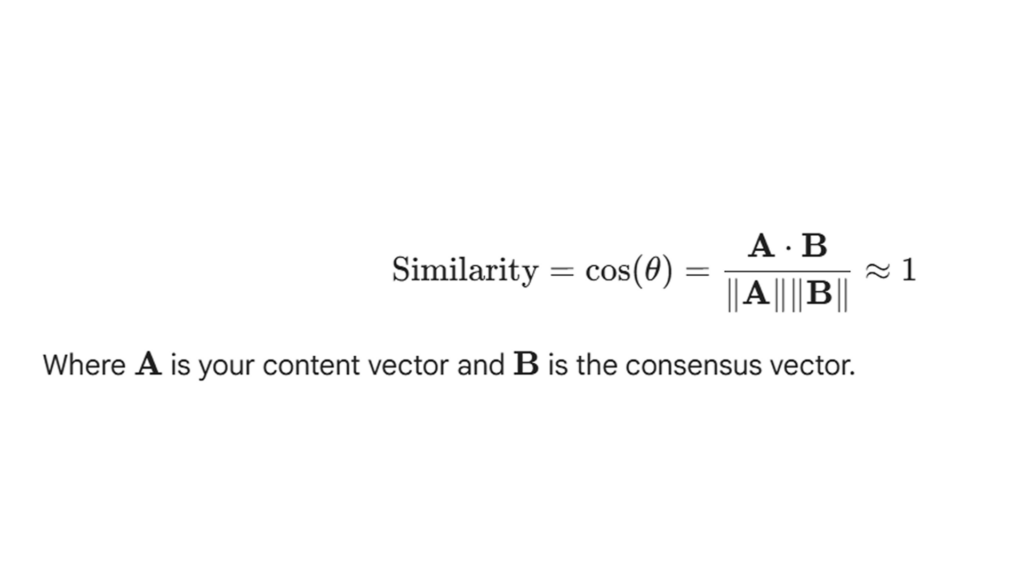

From an engineering standpoint, your Cosine Similarity to the existing results is nearly 1.0 (or 100%).
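Cosine similarity compares the direction of two vectors while ignoring their length: it is their dot product divided by the product of their norms. A minimal pure-Python sketch, using hypothetical vectors for the SERP centroid and two drafts:

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """cos(theta) = (a . b) / (|a| |b|); 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an empty vector as sharing nothing
    return dot / (norm_a * norm_b)


consensus = [0.9, 0.1, 0.0]    # hypothetical SERP centroid
rewrite = [0.88, 0.12, 0.01]   # a draft that paraphrases the top results
original = [0.5, 0.1, 0.85]    # a draft built around new survey data
print(f"rewrite:  {cosine_similarity(rewrite, consensus):.3f}")
print(f"original: {cosine_similarity(original, consensus):.3f}")
```

With these made-up numbers the paraphrased draft scores close to 1.0 (about 0.9996), while the research-led draft scores roughly 0.51.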

Here is the harsh reality: if your content has high cosine similarity to the consensus, it adds almost no new information to the system. It is redundant. In a retrieval-augmented generation (RAG) environment, the AI can simply prune your document, because it offers nothing the other retrieved sources do not already provide.
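How any specific answer engine assembles its context window is not public. One common, simple technique for keeping retrieved context diverse is to drop candidates that are near-duplicates of something already selected (maximal marginal relevance is a more refined variant). The sketch below assumes a hypothetical similarity threshold of 0.95 and hypothetical document vectors:

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def dedupe_for_context(
    ranked: list[tuple[str, list[float]]], threshold: float = 0.95
) -> list[str]:
    """Greedy near-duplicate filter: walk retrieved documents in rank order
    and keep one only if it is not too similar to any document already kept."""
    kept: list[tuple[str, list[float]]] = []
    for doc_id, vec in ranked:
        if all(cosine_similarity(vec, kept_vec) < threshold for _, kept_vec in kept):
            kept.append((doc_id, vec))
    return [doc_id for doc_id, _ in kept]


retrieved = [
    ("competitor-a", [0.9, 0.1, 0.0]),
    ("your-rewrite", [0.88, 0.12, 0.01]),  # near-copy of competitor-a
    ("survey-post", [0.5, 0.1, 0.85]),     # adds new data
]
print(dedupe_for_context(retrieved))  # ['competitor-a', 'survey-post']
```

Note that the rewrite is dropped not because it is wrong, but because a higher-ranked document already occupies the same direction in vector space.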

Decoding the "Information Gain" Patent

Google’s research into “Information Gain” (referenced in patents such as US20200349169A1 concerning contextualising content) explicitly targets this redundancy.

The goal of the scoring system is to determine whether a user has already consumed Document A and, if so, how much new knowledge they gain by reading Document B.

If your content effectively mirrors the semantic footprint of the existing search engine results page (SERP), your Information Gain Score is negligible.
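The patent’s actual scoring method is not reproduced here. As a rough proxy for the Document A / Document B framing, assume each document has been reduced to a set of discrete facts or claims (the fact IDs below are hypothetical placeholders). The gain from the candidate document is then the share of its facts that the reader has not already met:

```python
def incremental_gain(already_read: list[set[str]], candidate: set[str]) -> float:
    """Share of the candidate's facts the reader has not seen yet.
    0.0 = fully redundant, 1.0 = entirely new."""
    if not candidate:
        return 0.0
    seen: set[str] = set().union(*already_read)
    return len(candidate - seen) / len(candidate)


doc_a = {"churn-definition", "report-x-benchmark", "tip-onboarding"}
doc_b = doc_a | {"tip-annual-billing"}  # mostly a repeat of A
doc_c = {"churn-definition", "own-survey-finding", "failure-diagnosis"}

print(incremental_gain([doc_a], doc_b))  # 0.25
print(incremental_gain([doc_a], doc_c))  # about 0.67
```

Passing a list of already-read documents (rather than a single one) mirrors the idea that gain is measured against everything the user has already consumed.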

The Equation of Redundancy

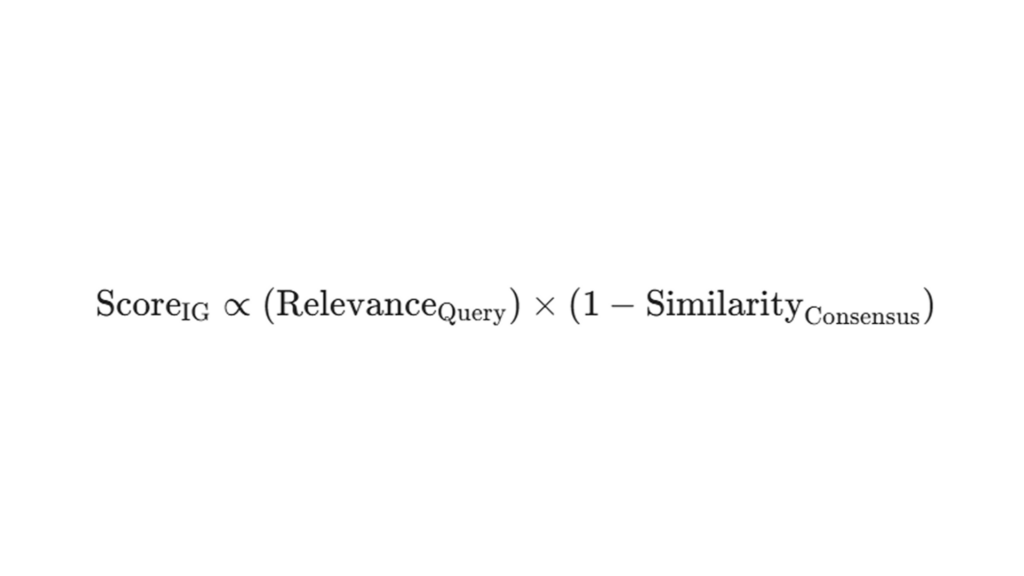

We can conceptualise the “Information Gain Penalty” as follows:

- Relevance: How well you answer the prompt (Standard SEO).

- Similarity: How closely you mimic the competition.

Most content teams maximise relevance but overlook similarity. If your Similarity is 0.99, your Information Gain Score approaches zero. You are effectively invisible to the ranking algorithm because you are offering a “duplicate vector.”
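The exact equation from the figure is not reproduced in the text. One illustrative way to write the relationship described above, assuming relevance and similarity are both scored between 0 and 1 and combine multiplicatively, is:

$$
\text{IG}(d) \approx \text{Relevance}(d, q) \times \bigl(1 - \text{Similarity}(d, C)\bigr)
$$

where $d$ is your document, $q$ the query, and $C$ the centroid of the top-ranking results. With a relevance of 0.9, a similarity of 0.99 gives $0.9 \times 0.01 = 0.009$, while a similarity of 0.60 gives $0.9 \times 0.40 = 0.36$: under this assumption, the same relevance is worth forty times more when the document is less redundant.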

The Audit: Are You Publishing Noise?

To audit your content strategy, you need to stop asking “Is this well-written?” and start asking “Does this change the vector?”

A true Information Gain Audit evaluates your planned content along three vectors:

- The Entity Vector

Does your content introduce new named entities (people, proprietary tools, specific locations, new frameworks) that do not appear in the top 10 results?

- Redundant: Mentioning “HubSpot” in a CRM article.

- Gain: Introducing a new proprietary metric like “Customer Velocity Rate.”

- The Data Vector

Are you citing the same stats as everyone else?

- Redundant: Citing the 2021 McKinsey report everyone else links to.

- Gain: Publishing exclusive N=500 survey data that contradicts the McKinsey report.

- The Perspective Vector

Is the sentiment and structural logic identical?

- Redundant: “5 Ways to Improve Retention” (Listicle format, positive sentiment).

- Gain: “Why Retention Strategies Fail” (Diagnostic format, critical/contrarian sentiment).

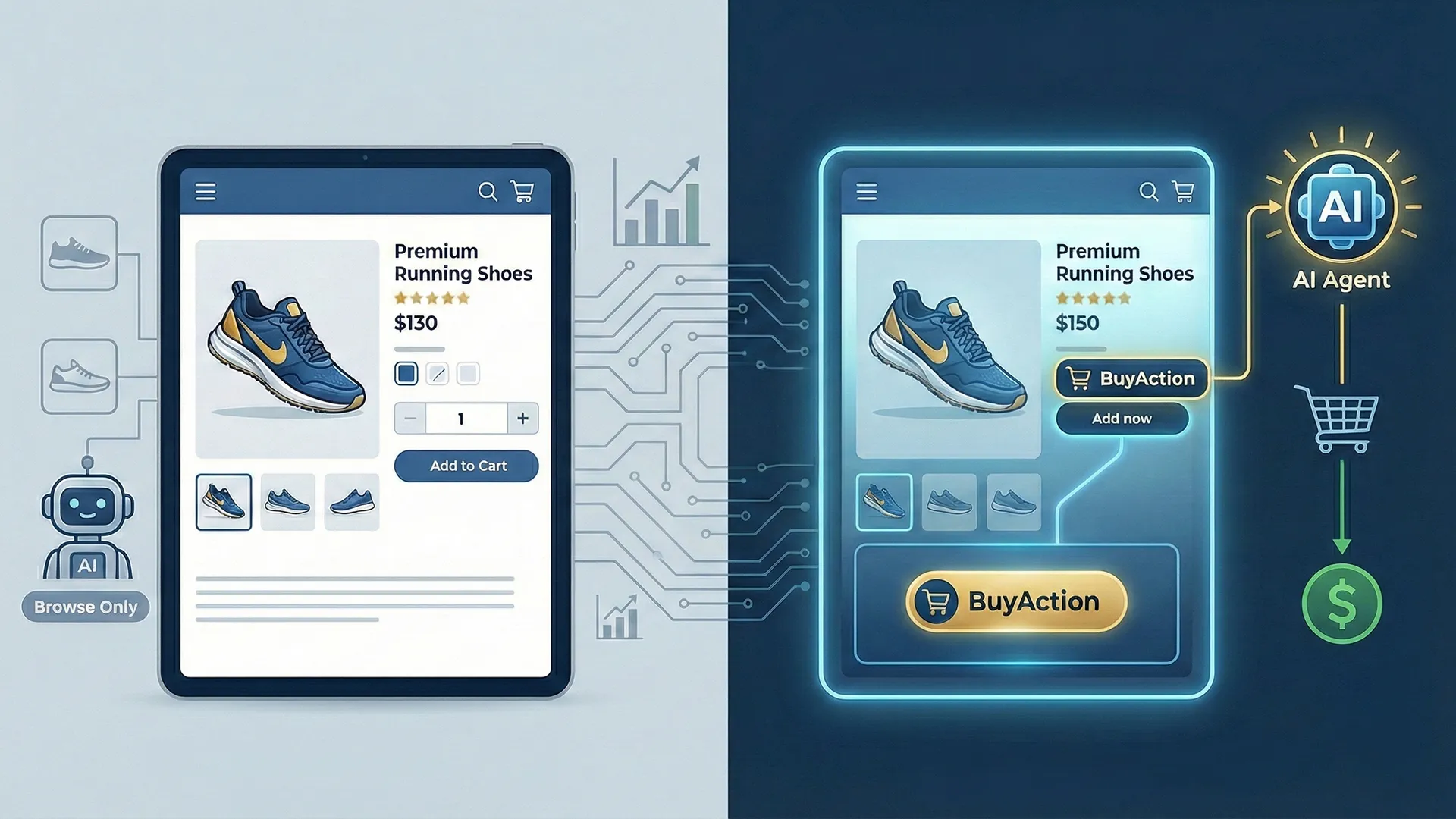

In the AI era, this is directly tied to measuring brand presence with share of model (SOM), because visibility is no longer about rankings alone, but about how often your brand appears inside model-generated answers.
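The three-vector audit can be partly mechanised once each page’s entities, data sources, and format are extracted (by hand or with an entity-extraction tool). The sketch below assumes a hypothetical `ContentProfile` schema and labels; it checks the entity and data vectors with set differences and treats the perspective vector as a simple (structure, sentiment) pair, which is a crude stand-in for editorial judgement.

```python
from dataclasses import dataclass, field


@dataclass
class ContentProfile:
    """Extracted features of one page (hypothetical schema)."""
    entities: set[str] = field(default_factory=set)
    data_sources: set[str] = field(default_factory=set)
    structure: str = ""  # e.g. "listicle", "diagnostic"
    sentiment: str = ""  # e.g. "positive", "contrarian"


def audit(draft: ContentProfile, serp: list[ContentProfile]) -> dict:
    """Report what the draft adds on each of the three vectors."""
    seen_entities = set().union(*(p.entities for p in serp))
    seen_sources = set().union(*(p.data_sources for p in serp))
    seen_perspectives = {(p.structure, p.sentiment) for p in serp}
    return {
        "new_entities": sorted(draft.entities - seen_entities),
        "new_data_sources": sorted(draft.data_sources - seen_sources),
        "new_perspective": (draft.structure, draft.sentiment) not in seen_perspectives,
    }


serp = [
    ContentProfile({"HubSpot", "Salesforce"}, {"mckinsey-2021"}, "listicle", "positive"),
    ContentProfile({"HubSpot"}, {"mckinsey-2021"}, "listicle", "positive"),
]
draft = ContentProfile(
    {"HubSpot", "Customer Velocity Rate"}, {"own-survey-n500"}, "diagnostic", "contrarian"
)
print(audit(draft, serp))
```

An empty result on all three keys is the signal that a draft is a candidate “duplicate vector” before any embedding is computed.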

The Strategic Pivot: Budgeting for Vector Injection

This is where the Content Strategist must pivot the conversation with finance and leadership.

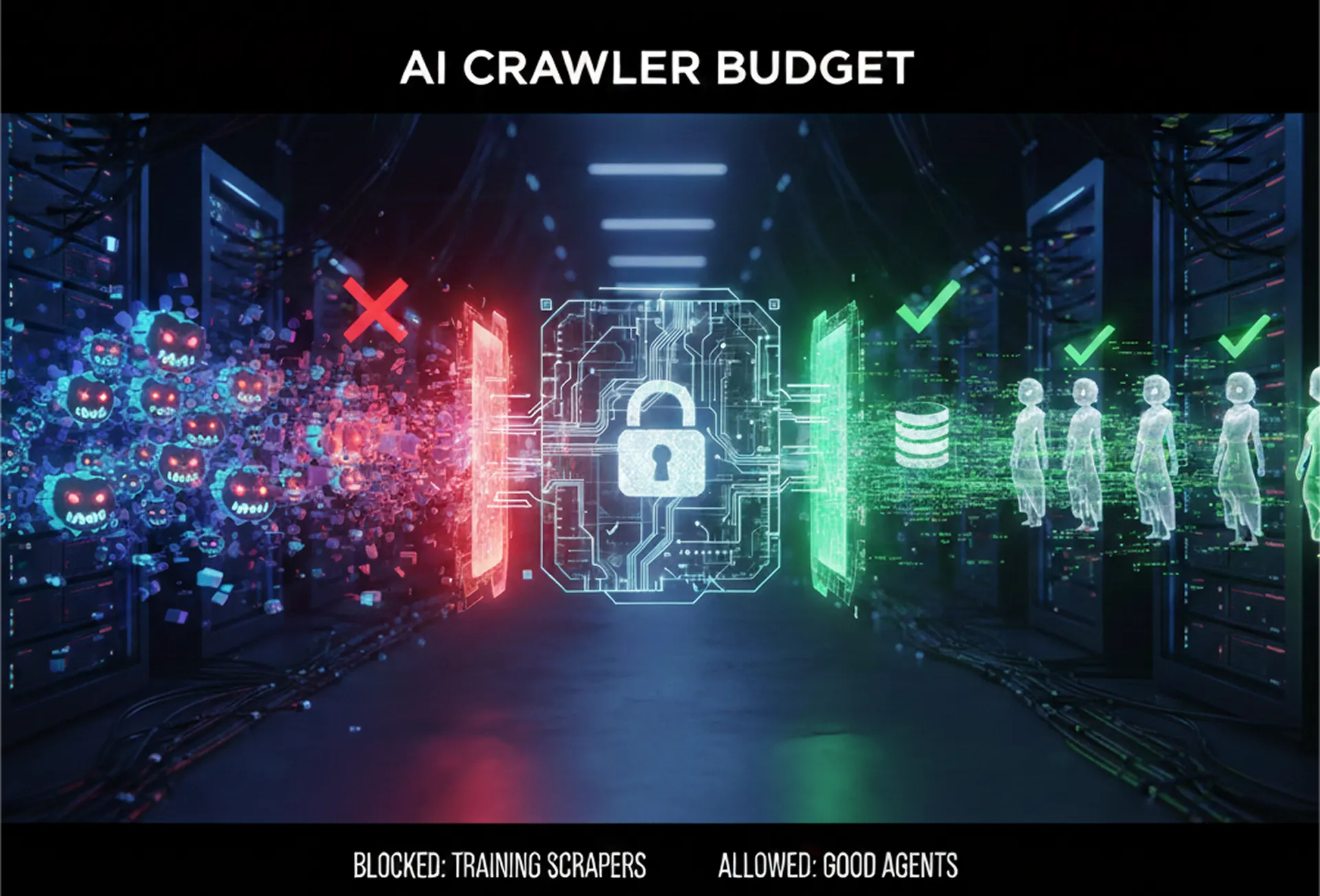

Cheap content (AI-generated or low-cost freelance) is a “mean reversion” machine. LLMs are trained to predict the most likely next token, so unless they are given new inputs they tend to reproduce the consensus phrasing and claims that dominate their training data. If you use AI to write your core content without heavy modification, you are mechanically generating a vector that sits almost exactly on the centroid. You are paying for mediocrity.

The Business Case for Original Research

To achieve a high Information Gain Score, you must force the vector to move orthogonal to the consensus. The only reliable way to do this is Original Research.

When you commission a survey, interview subject matter experts (SMEs), or release proprietary internal data, you are essentially buying a new data vector.

- Old Pitch: “We need $5,000 for a whitepaper because it establishes thought leadership.”

- New Pitch: “We need $5,000 for original research because currently, our content is mathematically indistinguishable from our competitors. The search algorithms are pruning our pages because they have a high semantic overlap with existing results. This budget allows us to inject novel data points, lowering our cosine similarity score and triggering the Information Gain boost.”
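“Moving orthogonal to the consensus” can be quantified by splitting a draft’s vector into the part that points along the consensus direction and the residual at right angles to it. The sketch below, using hypothetical vectors, reports the residual’s share of the draft’s length (the sine of the angle between them); a higher share means more of the draft lies outside the consensus.

```python
import math


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def orthogonal_share(draft: list[float], consensus: list[float]) -> float:
    """Fraction of the draft vector's length that lies orthogonal to the
    consensus direction; equals the sine of the angle between them."""
    cc = dot(consensus, consensus)
    if cc == 0 or dot(draft, draft) == 0:
        raise ValueError("vectors must be non-zero")
    scale = dot(draft, consensus) / cc
    # Remove the component that points along the consensus direction.
    residual = [d - scale * c for d, c in zip(draft, consensus)]
    return math.sqrt(dot(residual, residual)) / math.sqrt(dot(draft, draft))


consensus = [1.0, 0.0, 0.0]  # hypothetical SERP centroid direction
rewrite = [0.99, 0.14, 0.0]  # paraphrase of the top results
research = [0.6, 0.1, 0.79]  # same topic plus proprietary data
print(round(orthogonal_share(rewrite, consensus), 2))   # 0.14
print(round(orthogonal_share(research, consensus), 2))  # 0.8
```

Because the share equals the square root of one minus the squared cosine similarity, lowering cosine similarity and increasing the orthogonal component are two views of the same change.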

Case Study: How We Helped a Real Estate Portal Fill Knowledge Gaps with Unique Content

In the UAE real estate market, more content doesn’t always mean better visibility. A leading real estate portal faced knowledge gaps: users often had questions that listings alone couldn’t answer. To solve this, we, as a third-party content partner, published targeted blog posts, each designed to offer unique insights that competitors didn’t provide.

The Problem: Redundant or Missing Information

Many property pages listed features and prices but didn’t answer practical questions about buying, renting, or investing. This caused:

- Low engagement and short session durations

- Confusion among buyers and renters

- Limited authority in search results

Our Strategy: Publishing High-Value Blogs

We focused on adding unique data, actionable insights, and local context:

- Hidden Costs of Buying Property in Dubai – Explained fees, commissions, and utilities to help buyers budget realistically.

- How to Register Your Tenancy Contract (Ejari) – Step-by-step guidance to reduce renter confusion.

- When Can Landlords Increase Rent in Dubai? – Clear rules and practical advice for tenants and landlords.

Additional Insights:

- Including market trends, investment analysis, and survey-based data made the content stand out.

- Structured blog content with FAQs, examples, and step-by-step processes improved readability and engagement.

- Linking these blogs to relevant listings and neighbourhood guides enhanced internal navigation and authority.

The Result: Engagement and Authority

- Users spent more time on pages with practical insights (average session duration increased by 28%).

- Higher-quality leads and more serious inquiries from investors and renters.

- Blogs became a trusted source for both human users and AI-driven search systems, improving search rankings.

Conclusion

In the age of AI, redundancy is the primary failure mode.

If you cannot mathematically prove that your content adds new information to the corpus, you shouldn’t publish it. The “Information Gain Score” is not just a patent metric; it is the new definition of quality. Stop paying for words. Start paying for new vectors.