Evaluation & Testing: The “Proof” Metrics

In the legacy era of digital marketing, “proof” was a soft science. We relied on proxies: rank position, click-through rates, and “dwell time.” But as we move into an era dominated by Large Language Models (LLMs) and Google’s increasingly sophisticated Information Gain patents, these metrics are becoming secondary.

For the modern Content Strategist, the CMO, and the DevOps Engineer, SEO is no longer a creative suggestion—it is a technical deployment. To succeed, we must treat our brand’s digital presence like a software product, subject to rigorous unit testing, adversarial stress, and mathematical auditing. This transition represents a shift from “vibes-based marketing” to Information Engineering.

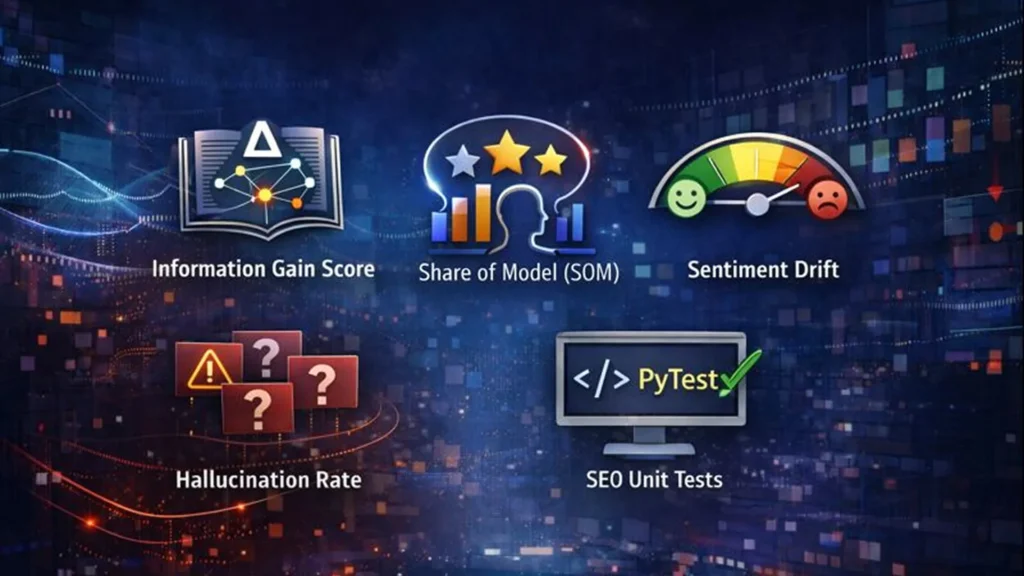

This guide outlines the five “Proof Metrics” required to validate your strategy in an AI-first world.

1. Information Gain Score: Mathematically Auditing Content Redundancy

Google’s patent for Information Gain (US Patent 11,354,342) changed the game for original research. In a world where AI can generate “average” content for free, Google’s primary goal is to identify and reward the “Delta”: the specific piece of information that does not exist in the top 10 search results.

The Mathematical Problem

When a search engine processes a new page, it looks at the Entropy of the information provided. If your article on “The Best CRM Software” contains the same 10 features as the existing top-ranking articles, your Information Gain score is effectively zero. In the eyes of a vector-based index, your content adds no new data vectors; it is redundant noise.

Information Gain (IG) can be simplified as the reduction in uncertainty (H) about a topic (S) after adding a new attribute (A):

IG(S, A) = H(S) - H(S | A)
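To make the formula concrete, here is a minimal worked example in Python. It is a toy sketch, not how any search engine scores pages: the labels and attribute values are made-up illustration data, and the function names are our own.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy H(S), in bits, of a list of labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())


def information_gain(labels, attribute):
    """IG(S, A) = H(S) - H(S | A) for two parallel lists."""
    total = len(labels)
    groups = {}
    for label, value in zip(labels, attribute):
        groups.setdefault(value, []).append(label)
    # H(S | A): entropy of each group, weighted by the group's share of S
    conditional = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - conditional


# Hypothetical toy data: which CRM four readers end up choosing
choice = ["A", "A", "B", "B"]
# An attribute every competitor already covers: identical for all rows
redundant = ["has_email", "has_email", "has_email", "has_email"]
# A new attribute that actually separates the outcomes
novel = ["smb", "smb", "enterprise", "enterprise"]

print(information_gain(choice, redundant))  # no reduction in uncertainty
print(information_gain(choice, novel))      # removes all uncertainty (1 bit)
```

The redundant attribute leaves the uncertainty untouched, while the novel one removes it entirely. That is the intuition behind treating repeated feature lists as zero-gain content.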

The “Proof” Metric: The Vector Delta

To audit for this, content teams must move beyond keyword density and toward mathematically auditing content redundancy. By quantifying the uniqueness of your data, you justify the budget for original research and proprietary surveys.

- The Audit: Scrape the top 5 ranking results for your target topic.

- The Test: Use an embedding model to plot the semantic space of those competitors.

- The Goal: Your content must occupy a coordinate in the vector space that is currently “empty,” representing a unique case study or proprietary dataset.
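The audit steps above can be sketched in a few lines of Python. To keep the example self-contained, `embed` is a toy bag-of-words stand-in for a real embedding model, and the competitor texts are hypothetical; in practice you would swap in vectors from whichever embedding model your team uses.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def vector_delta(draft, competitors):
    """1 minus the highest similarity to any competitor; nearer 1 is more novel."""
    draft_vec = embed(draft)
    return 1.0 - max(cosine(draft_vec, embed(c)) for c in competitors)


# Hypothetical scraped competitor summaries
competitors = [
    "crm with email sync and pipeline reports",
    "crm with pipeline reports and email sync",
]
redundant_draft = "crm with email sync and pipeline reports"
novel_draft = "our survey data on onboarding time for clinics"

print(round(vector_delta(redundant_draft, competitors), 3))
print(round(vector_delta(novel_draft, competitors), 3))
```

A delta near zero means the draft sits on top of an existing competitor in the semantic space; a delta near one suggests it occupies territory none of them cover.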

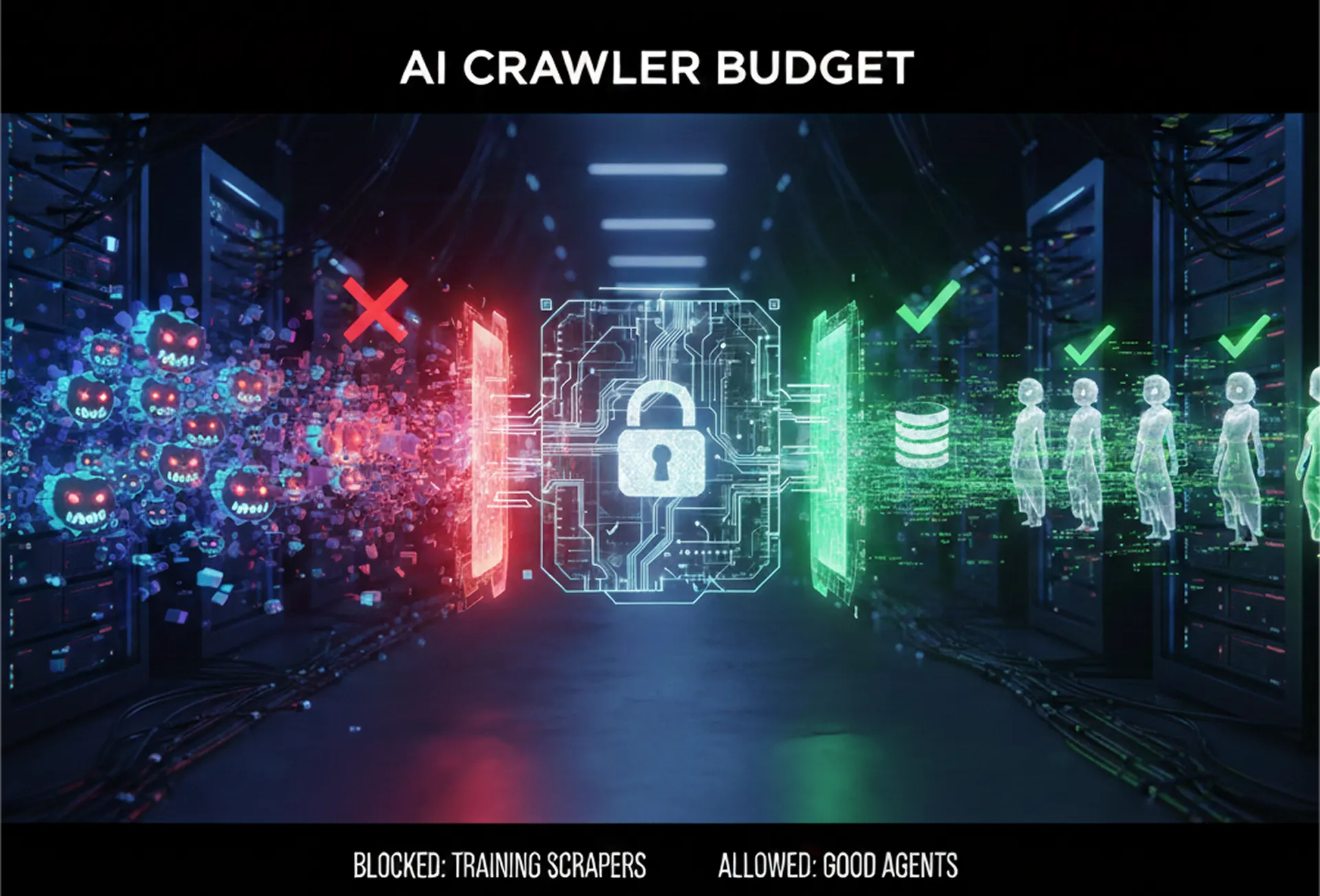

2. Share of Model (SOM): The New Share of Voice

Market share is no longer just about who is bidding on “Cloud Computing.” It is about which brand the LLM retrieves when a user asks, “Who are the most reliable cloud providers for mid-market healthcare?”

Share of Model (SOM) measures the frequency and sentiment of your brand’s mentions within the latent space of models like Gemini, GPT-4o, and Claude. As traditional Search Engine Results Pages (SERPs) are replaced by AI Overviews, SOM becomes the only KPI that matters for brand awareness.

The SOM Methodology

To calculate SOM, we move away from “Impressions” and toward Citation Frequency. We recommend a “50-Prompt Audit” consisting of:

- Direct Retrieval: “Name the top 5 [Industry] solutions.”

- Use-Case Specific: “Which software is best for [Specific Task]?”

- Adversarial Comparison: “Why would someone choose [Competitor] over [Your Brand]?”
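One way to assemble such an audit is to expand the three prompt families from templates. This is a minimal sketch: the brand, industry, task, and competitor names are placeholders, and `build_prompt_audit` is a hypothetical helper of our own.

```python
# The three prompt families from the list above, as fill-in templates
TEMPLATES = {
    "direct_retrieval": "Name the top 5 {industry} solutions.",
    "use_case": "Which software is best for {task}?",
    "adversarial": "Why would someone choose {competitor} over {brand}?",
}


def build_prompt_audit(brand, industry, tasks, competitors):
    """Expand the templates into a list of (category, prompt) pairs."""
    prompts = [
        ("direct_retrieval", TEMPLATES["direct_retrieval"].format(industry=industry))
    ]
    for task in tasks:
        prompts.append(("use_case", TEMPLATES["use_case"].format(task=task)))
    for competitor in competitors:
        prompts.append(
            (
                "adversarial",
                TEMPLATES["adversarial"].format(competitor=competitor, brand=brand),
            )
        )
    return prompts


# Placeholder inputs; a real audit would extend these lists to reach 50 prompts
audit = build_prompt_audit(
    brand="ExampleCloud",
    industry="cloud hosting",
    tasks=["HIPAA-compliant storage", "disaster recovery"],
    competitors=["OtherCloud"],
)
for category, prompt in audit:
    print(category, "|", prompt)
```

Keeping the category alongside each prompt lets you later break SOM down by prompt family rather than reporting a single blended number.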

Calculating SOM

If your brand is mentioned in 15 out of 50 varied prompts within Gemini, your SOM for that specific niche is 30%. By tracking this monthly, a CMO can finally see the “invisible” impact of PR and technical content on the AI’s training data.
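The arithmetic is simple enough to automate. The sketch below assumes you have already collected the model's responses as plain strings; the brand name and response texts are hypothetical, and it covers mention frequency only, not sentiment.

```python
import re


def share_of_model(responses, brand):
    """Fraction of responses that mention the brand as a whole word."""
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    mentions = sum(1 for text in responses if pattern.search(text))
    return mentions / len(responses) if responses else 0.0


# Hypothetical audit: the brand appears in 15 of 50 responses
responses = ["Top picks include ExampleCloud and others."] * 15 + [
    "Top picks include other vendors."
] * 35

som = share_of_model(responses, "ExampleCloud")
print(f"{som:.0%}")  # 30%
```

Matching on word boundaries avoids counting a brand name that merely appears inside a longer word, though brands with common-word names will still need manual review.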

3. Sentiment Drift Analysis: Monitoring Brand Perception in AI Answers

AI models are not static; through fine-tuning and updated RAG layers, their “opinion” of your brand can shift. Sentiment Drift Analysis is the practice of monitoring whether an AI’s description of your brand is moving from “Market Leader” to “Legacy Provider” or, worse, “High Risk.”

Automated Drift Tracking

By using Python-based wrappers around LLM APIs, brands can automate the checking of their “Brand Bio.”

- Input: Weekly prompts asking the AI to summarize the brand’s current market position.

- Analysis: Pass the output through a sentiment classifier (e.g., VADER or a fine-tuned GPT-4o evaluator).

- Correlation: Map the sentiment score against recent press activity or negative social media spikes.

If the sentiment score (here on a 0-to-1 scale) drops from 0.8 (Positive) to 0.2 (Neutral/Negative) over 30 days, the PR team has a quantifiable lead time to address the underlying content issues before the drift becomes a permanent part of the model’s weights.
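The drift check itself can be a small function. This sketch assumes the weekly scores have already been produced by your sentiment classifier and normalized to a 0-to-1 scale; the dates, scores, and the 0.3 alert threshold are illustrative assumptions, not recommended values.

```python
from datetime import date


def detect_drift(scores, window_days=30, max_drop=0.3):
    """Compare the latest score with the earliest score inside the window.

    scores: list of (date, score) pairs sorted by date, scores in [0, 1].
    Returns (drop, alert), where alert is True when drop >= max_drop.
    """
    latest_day, latest_score = scores[-1]
    in_window = [(d, s) for d, s in scores if (latest_day - d).days <= window_days]
    baseline = in_window[0][1]
    drop = baseline - latest_score
    return drop, drop >= max_drop


# Hypothetical weekly "Brand Bio" sentiment scores
weekly = [
    (date(2024, 1, 1), 0.8),
    (date(2024, 1, 8), 0.7),
    (date(2024, 1, 15), 0.5),
    (date(2024, 1, 22), 0.3),
    (date(2024, 1, 29), 0.2),
]

drop, alert = detect_drift(weekly)
print(f"drop={drop:.2f} alert={alert}")
```

Comparing against the start of a rolling window, rather than last week's value, catches slow declines that never look alarming week over week.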

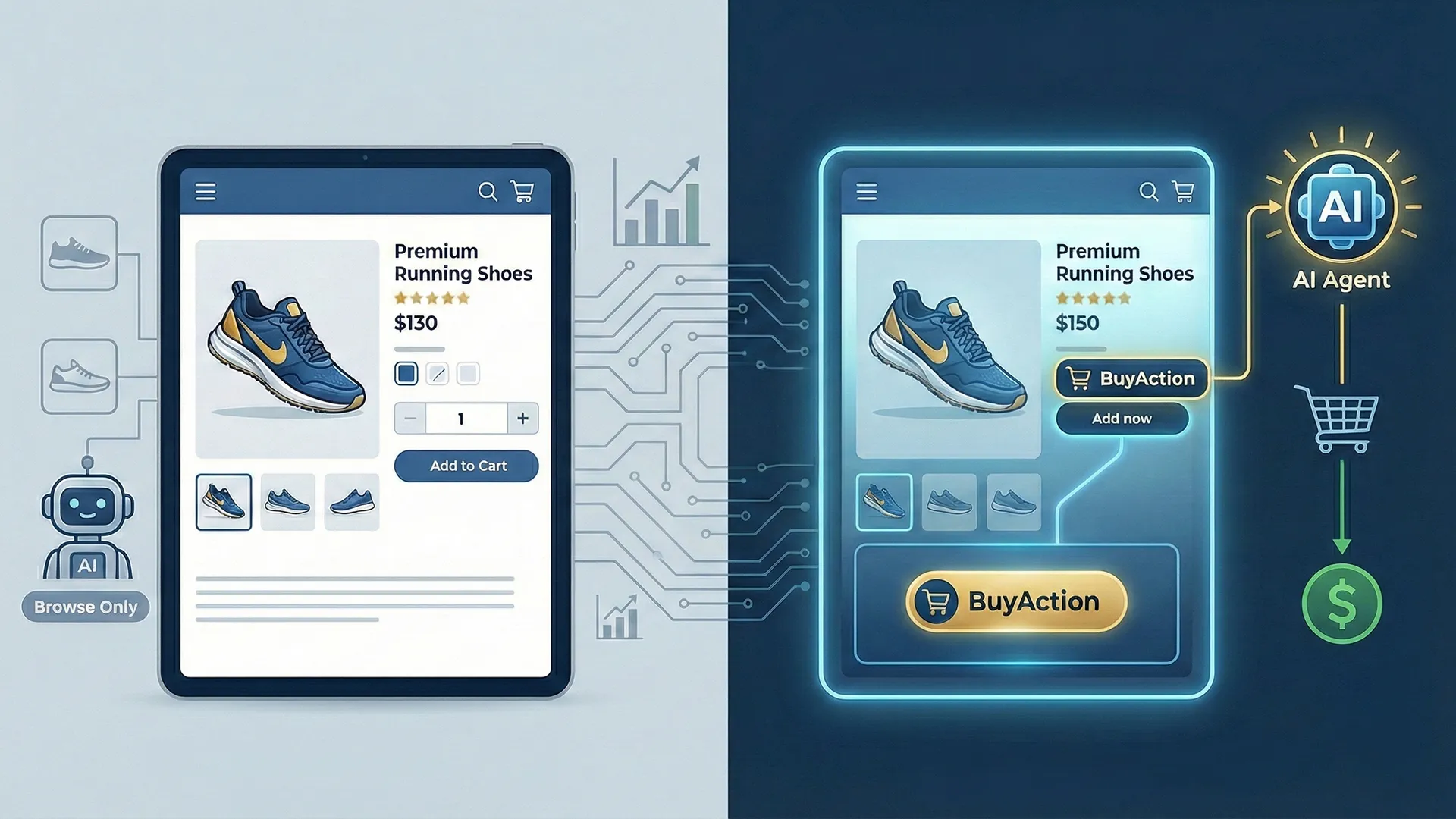

4. Unit Testing for SEO: Using PyTest to Validate Schema Integrity

For years, SEO was treated as an afterthought, something fixed after a site update broke it. In a professional “Proof” environment, we treat Structured Data (Schema) as mission-critical code. If your Schema breaks, the AI’s ability to parse your entities (prices, reviews, leadership) fails immediately.

The Python Workflow: Shifting SEO Left

Instead of manually checking the Google Rich Results Test, developers can build unit tests for SEO directly into the CI/CD pipeline, using PyTest to validate JSON-LD against a “Golden File.”

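```python
# Example snippet: validating Organization schema via PyTest
import json
import re

import requests

# Matches the body of a <script type="application/ld+json"> tag
JSON_LD_RE = re.compile(
    r'<script[^>]*type=["\']application/ld\+json["\'][^>]*>(.*?)</script>',
    re.DOTALL | re.IGNORECASE,
)


def parse_json_ld(html):
    # Minimal illustrative helper; swap in a real HTML parser for production pages
    match = JSON_LD_RE.search(html)
    assert match, "No JSON-LD block found in page."
    return json.loads(match.group(1))


def test_schema_entity_exists():
    url = "https://staging.cubitrek.com"
    response = requests.get(url, timeout=10)
    schema = parse_json_ld(response.text)

    # The build fails if essential data is missing, preventing SEO regression
    assert schema["@type"] == "Organization", "Schema type must be Organization"
    assert "name" in schema, "Brand Name missing from Schema."
    assert "logo" in schema, "Brand Logo missing from Schema."
```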

By treating SEO like software deployment, the DevOps team ensures that no code is shipped that “blinds” the search engine or AI agents to your brand’s metadata.
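The “Golden File” comparison can be sketched as follows. This is one possible shape, not a standard API: `diff_against_golden` is a hypothetical helper, and the golden schema is inlined here for self-containment, whereas a real pipeline would more likely load it from a JSON file checked into the repository.

```python
import json


def diff_against_golden(schema, golden, required_keys):
    """Return a list of problems; an empty list means the schema passes."""
    problems = []
    for key in required_keys:
        if key not in schema:
            problems.append(f"missing key: {key}")
        elif schema[key] != golden.get(key):
            problems.append(
                f"changed value for {key}: {schema[key]!r} != {golden.get(key)!r}"
            )
    return problems


# Hypothetical golden file contents and a live schema that lost its logo
golden = json.loads(
    '{"@type": "Organization", "name": "Example Co",'
    ' "logo": "https://example.com/logo.png"}'
)
live = {"@type": "Organization", "name": "Example Co"}
REQUIRED = ["@type", "name", "logo"]


def test_schema_matches_golden():
    # In CI, `live` would come from the staging page rather than a literal
    assert diff_against_golden(golden, golden, REQUIRED) == []


print(diff_against_golden(live, golden, REQUIRED))
```

Returning a list of problems instead of failing on the first one gives the developer the full picture in a single CI run.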

5. The Hallucination Rate: Stress-Testing Your Brand with Adversarial Prompts

The final proof of a robust brand strategy is its resilience against “Hallucinations.” If an AI is asked about your product’s pricing and it makes up a number, your content strategy has a Knowledge Gap. This is a “security-first” mindset applied to marketing.

Red Teaming Your Content

“Red Teaming” is the act of intentionally trying to break the AI’s understanding of your brand. To measure the hallucination rate, run adversarial prompts to find weaknesses:

- “Is it true that [Brand] is being acquired by [Competitor]?”

- “Provide a list of known vulnerabilities for [Product].”

The Metric: Hallucination Frequency

If the AI cannot find a definitive, authoritative source on your site to answer a difficult or misleading question, it is likely to “hallucinate.”

- The Proof: A “Hallucination Rate” of <5% across 100 adversarial prompts indicates a high-density, authoritative content moat.

- The Action: Every hallucination discovered is a direct instruction for the content team to create a new, authoritative “Source of Truth” page.
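Once each adversarial response has been reviewed, the metric and the resulting to-do list fall out of a few lines of Python. This sketch assumes a reviewer (human or automated) has already set a `hallucinated` flag per prompt; the result records below are placeholder data.

```python
def hallucination_rate(results):
    """Share of reviewed prompts where the model's answer was flagged as invented."""
    if not results:
        return 0.0
    return sum(1 for r in results if r["hallucinated"]) / len(results)


def gaps_to_fix(results):
    """Prompts that exposed a knowledge gap, i.e. candidates for new source pages."""
    return [r["prompt"] for r in results if r["hallucinated"]]


# Placeholder review data: 4 of 100 adversarial prompts were flagged
results = [
    {"prompt": f"adversarial prompt {i}", "hallucinated": i < 4} for i in range(100)
]

rate = hallucination_rate(results)
passes = rate < 0.05  # the <5% target from the text
print(f"rate={rate:.0%} passes={passes} gaps={len(gaps_to_fix(results))}")
```

The list returned by `gaps_to_fix` doubles as the content backlog: each entry points at a question your site does not yet answer authoritatively.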

Conclusion:

The transition from “Digital Marketing” to “Information Engineering” is non-negotiable. By implementing these five metrics (Information Gain, SOM, Sentiment Drift, Schema Unit Testing, and Hallucination Red Teaming), organisations can finally move past “guessing” whether their content works.