Entity Salience Scoring: Auditing Content NLP Confidence

In the traditional content workflow, “quality” is a subjective metric. It relies on editorial intuition, readability scores (like Flesch-Kincaid), and brand alignment. But to a Large Language Model (LLM) or a search engine’s indexing algorithm, “quality” is a mathematical vector.

When an AI analyzes your page, it doesn’t “read” it; it parses it for entities and assigns them a Salience Score.

This is the blind spot in modern SEO. You might have the target keyword in your H1, your URL, and sprinkled throughout the text five times. But if the underlying NLP confidence score (the Salience) is low, the AI views your target topic as a footnote, not the main character.

This article introduces Entity Salience Auditing: a quantitative method to measure whether a machine is as confident in your content’s topic as you are.

The Metric: What is Salience?

In Google’s Cloud Natural Language API, Salience is a score ranging from 0.0 to 1.0. It indicates the importance or centrality of an entity within the entire document text.

- Frequency measures how often a word appears.

- Salience measures how central that word is to the document’s meaning.

The distinction is critical. You can mention “Cloud Computing” ten times in a blog post about “Modern Office Furniture” (e.g., “our smart desks support cloud computing workflows”). The frequency is high, but the salience will be near 0.0 because the semantic weight of the document leans towards furniture.

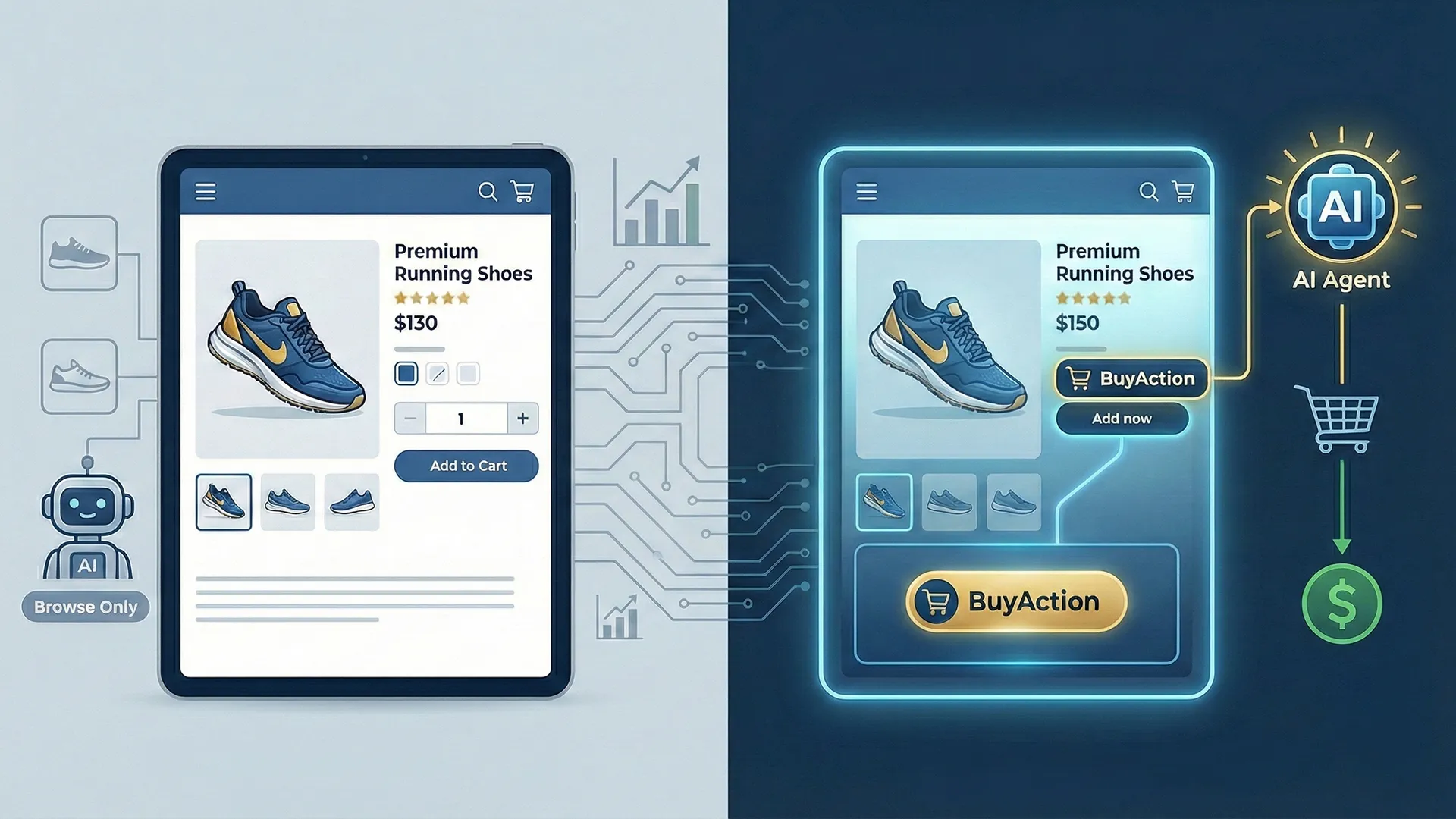

If your page targets “Enterprise ERP,” but the NLP Salience score for that term is 0.04, while “Productivity” scores 0.21, you have a Dilution Problem. The AI interprets your page as being about “Productivity,” with “ERP” merely serving as a supporting detail.
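The Dilution Problem can be expressed as a simple comparison once you have salience scores in hand. The sketch below is illustrative only: `find_dilution` and its input format (a plain dict of entity name to salience) are hypothetical names chosen for this example, and the scores are the ones quoted above rather than real API output.

```python
def find_dilution(salience_by_entity, target):
    """Compare the target's salience with the highest-scoring entity.

    salience_by_entity: dict mapping entity name -> salience (0.0 to 1.0).
    This input shape is an assumption made for the example.
    """
    if not salience_by_entity:
        raise ValueError("No entities to compare")

    # A target the API never detected is treated as salience 0.0.
    target_score = salience_by_entity.get(target, 0.0)

    # The dominant entity is whichever one has the highest salience.
    dominant, dominant_score = max(
        salience_by_entity.items(), key=lambda item: item[1]
    )

    return {
        "target": target,
        "target_salience": target_score,
        "dominant_topic": dominant,
        "dominant_salience": dominant_score,
        # Diluted when a different entity outranks the target.
        "diluted": dominant != target and dominant_score > target_score,
    }


report = find_dilution(
    {"Enterprise ERP": 0.04, "Productivity": 0.21}, "Enterprise ERP"
)
print(report)
```

With the numbers from the example, the report flags “Productivity” as the dominant topic and marks the page as diluted.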

The Engineering Gap: Frequency is not equal to Confidence

Many SEOs operate on the outdated model of Term Frequency-Inverse Document Frequency (TF-IDF). They assume that if they mention the keyword often enough relative to the rest of the corpus, relevance is established.

Google’s BERT and subsequent transformers moved past this. They analyze syntax, dependency trees, and sentiment to determine the actual meaning of the text.

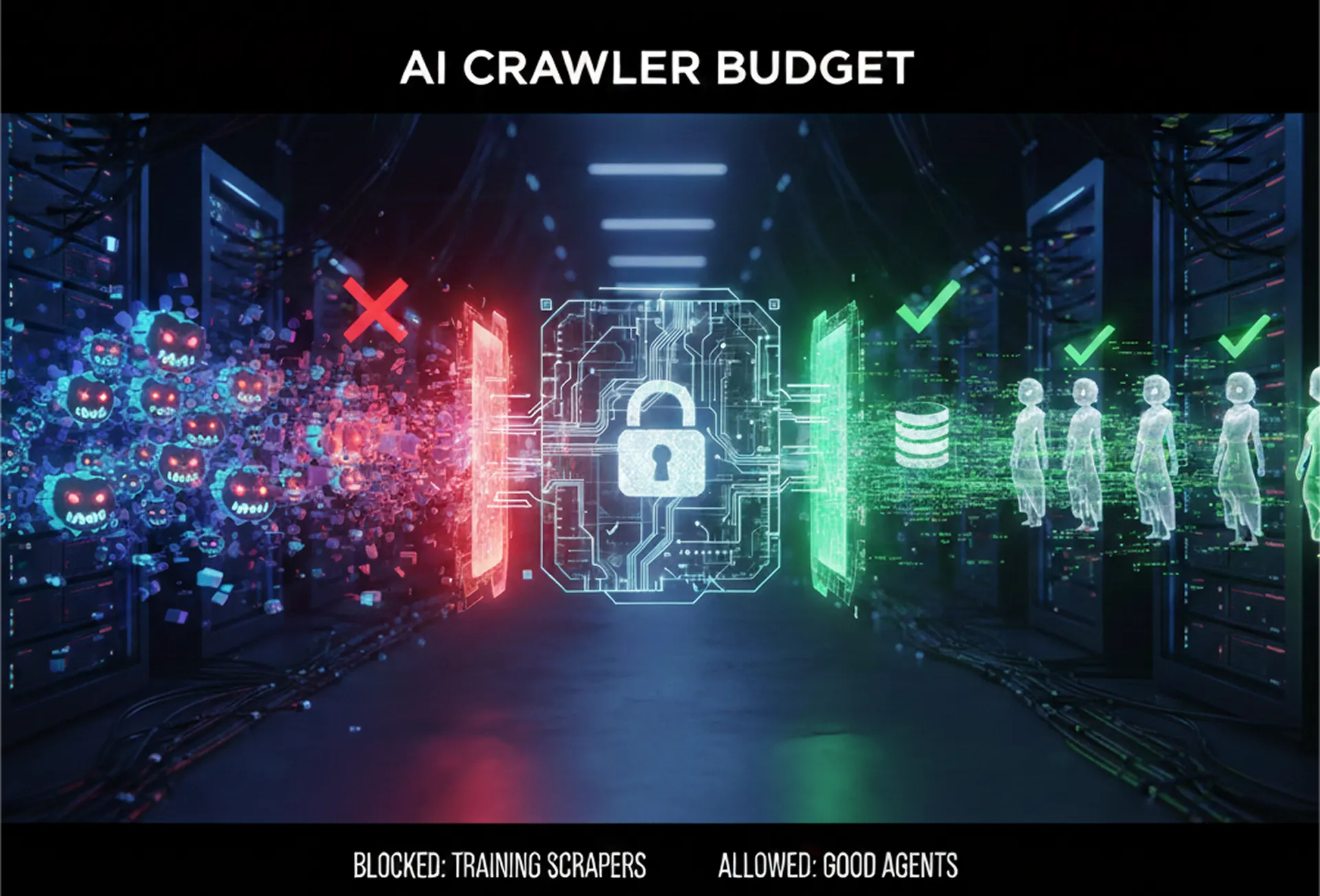

If your content has low salience for its primary target, you are asking the search engine to take a leap of faith. You are relying on backlinks and metadata to do the heavy lifting because the content itself lacks “NLP Confidence”. This is why modern optimization must be engineered around semantic GraphRAG architecture for entity context — where meaning is derived from relationships, not repetition.

The Protocol: How to Audit Salience

To audit this, we move away from standard SEO tools and utilize the Google Cloud Natural Language API.

Step 1: The Setup

You need a text sample from your live page (or draft) and a script to send it to the API.
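If the sample comes from a live page, you will usually want to strip markup, scripts, and styles first so the API scores the visible copy. The following is a minimal sketch using only the Python standard library. The names `VisibleTextExtractor` and `html_to_text` are hypothetical, and the list of skipped tags is an assumption that may need extending for your templates.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text that sits outside script, style, and noscript tags."""

    SKIPPED_TAGS = {"script", "style", "noscript"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text found outside skipped tags.
        if self._skip_depth == 0 and data.strip():
            self._chunks.append(data.strip())

    def text(self):
        return " ".join(self._chunks)


def html_to_text(html):
    """Return the visible text of an HTML string as one line."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


sample_html = (
    "<html><head><style>p {color: red}</style></head>"
    "<body><h1>Enterprise ERP</h1>"
    "<p>ERP unifies finance and inventory.</p>"
    "<script>track()</script></body></html>"
)
sample_text = html_to_text(sample_html)
print(sample_text)
```

Navigation, footers, and cookie banners also count as text to the API, so consider removing them before scoring if you only want to audit the article body.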

Step 2: The Script (Python)

For the Data Analyst, here is a Python snippet using the google-cloud-language library to audit a piece of content. This is the same class of NLP system used by Google Search, Gemini, and Knowledge Graph ingestion pipelines to evaluate entity confidence.

At scale, these systems rely on structured Wikidata anchors to ground entity identity and reduce ambiguity — which is why salience scoring is not just a content metric, but a knowledge graph validation signal.

```python
from google.cloud import language_v1


def audit_salience(text_content, target_keyword):
    """
    Analyzes the text and returns the salience score of the target keyword
    along with the top entity detected by Google NLP.
    """
    client = language_v1.LanguageServiceClient()

    # Configure the document
    document = language_v1.Document(
        content=text_content,
        type_=language_v1.Document.Type.PLAIN_TEXT,
    )

    # Detect entities
    response = client.analyze_entities(request={"document": document})

    target_score = 0.0
    top_entity_name = "None"
    top_entity_score = 0.0

    # Iterate through entities to find the target and the highest-scoring entity
    for entity in response.entities:
        # Track the highest-scoring entity (the "topic" according to the API)
        if entity.salience > top_entity_score:
            top_entity_score = entity.salience
            top_entity_name = entity.name

        # Check for our target keyword; keep the best score if several entities match
        if target_keyword.lower() in entity.name.lower():
            target_score = max(target_score, entity.salience)

    return {
        "target_keyword": target_keyword,
        "target_salience": f"{target_score:.4f}",
        "dominant_topic": top_entity_name,
        "dominant_score": f"{top_entity_score:.4f}",
        "confidence_gap": f"{top_entity_score - target_score:.4f}",
    }


# Example usage (requires Google Cloud credentials with the Natural Language API enabled)
text = """[Insert your full blog post text here]"""
audit = audit_salience(text, "Generative AI")
print(audit)
```

Step 3: Interpreting the Data

When you run this audit, you will likely see one of two scenarios:

Scenario A: High Confidence (The Goal)

- Target: “Generative AI”

- Target Salience: 0.45

- Dominant Topic: “Generative AI”

- Result: The code confirms the content is mathematically centered on the target.

Scenario B: The “Footnote” Effect (The Failure)

- Target: “Generative AI”

- Target Salience: 0.02

- Dominant Topic: “Future of Work” (0.38)

- Result: You wrote a piece about the future of work. You mentioned AI, but you did not write about AI. To the algorithm, your target keyword is a footnote.
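When auditing many pages, it can help to label each result automatically. The sketch below assumes an audit dict with the same keys the script returns (`target_keyword`, `target_salience`, `dominant_topic`). The function name `classify_audit` and the `min_target_salience` cutoff of 0.10 are hypothetical choices for illustration, not thresholds published by Google, so tune them against your own pages.

```python
def classify_audit(audit, min_target_salience=0.10):
    """Label an audit result as Scenario A or Scenario B.

    audit: dict shaped like the return value of audit_salience().
    min_target_salience: assumed cutoff, not an official value.
    """
    target = audit["target_keyword"].lower()
    dominant = audit["dominant_topic"].lower()
    # The script formats scores as strings, so convert before comparing.
    target_score = float(audit["target_salience"])

    # Scenario A: the dominant entity contains the target keyword
    # and the target clears the cutoff.
    if target in dominant and target_score >= min_target_salience:
        return "A: high confidence"
    return "B: footnote effect"


scenario_a = {
    "target_keyword": "Generative AI",
    "target_salience": "0.4500",
    "dominant_topic": "Generative AI",
}
scenario_b = {
    "target_keyword": "Generative AI",
    "target_salience": "0.0200",
    "dominant_topic": "Future of Work",
}

label_a = classify_audit(scenario_a)
label_b = classify_audit(scenario_b)
print(label_a)
print(label_b)
```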

Engineering Salience: Optimization Logic

If your audit reveals a low score (Scenario B), you cannot fix it by simply adding the keyword more times (stuffing). You must manipulate the linguistic structure of the document to signal importance.

- Syntax Prioritization (Subject vs. Object)

NLP algorithms assign higher weight to entities appearing in the Subject position of a sentence rather than the Object position.

- Low Salience: “Companies are seeing benefits from AI.” (AI is the object).

- High Salience: “AI drives benefits for companies.” (AI is the subject).

- The First 10% Rule

Google’s API weighs the first 100 tokens heavily. If your target entity does not appear in the first paragraph, its maximum potential salience score drops significantly. Do not “bury the lead.”

- Dependency Trees & Co-Occurrence

Ensure the verbs associated with your entity are strong and distinct. If your target entity is constantly modifying other nouns (e.g., “AI tools,” “AI software”), the entity “AI” loses salience to the nouns it modifies (“tools,” “software”). Isolate the entity.
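The early-mention guidance above can be pre-checked locally before spending API calls. The sketch below is a rough heuristic under stated assumptions: it splits on word characters with a regular expression, which will not match the API's own tokenization exactly, and the function names and the default `window=100` (taken from the 100-token figure mentioned in this article) are illustrative.

```python
import re


def first_mention_position(text, target):
    """Return the 0-based word index where target first appears, or None."""
    tokens = re.findall(r"\w+", text.lower())
    target_tokens = re.findall(r"\w+", target.lower())
    if not target_tokens:
        return None

    span = len(target_tokens)
    # Slide a window over the document looking for the full phrase.
    for i in range(len(tokens) - span + 1):
        if tokens[i:i + span] == target_tokens:
            return i
    return None


def appears_early(text, target, window=100):
    """True when the target's first mention starts within the first `window` words."""
    position = first_mention_position(text, target)
    return position is not None and position < window


early_draft = "Generative AI drives measurable benefits for companies."
late_draft = ("filler " * 150) + "Generative AI appears late."

early_ok = appears_early(early_draft, "Generative AI")
late_ok = appears_early(late_draft, "Generative AI")
print(early_ok, late_ok)
```

A passing check does not guarantee a high score. It only rules out one cheap-to-detect cause of low salience before you rerun the full audit.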

At an advanced level, this is not just content optimization — it is authority engineering. The same entity confidence principles used for brand disambiguation and Knowledge Graph construction also apply when auditing founder authority via structured entities across AI systems.

Conclusion: Data Over Feeling

We are moving into an era where content optimization is less about “writing well” and more about “disambiguation engineering.”

As a data analyst or content engineer, your job is to audit the gap between human perception and machine reality. By auditing Salience Scores, you stop hoping the AI understands your content, and you start mathematically ensuring it.