Agentic SEO: Optimizing for Agents

In the next 1–2 years, search and ecommerce will shift dramatically: alongside humans typing into search bars, autonomous AI agents will execute actions on behalf of users, such as booking flights, purchasing software, and researching vendors. To capitalize on this emerging frontier, traditional SEO must evolve into “Agentic SEO”: engineering your site for AI agents that don’t just scan content but interact, transact, and automate operations autonomously.

This isn’t speculative. It’s the Next Big Thing, a shift from indexing for humans to integrating with machine workflows. This guide outlines how to prepare your systems, APIs, schema, and infrastructure to lead in this era.

Why Agentic SEO Matters Now

CTOs, product leaders, and infrastructure teams need to understand a simple truth: AI agents don’t “read” HTML like humans; they call APIs and parse structured instructions. If your site only exposes text and UI, you’re invisible to machines that reason programmatically.

The emerging class of bots will interpret meta-search signals not as ranked positions, but as executable capabilities. They ask:

- Can I perform a task here?

- Can I buy, reserve, or transact through this site?

- Can I reliably integrate this endpoint in a workflow?

If the answer is “no,” you risk missing a potentially enormous channel of low-friction conversions before it arrives.

Redefining Ranking: From Keywords to Capabilities

Classic SEO treats ranking as a function of crawl → index → score. But agentic bots add an action layer:

Discovery ⇒ Ability ⇒ Execution

To machine agents:

- Discovery means the schema and APIs are visible and reachable.

- Ability means the product/service can be executed programmatically.

- Execution means clear, documented endpoints that agents can call.

This is the mindset shift: optimize not just for search results but for search-to-action pipelines.

Agents Don’t Read HTML; They Read APIs

Traditional SEO relies on HTML content and structured data markup. Autonomous AI buyers, whether built on GPT extensions or future agent platforms, prefer programmatic interfaces to the HTML UI wherever one exists. Crawlers like GPTBot and CCBot still fetch pages, but an agent that needs to act looks for an interface it can call.

What This Means

HTML ≠ Actionable Intelligence

APIs + Schema = Actionable Intelligence

Effective agentic optimization exposes:

- Product metadata via APIs (prices, availability, variants)

- Transactional pathways (add to cart, bookings, digital goods delivery)

- High-fidelity schema with potential actions that bots can interpret natively

Put simply: if your commerce engine doesn’t speak HTTP API, you’re invisible to agents.
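As a rough illustration of exposing product metadata over HTTP, here is a minimal sketch using only Python's standard library. The catalog contents, the `/products/<sku>` path, the field names, and the `call` helper are all assumptions made for the example, not a prescribed format.

```python
import json
from wsgiref.util import setup_testing_defaults

# Hypothetical in-memory catalog; a real system would query a database.
CATALOG = {
    "SKU-1": {
        "name": "Premium Product",
        "price": {"amount": "49.00", "currency": "USD"},
        "availability": "in_stock",
        "variants": ["small", "large"],
    }
}


def app(environ, start_response):
    """Minimal WSGI app: /products/<sku> returns product metadata as JSON."""
    parts = environ.get("PATH_INFO", "").strip("/").split("/")
    if len(parts) == 2 and parts[0] == "products" and parts[1] in CATALOG:
        status, body = "200 OK", {"sku": parts[1], **CATALOG[parts[1]]}
    else:
        status, body = "404 Not Found", {"error": "product_not_found"}
    payload = json.dumps(body).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(payload)))])
    return [payload]


def call(path):
    """Invoke the app in-process, without opening a network socket."""
    environ = {}
    setup_testing_defaults(environ)
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)


status, product = call("/products/SKU-1")
print(status, product["price"])
```

To try it over real HTTP, the same `app` can be served locally with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()`.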

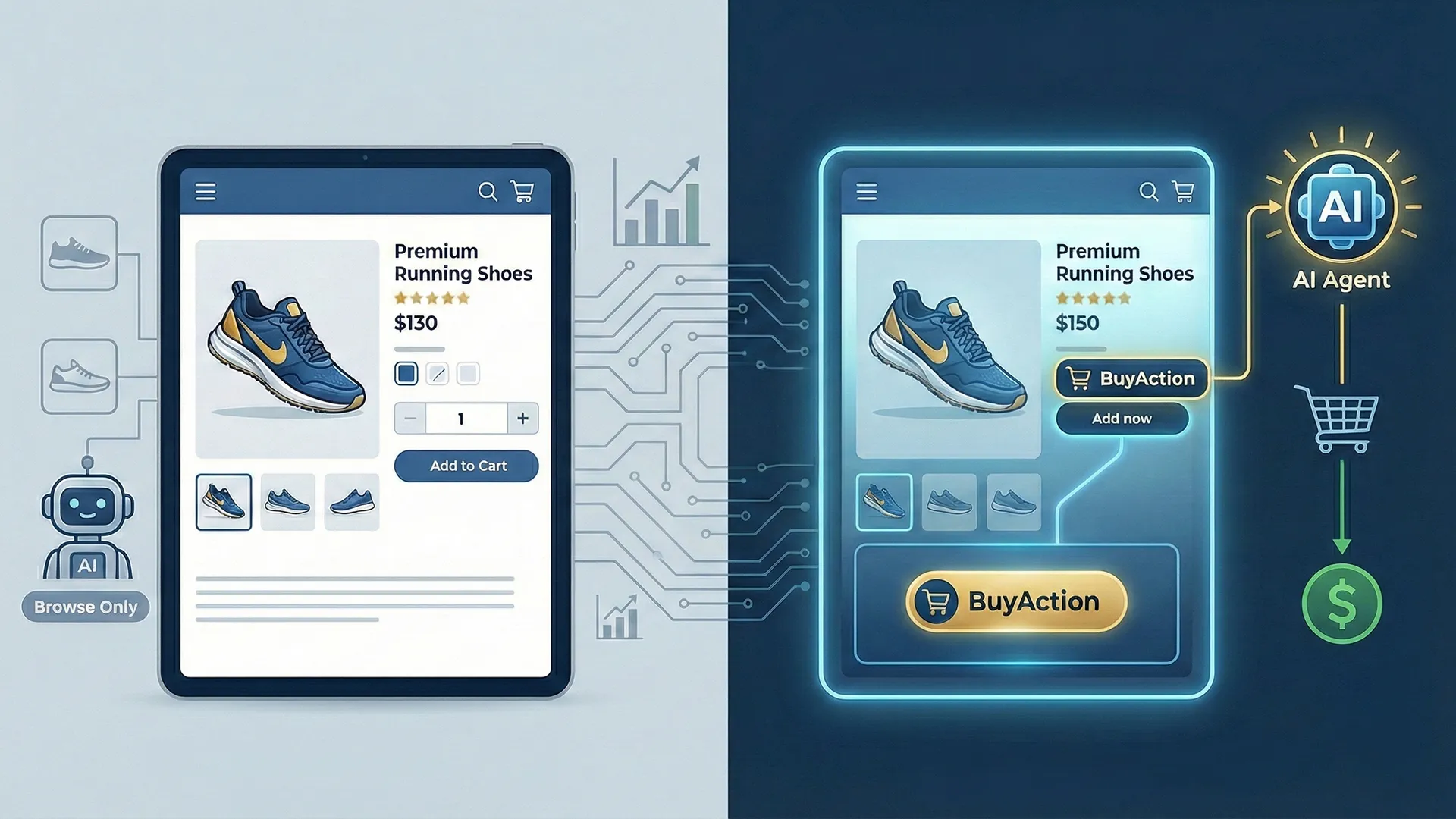

Structured Data for Agents: Action Schema

Structured data has traditionally been used to display rich snippets. But to prepare for agents that execute tasks, structured data must not only describe, but also declare executable actions.

That’s where Schema.org’s Action types come in: specifically BuyAction and ReserveAction. By declaring potential actions, you tell an agent:

“Yes, you can perform this task here.”

This is vastly different from a typical Product schema.

Example: BuyAction

BuyAction tells an AI agent how to initiate a transaction rather than merely describe a product. It bridges discovery and action:

    {
      "@context": "https://schema.org",
      "@type": "Product",
      "name": "Premium Product",
      "offers": { … },
      "potentialAction": {
        "@type": "BuyAction",
        "target": {
          "@type": "EntryPoint",
          "urlTemplate": "https://example.com/cart?add=SKU&instant=true"
        }
      }
    }

This single declaration signals a clear path from intention to execution, no UI scraping required.
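To make that path concrete, here is a minimal Python sketch of how an agent might read such a declaration and pull out the action target. The helper name `find_action_targets` is hypothetical, and the elided `offers` block is left out so the sample parses as JSON; real agents may interpret the markup differently.

```python
import json

# The example markup from above, minus the elided "offers" block.
JSON_LD = """
{
  "@context": "https://schema.org",
  "@type": "Product",
  "name": "Premium Product",
  "potentialAction": {
    "@type": "BuyAction",
    "target": {
      "@type": "EntryPoint",
      "urlTemplate": "https://example.com/cart?add=SKU&instant=true"
    }
  }
}
"""


def find_action_targets(doc, action_type):
    """Return target URLs for potentialAction entries of the given type."""
    actions = doc.get("potentialAction", [])
    if isinstance(actions, dict):  # a single action or a list are both valid
        actions = [actions]
    targets = []
    for action in actions:
        if action.get("@type") != action_type:
            continue
        target = action.get("target")
        if isinstance(target, str):  # target given as a plain URL
            targets.append(target)
        elif isinstance(target, dict) and "urlTemplate" in target:
            targets.append(target["urlTemplate"])
    return targets


doc = json.loads(JSON_LD)
print(find_action_targets(doc, "BuyAction"))
```

An agent looking for a `ReserveAction` on the same page would get an empty list back, which is the machine-readable version of "you cannot perform this task here."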

For a deeper dive into actionable schema implementation, see Action Schema: Implementing Potential Action for AI Agents.

Schema Beyond Flat Pages: Nested JSON-LD

As bots become more sophisticated, they’ll parse knowledge graphs and multi-entity relationships, not flat product pages. Nested JSON-LD is the next phase: connecting products, actions, entities, and organizational context into one machine-digestible graph.

This prepares your site for RAG and Graph-augmented retrieval engines that expect relationships, not fragments.
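One way to picture such a graph: the sketch below builds a small JSON-LD `@graph` in Python, linking a product to its organization by `@id` instead of repeating the details. The URLs, names, and the `dangling_refs` helper are illustrative assumptions; the helper simply checks that every reference points at a node that exists.

```python
import json

BASE = "https://example.com"  # hypothetical site

organization = {
    "@type": "Organization",
    "@id": f"{BASE}/#org",
    "name": "Example Co",
}
product = {
    "@type": "Product",
    "@id": f"{BASE}/products/sku-1#product",
    "name": "Premium Product",
    "brand": {"@id": organization["@id"]},  # reference, not a copy
    "offers": {
        "@type": "Offer",
        "price": "49.00",
        "priceCurrency": "USD",
        "seller": {"@id": organization["@id"]},
    },
}
graph = {"@context": "https://schema.org", "@graph": [organization, product]}


def dangling_refs(doc):
    """Return @id references that match no node defined in the @graph."""
    defined = {node["@id"] for node in doc["@graph"] if "@id" in node}
    found = set()

    def walk(value):
        if isinstance(value, dict):
            if set(value) == {"@id"}:  # an object holding only @id is a reference
                found.add(value["@id"])
            for child in value.values():
                walk(child)
        elif isinstance(value, list):
            for child in value:
                walk(child)

    walk(doc["@graph"])
    return found - defined


print(json.dumps(graph, indent=2))
print("dangling references:", dangling_refs(graph))
```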

Engineered Discovery: API-First Crawling

Crawlers like GPTBot or CCBot don’t simulate human clicks; they fetch URLs directly. For agents that go a step further and call endpoints, your API documentation, OpenAPI specs, authentication flows, and rate limits effectively become ranking signals.

Design your public APIs to:

- Return structured, versioned JSON responses.

- Include hypermedia links (HATEOAS) for discovery.

- Adhere to RESTful or GraphQL conventions that bots understand.

- Use consistent, documented error codes.

For technical teams, this is familiar territory, but SEO teams rarely think in API terms. Agentic SEO unifies these worlds.
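The API design points above can be sketched in a few lines of Python. The envelope shape, the `v1` label, the link relation names, and the paths are assumptions chosen for illustration; the point is only that success and error responses share a predictable, versioned structure and that links advertise the next possible action.

```python
import json

API_VERSION = "v1"  # hypothetical version label


def link(rel, href, method="GET"):
    """One hypermedia link: what it is, where it lives, how to call it."""
    return {"rel": rel, "href": href, "method": method}


def product_response(sku, price, in_stock):
    """Success envelope: data plus links describing what can be done next."""
    links = [link("self", f"/api/{API_VERSION}/products/{sku}")]
    if in_stock:
        # Only advertise the purchase path when it can actually succeed.
        links.append(link("add-to-cart", f"/api/{API_VERSION}/cart/items", "POST"))
    return {
        "version": API_VERSION,
        "data": {"sku": sku, "price": price, "in_stock": in_stock},
        "links": links,
    }


def error_response(status, code, message):
    """Error envelope with a stable, machine-readable code."""
    return {
        "version": API_VERSION,
        "error": {"status": status, "code": code, "message": message},
    }


print(json.dumps(product_response("SKU-1", "49.00", True), indent=2))
print(json.dumps(error_response(404, "product_not_found", "No such SKU"), indent=2))
```

Dropping the `add-to-cart` link for out-of-stock items lets an agent learn that the action is unavailable without attempting it and parsing a failure.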

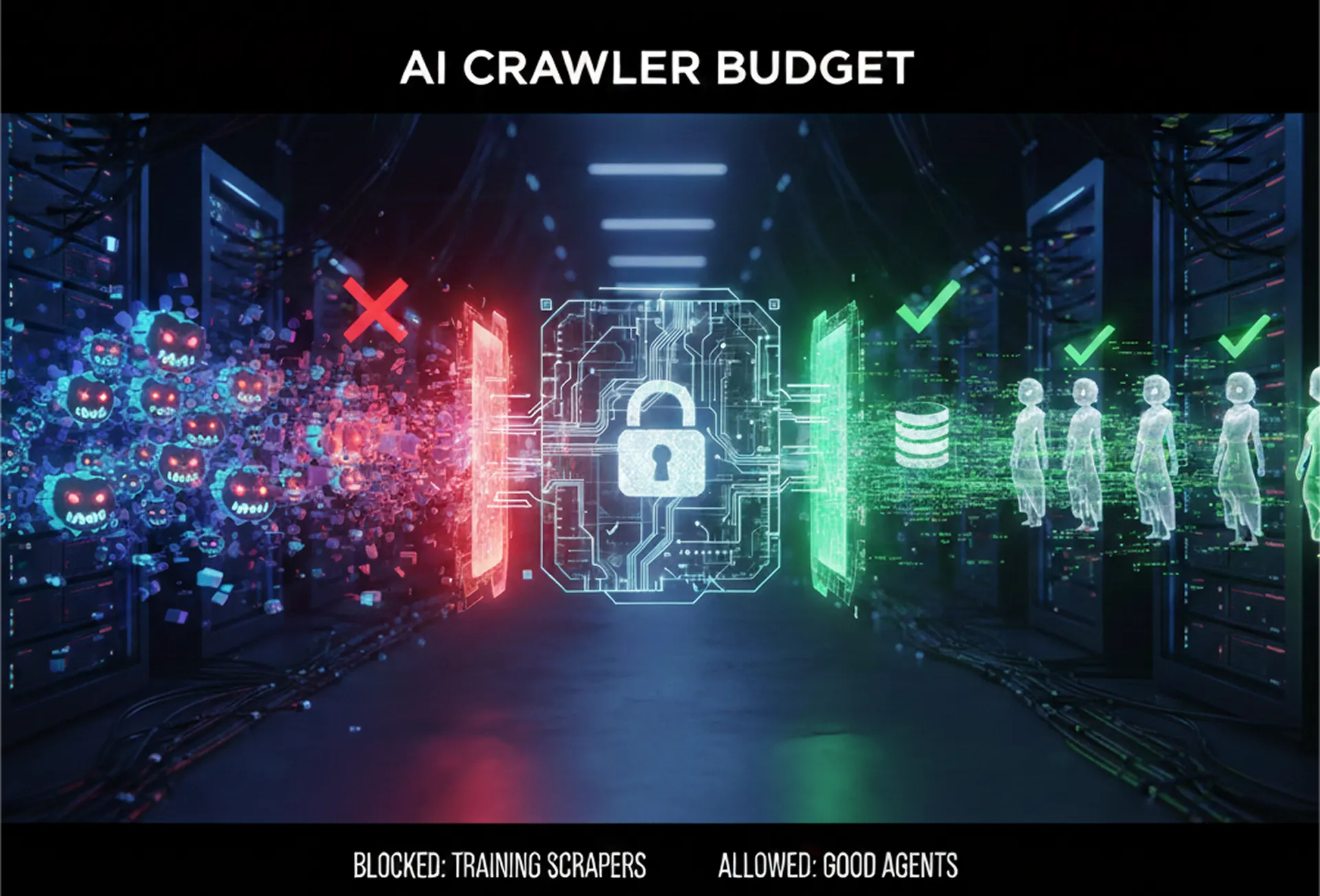

Filtering Good Agents From Scrapers

Opening your API universe to every bot is a bad idea, especially for infrastructure leads concerned about cost.

Key strategies:

- API Key & Authentication Tiers: Only allow deep transactional access to authenticated agents.

- Robots & Agent Fingerprinting: Differentiate between helpful crawlers (such as GPTBot, or Google crawlers governed by the Google-Extended token) and brute scrapers.

- Rate Limiting & Cost Controls: Throttle anonymous access to preserve server resources.

This balances accessibility with protection against infrastructure overload and DDoS-like crawling. Agents should access what they need, not everything you own.
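As a sketch of how authentication tiers and throttling can work together, the Python below applies a token bucket per client, with a larger budget for callers that present a known API key. The tier names, limits, and key store are hypothetical; in production this logic usually lives in an API gateway, not in application code.

```python
import time

# Hypothetical tiers: allowed requests per minute for each class of caller.
TIER_LIMITS = {"anonymous": 10, "verified_agent": 120}

# Hypothetical key store; a real deployment would look keys up in a database.
API_KEYS = {"key-abc123": "verified_agent"}


class TokenBucket:
    """Token bucket: refills continuously, each request spends tokens."""

    def __init__(self, per_minute, now):
        self.capacity = float(per_minute)
        self.refill_per_sec = per_minute / 60.0
        self.tokens = self.capacity
        self.updated = now

    def allow(self, now, cost=1.0):
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class TieredLimiter:
    """Keeps one bucket per (client, tier); unknown keys fall back to anonymous."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock
        self.buckets = {}

    def allow(self, client_id, api_key=None, cost=1.0):
        tier = API_KEYS.get(api_key, "anonymous")
        now = self.clock()
        key = (client_id, tier)
        if key not in self.buckets:
            self.buckets[key] = TokenBucket(TIER_LIMITS[tier], now)
        return self.buckets[key].allow(now, cost)


limiter = TieredLimiter()
print(limiter.allow("203.0.113.7"))                       # anonymous caller
print(limiter.allow("agent-1", api_key="key-abc123"))     # authenticated agent
```

The `cost` parameter is there so expensive endpoints (a full catalog export, say) can spend more of a caller's budget than a single product lookup.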

Infrastructure & Cost Management

Engineering for agents doesn’t stop at schema. It has serious infrastructure implications:

- API Gateways to manage agent traffic

- CDNs + Edge Caching for response delivery

- Cost-based throttling to prevent misuse

- Service monitoring & SLAs for contracted bot integrations

These are architectural concerns for CTOs and platform leads, not just SEO teams.
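For the caching item in particular, a small Python sketch shows the idea: derive an `ETag` from the response body and answer repeat requests with `304 Not Modified`, so CDNs and well-behaved agents avoid re-downloading unchanged data. The function name, the 60-second `max_age`, and the truncated SHA-256 tag are assumptions for illustration.

```python
import hashlib
import json


def cacheable_response(body, if_none_match=None, max_age=60):
    """Return (status, headers, payload) with validators for edge caches."""
    # Serialize deterministically so equal data always yields the same ETag.
    payload = json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")
    etag = '"' + hashlib.sha256(payload).hexdigest()[:16] + '"'
    headers = {"ETag": etag, "Cache-Control": f"public, max-age={max_age}"}
    if if_none_match == etag:
        # The caller already holds this exact version: send no body.
        return 304, headers, b""
    headers["Content-Type"] = "application/json"
    return 200, headers, payload


product = {"sku": "SKU-1", "price": "49.00"}
status, headers, payload = cacheable_response(product)
print(status, headers["ETag"])

# A repeat request that sends the ETag back gets an empty 304 response.
status_again, _, payload_again = cacheable_response(product, if_none_match=headers["ETag"])
print(status_again, len(payload_again))
```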

Testing & Verification

Automated validation of structured data and APIs must now be part of CI/CD, not an afterthought. Unit tests for JSON-LD compliance, API contract tests, and schema correctness should break builds if malformed. Tools like pytest can help automate schema validation integrated into pipelines.
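A minimal sketch of what such a check could look like, written as plain test functions that pytest would collect. The required keys and the https-only rule are assumptions standing in for whatever policy your team adopts; they are not part of the Schema.org specification.

```python
import json

REQUIRED_KEYS = {"@context", "@type", "name"}  # assumed house policy


def validate_product_jsonld(raw):
    """Return a list of problems; an empty list means the markup passed."""
    try:
        doc = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(doc, dict):
        return ["top-level value must be a JSON object"]
    problems = [f"missing key: {key}" for key in sorted(REQUIRED_KEYS - set(doc))]
    if doc.get("@type") != "Product":
        problems.append("@type must be Product")
    action = doc.get("potentialAction")
    if action is not None:
        target = action.get("target") if isinstance(action, dict) else None
        url = target.get("urlTemplate") if isinstance(target, dict) else target
        if not (isinstance(url, str) and url.startswith("https://")):
            problems.append("potentialAction target must be an https URL")
    return problems


VALID = json.dumps({
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Premium Product",
    "potentialAction": {
        "@type": "BuyAction",
        "target": {"@type": "EntryPoint", "urlTemplate": "https://example.com/cart?add=SKU"},
    },
})


def test_valid_markup_passes():
    assert validate_product_jsonld(VALID) == []


def test_typographic_quotes_are_rejected():
    # Curly quotes pasted from a CMS or word processor are not valid JSON.
    problems = validate_product_jsonld("{“@type”: “Product”}")
    assert problems and problems[0].startswith("invalid JSON")


def test_insecure_action_target_is_flagged():
    doc = json.loads(VALID)
    doc["potentialAction"]["target"]["urlTemplate"] = "http://example.com/cart"
    assert "potentialAction target must be an https URL" in validate_product_jsonld(json.dumps(doc))
```

Running `pytest` on this file in CI would fail the build whenever any of the three checks breaks.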

Ranking Agents: Who Gets Preference?

Not all bots are equal. You want bots that:

- Respect robots policies

- Honor API contracts

- Have documented purposes

Document your APIs using OpenAPI/Swagger, and use robots.txt rules to state which API paths each crawler may fetch, so that the right agents index the right resources.
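A robots policy for API paths can be checked in code before it ships. The sketch below uses Python's standard `urllib.robotparser` against a hypothetical policy; the paths and the `SomeScraper` agent name are made up for the example. Keep in mind that robots.txt is advisory, so it complements authentication and rate limiting and does not replace them.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy: GPTBot may read product data but not checkout;
# every other crawler is kept out of the API entirely.
ROBOTS_TXT = """\
User-agent: GPTBot
Allow: /api/v1/products
Disallow: /api/v1/checkout

User-agent: *
Disallow: /api/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent, path):
    """Would this policy let the named crawler fetch the given path?"""
    return parser.can_fetch(agent, f"https://example.com{path}")


for agent, path in [
    ("GPTBot", "/api/v1/products/sku-1"),
    ("GPTBot", "/api/v1/checkout"),
    ("SomeScraper", "/api/v1/products/sku-1"),
    ("SomeScraper", "/docs"),
]:
    print(f"{agent:12} {path:26} {allowed(agent, path)}")
```

Python's parser applies the rules of a matching group in file order, so the more specific `Allow` line is listed first here.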

Linking Agentic SEO to Existing AI Search Paradigms

Agentic SEO doesn’t replace existing AI-centric strategies like RAG and vector search; it complements them.

- RAG pipelines still rely on high-quality, linked, authoritative content.

- Knowledge graphs benefit from accurate nested JSON-LD.

Together, they position your site to be both understood and acted upon by future AI intermediaries.

Tactical Roadmap for Implementation

- Audit your product/service APIs for machine readability

- Map endpoints to potential actions using Schema.org Action types

- Implement JSON-LD with BuyAction/ReserveAction semantics

- Update your API docs and expose specs

- Add automated schema/API validation in CI/CD

- Monitor crawlers, bot performance, and infrastructure costs

Much like Generative Engine Optimization (GEO), this isn’t optional; it’s foundational.

Conclusion

Agentic SEO is more than a trend; it is the next paradigm shift in how digital platforms are discovered, interpreted, and transacted with by machines. For product leads, it’s strategic foresight; for ecommerce, it’s direct revenue impact; for infrastructure, it’s efficient, controlled access.

If your systems can be acted upon by autonomous agents, not just indexed, you will own the future channel for discovery and conversion.

Get Expert Help with Agentic & AI-Ready SEO

Need guidance implementing Agentic SEO and preparing your business for the autonomous age? Cubitrek’s expert SEO team can help you engineer your platform for AI visibility, structured action, and machine-driven growth.

Explore professional services at https://cubitrek.com/seo/

Frequently Asked Questions

1- What is Agentic SEO?

Agentic SEO is the practice of optimizing websites and digital assets for AI agents as well as human users. It focuses on making content, APIs, and actions understandable and executable by agentic AI, so that agents can discover, evaluate, and perform tasks like comparisons, purchases, or bookings autonomously. It also matters for visibility in AI Overviews.

2- Can ChatGPT do SEO?

ChatGPT can assist with SEO tasks such as keyword research, content creation, schema ideas, and strategy planning, but it does not replace full SEO execution. True SEO still requires technical implementation, data access, and performance tracking beyond what generative AI alone can handle.

3- What is the difference between agent and agentic?

An agent is a system that performs a task when instructed, while “agentic” describes systems designed to act autonomously, make decisions, and complete multi-step workflows. The contrast with generative AI is similar: generative AI creates content, whereas agentic AI decides and acts on that content.

4- What are the 4 types of agents?

The four common types of AI agents are:

- Reactive agents – respond to inputs without memory.

- Deliberative agents – plan and reason before acting.

- Learning agents – improve behavior over time.

- Autonomous (Agentic) agents – independently execute goals.

These categories are often referenced when discussing Agentic AI examples used in search, commerce, and automation.

5- How does Agentic SEO relate to tools like Bard or WordLift?

Agentic SEO aligns with AI platforms such as Bard (since renamed Gemini) and tools like the WordLift AI SEO Agent, which focus on structured data, semantic understanding, and machine-readable content that AI agents can interpret and act upon.
