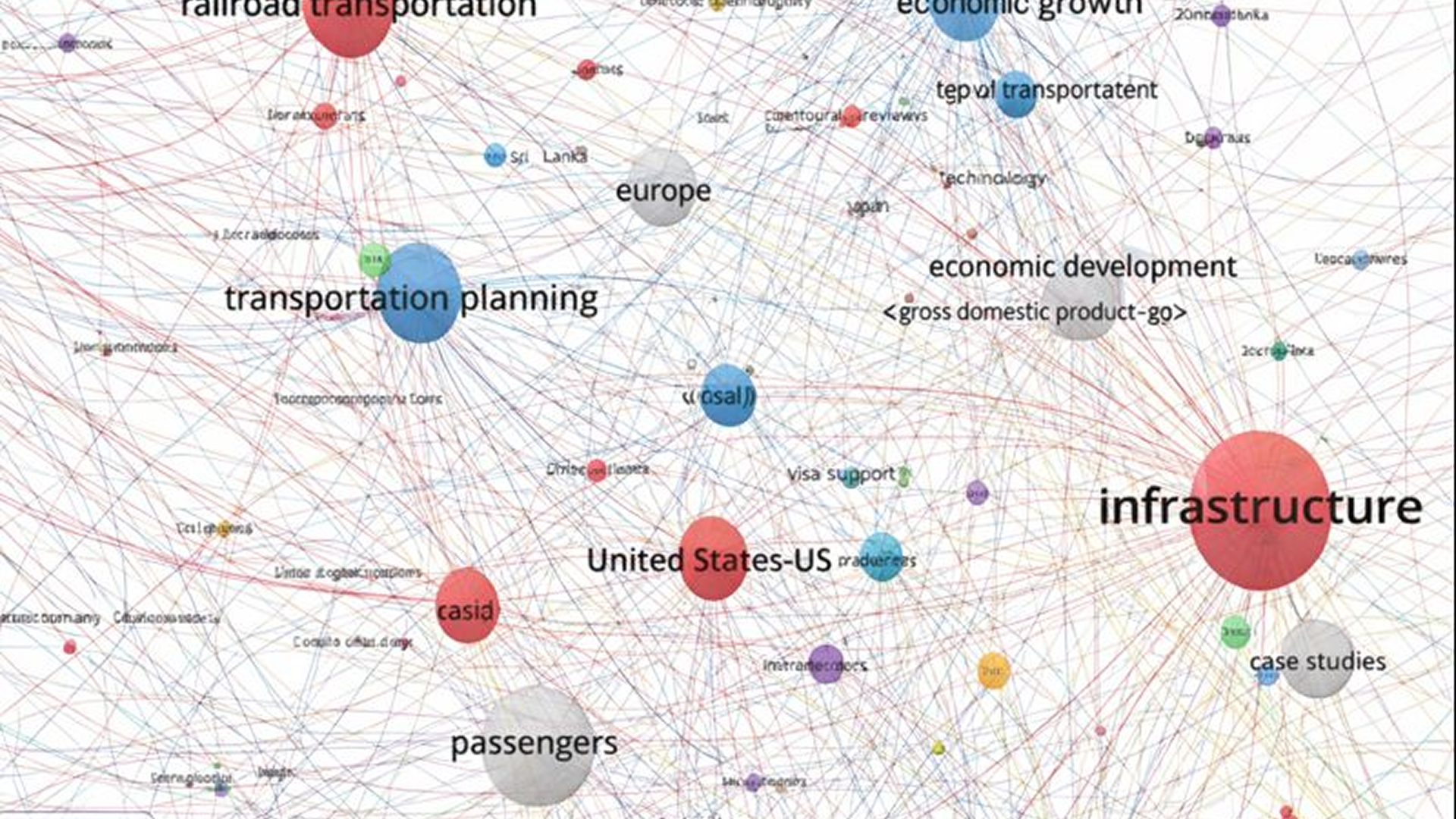

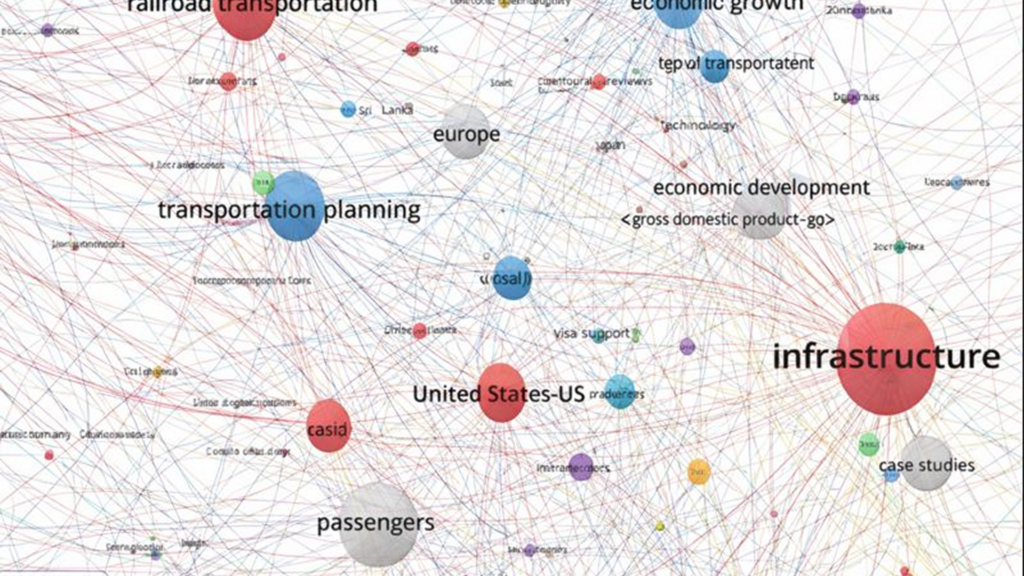

Entity-First Architecture (Knowledge Graph Engineering)

For two decades, digital presence was defined by strings of text. We optimized for “keywords”: literal matches typed into a search bar. If you wanted to rank for “enterprise software solutions,” you ensured those three words appeared in your headers and metadata. It was a game of lexical matching. That era is effectively over.

The rise of Large Language Models (LLMs) and generative search engines (like ChatGPT Search, Perplexity, and Google’s AI Overviews) has fundamentally shifted information retrieval from syntactic to semantic. AI does not process strings; it processes concepts. It doesn’t see the keyword “Elon Musk”; it identifies an entity defined by thousands of data points and its relationships to other entities like “Tesla,” “SpaceX,” and “X.”

To control your brand’s narrative in the AI age, you must stop feeding machines keywords and start building a proprietary Knowledge Graph. You must move from “strings” to “things” and the “relationships” that connect them.

This is not merely advanced SEO; it is digital infrastructure engineering designed to ensure your brand is accurately understood, recalled, and referenced by the AI systems now mediating the world’s information.

The Engineering Imperative: Building a Proprietary Graph

If you do not explicitly define your brand entity, an LLM will infer a definition from whatever it has scraped from the open web, which often leads to hallucination or conflation with competitors.

The solution is to move beyond basic Schema markup (like throwing a simple “Organization” tag on your homepage) and instead engineer a robust, nested data structure that can feed directly into “GraphRAG” (Graph Retrieval-Augmented Generation) systems.

1. Deep Nesting for Contextual Density

Modern structured data is not a flat list; it is a hierarchy. To establish true authority, you must nest entities within entities to describe the real-world relationships of your business.

An LLM needs to understand that a specific Person is the founder of this specific Organization, which is headquartered in this specific Place. By nesting these entities within your JSON-LD schema, you are providing a pre-validated subgraph that the AI can easily ingest.

Instead of hoping the AI “connects the dots” between your “About Us” page and your CEO’s bio, you are physically drawing the lines for it.

JSON

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "Organization",
  "name": "Apex Innovations",
  "founder": {
    "@type": "Person",
    "name": "Jane Doe",
    "jobTitle": "CEO",
    "knowsAbout": ["Artificial Intelligence", "Enterprise SaaS"]
  },
  "location": {
    "@type": "Place",
    "address": {
      "@type": "PostalAddress",
      "addressLocality": "San Francisco",
      "addressRegion": "CA"
    }
  }
}
</script>

This nested structure states the relationships explicitly: Jane Doe is the founder of Apex Innovations, which is located in San Francisco, CA.
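To see the "subgraph" that nesting encodes, the following minimal Python sketch walks a trimmed copy of the markup and prints subject-predicate-object triples. The helper names `label` and `triples` are hypothetical, and real JSON-LD processors do considerably more (context expansion, `@id` resolution); this only illustrates the idea.

```python
import json

# Trimmed copy of the nested Organization markup, as plain JSON.
DOC = """
{
  "@context": "https://schema.org",
  "@type": "Organization",
  "name": "Apex Innovations",
  "founder": {
    "@type": "Person",
    "name": "Jane Doe",
    "jobTitle": "CEO"
  },
  "location": {
    "@type": "Place",
    "address": {
      "@type": "PostalAddress",
      "addressLocality": "San Francisco",
      "addressRegion": "CA"
    }
  }
}
"""

def label(node):
    """Readable label for a node: its name if present, otherwise its @type."""
    return node.get("name") or node.get("@type", "Thing")

def triples(node):
    """Yield (subject, predicate, object) for each property, recursing into nested entities."""
    subject = label(node)
    for key, value in node.items():
        # Skip JSON-LD keywords and the name, which is already used as the label.
        if key.startswith("@") or key == "name":
            continue
        values = value if isinstance(value, list) else [value]
        for item in values:
            if isinstance(item, dict):
                yield (subject, key, label(item))
                yield from triples(item)
            else:
                yield (subject, key, item)

graph = list(triples(json.loads(DOC)))
for triple in graph:
    print(triple)
```

Each nested object becomes an edge in the graph, which is the practical meaning of drawing the lines yourself rather than leaving them to inference.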

2. Borrowing Authority: The Power of Immutable IDs

The strongest signal you can send to an LLM is to anchor your proprietary data to existing, trusted knowledge bases. The gold standard for this is Wikidata.

Wikidata assigns unique identifiers (Q-codes) to entities. For example, Tim Cook is not just the string “Tim Cook”; he is entity Q265852.

If your CEO is notable enough to have a Wikidata entry, or if your company partners with major entities that do, you must explicitly link to those Q-codes in your structured data using the sameAs property.

By doing this, you are essentially telling the AI: “When we mention our CEO, we specifically mean the entity identified as Q265852 in your training data.” You are borrowing the immense trust and established relationship graph of Wikidata and grafting your brand onto it.
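As a rough sketch of that linking step, the Python below validates a Q-code and appends the matching Wikidata URL to an entity's `sameAs` list. The function names are hypothetical, and the URL form follows the `https://www.wikidata.org/wiki/...` pattern used elsewhere in this article.

```python
import re

# Wikidata item identifiers are the letter Q followed by a positive integer.
WIKIDATA_ID = re.compile(r"Q[1-9][0-9]*")

def wikidata_url(qid: str) -> str:
    """Build the Wikidata page URL for a Q-code, rejecting malformed IDs."""
    if not WIKIDATA_ID.fullmatch(qid):
        raise ValueError(f"not a Wikidata Q-code: {qid!r}")
    return f"https://www.wikidata.org/wiki/{qid}"

def link_to_wikidata(entity: dict, qid: str) -> dict:
    """Return a copy of the entity with the Wikidata URL added to sameAs."""
    existing = entity.get("sameAs", [])
    if isinstance(existing, str):  # schema.org allows a single string here
        existing = [existing]
    url = wikidata_url(qid)
    merged = list(existing) + ([url] if url not in existing else [])
    return {**entity, "sameAs": merged}

person = {"@type": "Person", "name": "Tim Cook"}
linked = link_to_wikidata(person, "Q265852")
print(linked["sameAs"])
```

Validating the ID before publishing matters because a mistyped Q-code silently points your entity at someone else.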

The "Apex" Problem: Engineering Disambiguation

A major pain point for business owners with common brand names (e.g., “Apex,” “Summit,” “Delta”) is identity confusion. When a user prompts an LLM about “Apex Solutions,” the model might conflate data from fifty different companies with similar names.

This is a failure of disambiguation. You must force the AI to distinguish your entity through “citation triangulation.”

You cannot rely on the name alone. You must provide a unique set of digital coordinates using the sameAs property in your schema to link your entity to its specific, verifiable external profiles.

A robust disambiguation strategy looks like this:

- sameAs: [“https://www.linkedin.com/company/apex-innovations-official”]

- sameAs: [“https://www.crunchbase.com/organization/apex-innovations-inc”]

- sameAs: [“https://en.wikipedia.org/wiki/Apex_Innovations_Tech”]

By providing these three distinct authoritative sources, you create a triangulation point that is unique to your brand. Your “Apex” becomes distinguishable from every other “Apex” because no other entity shares the same combination of external profiles and relationships.
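In actual markup, the three links above would sit in a single `sameAs` array on the Organization. The Python sketch below shows that shape and adds a simple check that the links come from at least three distinct hosts. The `triangulation_report` helper and its `minimum_sources` threshold are assumptions made for illustration, not part of any standard.

```python
from urllib.parse import urlparse

def source_host(url: str) -> str:
    """Hostname of a URL with a leading 'www.' stripped."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host

def triangulation_report(same_as, minimum_sources=3):
    """Count distinct hosts among sameAs links and compare to a chosen minimum."""
    hosts = sorted({source_host(url) for url in same_as})
    return {
        "hosts": hosts,
        "distinct_sources": len(hosts),
        "meets_minimum": len(hosts) >= minimum_sources,
    }

organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Apex Innovations",
    # One array holding every external profile for this entity.
    "sameAs": [
        "https://www.linkedin.com/company/apex-innovations-official",
        "https://www.crunchbase.com/organization/apex-innovations-inc",
        "https://en.wikipedia.org/wiki/Apex_Innovations_Tech",
    ],
}

report = triangulation_report(organization["sameAs"])
print(report)
```

Two links to the same platform count once here, since the point of triangulation is independent sources rather than raw link volume.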

Quantifying Relevance: The Science of "Salience"

Data analysts and content strategists often struggle to measure how effectively content communicates a topic to a machine. We know the human read it, but did the AI understand its core focus?

The metric for this is Salience. Using tools like Google’s Natural Language API, we can analyze text to determine how central an entity is to that document. Salience is scored from 0.0 (irrelevant footnote) to 1.0 (the central theme).

If you write a 2,000-word white paper intended to position your brand as a leader in “Supply Chain Logistics,” but the NLP analysis shows your brand entity has a salience score of 0.05, you have failed. The AI views your brand as background noise in that document.

Content engineering involves structuring articles through clear syntax, subject-predicate-object relationships, and entity placement in headers to ensure your target entity achieves a dominant salience score. You are not just mentioning the topic; you are ensuring the mathematical weight of the document revolves around it.
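A salience audit can be sketched in a few lines of Python. The sample below is shaped like the `entities` list that an entity-analysis response contains (each entry has a `name`, a `type`, and a `salience`), but the values are invented for illustration, and both the `audit` helper and the 0.30 target are assumptions rather than recommended figures.

```python
# Invented sample, shaped like an entity-analysis response; not real API output.
SAMPLE_RESPONSE = {
    "entities": [
        {"name": "supply chain", "type": "OTHER", "salience": 0.41},
        {"name": "logistics", "type": "OTHER", "salience": 0.22},
        {"name": "Apex Innovations", "type": "ORGANIZATION", "salience": 0.05},
    ]
}

def audit(response, entity_name, target=0.30):
    """Report an entity's salience, its rank among all entities, and whether it meets the target."""
    wanted = entity_name.casefold()
    ranked = sorted(
        response.get("entities", []),
        key=lambda entity: entity["salience"],
        reverse=True,
    )
    for rank, entity in enumerate(ranked, start=1):
        if entity["name"].casefold() == wanted:
            score = entity["salience"]
            return {"entity": entity["name"], "salience": score,
                    "rank": rank, "passes": score >= target}
    # Entity never detected: treat it as zero salience.
    return {"entity": entity_name, "salience": 0.0, "rank": None, "passes": False}

result = audit(SAMPLE_RESPONSE, "apex innovations")
print(result)
```

Running a check like this against each draft turns "is the brand central to this document?" into a number you can track across revisions.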

Wiring E-E-A-T Via Knowledge Graph Connections

For Founders and CEOs, personal branding is no longer separate from corporate SEO. Google’s E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) and similar mechanisms in other LLMs rely heavily on understanding who is behind the content.

A standalone “About Us” page is a weak signal. A strong signal is a wired knowledge graph that connects the Founder entity to the Organization entity, and subsequently connects the Founder to proofs of their expertise.

You must link a founder’s external validation points (LinkedIn profiles, published interviews on authoritative domains, speaking engagements) to the Founder entity in schema, nested inside the company entity.

JSON

"founder": {
  "@type": "Person",
  "name": "Jane Doe",
  "sameAs": [
    "https://www.linkedin.com/in/jane-doe-official",
    "https://www.forbes.com/sites/jane-doe/interview-topic"
  ],
  "alumniOf": {
    "@type": "Organization",
    "name": "Stanford University",
    "sameAs": "https://www.wikidata.org/wiki/Q41506"
  }
}

This structure makes expertise machine-readable. It tells the LLM: “This company is led by this person, who is identified by this LinkedIn profile, has been interviewed by Forbes, and graduated from Stanford (Wikidata Q41506).”

This is how you manufacture authority signals in a way that machines can compute and trust.
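One practical way to keep such markup valid is to generate it from data instead of typing it by hand, which avoids problems like curly quotes creeping into the JSON. The Python sketch below assumes a hypothetical `to_script_tag` helper and reuses the example founder. Escaping `</` is a common precaution so that a string value cannot close the script element early.

```python
import json

def to_script_tag(data: dict) -> str:
    """Serialize a dict as JSON-LD wrapped in a script element."""
    body = json.dumps(data, indent=2, ensure_ascii=False)
    # "\/" is a valid JSON escape for "/", so the data still parses unchanged.
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'

organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Apex Innovations",
    "founder": {
        "@type": "Person",
        "name": "Jane Doe",
        "sameAs": ["https://www.linkedin.com/in/jane-doe-official"],
        "alumniOf": {
            "@type": "Organization",
            "name": "Stanford University",
            "sameAs": "https://www.wikidata.org/wiki/Q41506",
        },
    },
}

tag = to_script_tag(organization)
print(tag)
```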

Conclusion: Speak the Language of the Machine

The transition from keyword-based search to entity-based AI is not a subtle evolution; it is a complete rewrite of the rules of digital discovery.

LLMs organize the world through relationships. If your brand is not defined as a distinct “thing” with clear, verified relationships to other authoritative “things,” you are leaving your reputation to the mercy of probabilistic guesswork.

By engineering a proprietary knowledge graph, leveraging established IDs, and focusing on semantic clarity, you move from hoping to be found to ensuring you are understood.