The Physics of Retrieval: RAG and Vector Search

In the era of Generative Engine Optimization (GEO), the practice of optimizing content for AI-driven search engines—readability is no longer just about human comprehension. It is about machine ingestion. We are optimizing for Retrieval-Augmented Generation (RAG) pipelines.

For the CTO and the technical SEO lead, this requires a fundamental strategic shift: moving from “keywords” to “tokens,” and from “pages” to “vectors.” If an LLM cannot retrieve your content effectively from a vector database like Pinecone or Weaviate, your content simply does not exist to the AI. This is the physics of retrieval.

Optimizing Recursive Character Text Splitting for RAG Pipelines

Before an AI understands your content, it must ingest it using a process called Recursive Character Text Splitting. This is the mechanism by which RAG pipelines break down long-form text into manageable “chunks” for processing.

A standard splitter typically looks for separators in a specific order: [“\n\n”, “\n”, ” “, “”]. It attempts to maintain semantic integrity by breaking text at the largest separator (double newlines) before resorting to smaller ones.

How Formatting Affects Chunking

If your content lacks proper semantic HTML structure, specifically clean paragraph breaks (<p>) and hierarchy, you disrupt the logical “chunk.”

- The Risk: Misused <br> tags or “walls of text” force the splitter to sever a sentence in the middle.

- The Consequence: If the context (the Setup) is in “Chunk A” and the technical solution (the Payoff) is in “Chunk B,” the retrieval system may fail to pull both, causing the LLM to hallucinate.

Engineering Best Practice: Ensure every paragraph contains a complete thought. Use distinct HTML block elements to force the splitter to respect your content’s boundaries.

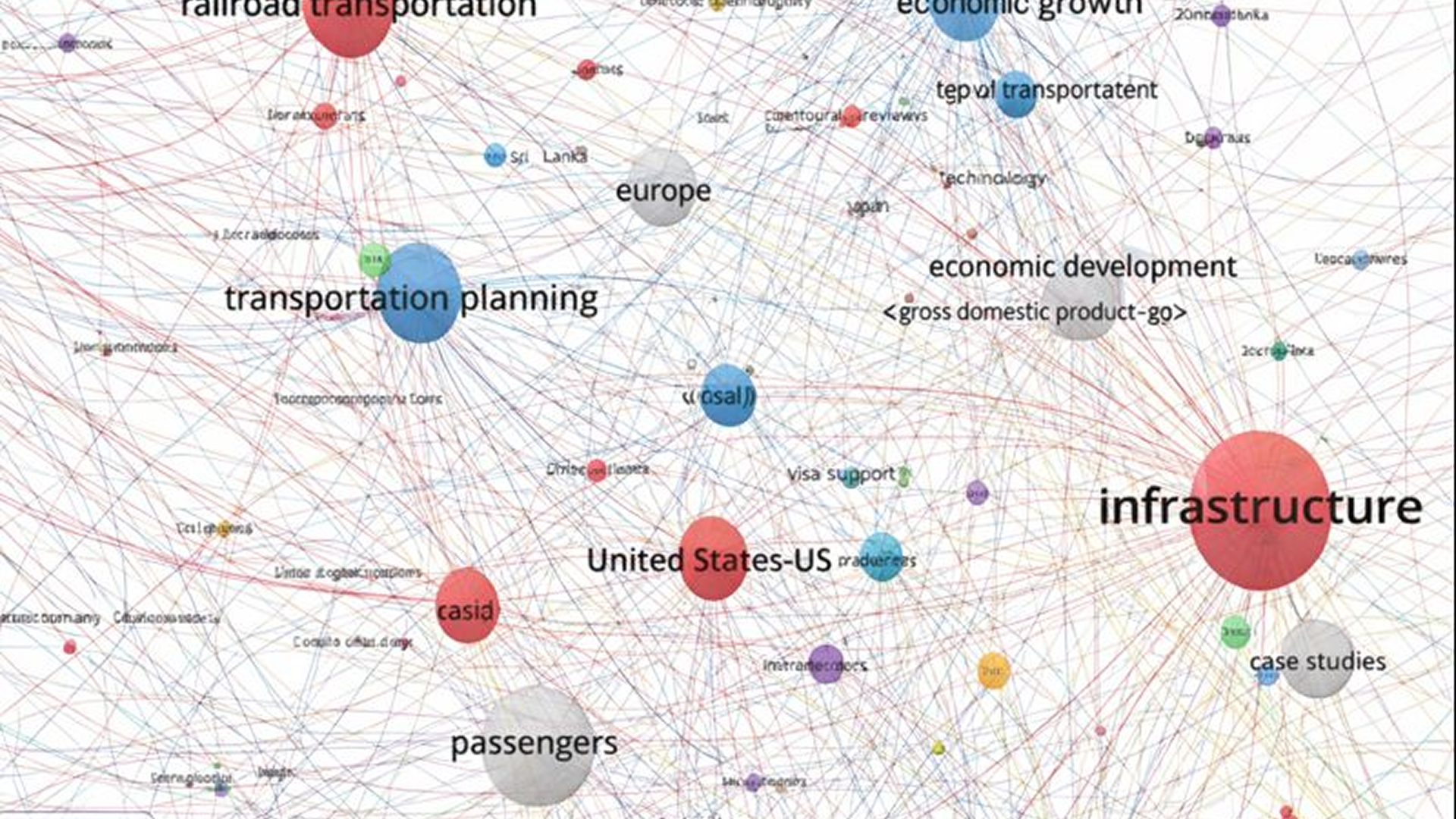

Balancing Sparse BM25 and Dense Vector Retrieval for AI SEO

Modern AI search engines utilize Hybrid Search, a retrieval strategy that combines two distinct algorithms to ensure accuracy. To rank in AI Overviews, you must optimize for both simultaneously.

1. Sparse Retrieval (BM25) optimization

BM25 (Best Match 25) is the evolution of TF-IDF. It relies on exact keyword matching and term frequency. To optimize for this, do not abandon precise technical terminology. If users search for “API latency,” using vague terms like “system slowness” will degrade your BM25 score.

2. Dense Retrieval (Vector) optimization

Dense Retrieval maps text to a high-dimensional vector space to identify semantic similarity, even without keyword matches. To optimize for this, write conceptually rich content that answers the intent of the query, not just the string of words.

Fixing Tokenization Errors for Brand Names in LLMs

Large Language Models (LLMs) do not read words; they read tokens (sub-word units). Standard tokenizers, such as OpenAI’s cl100k_base, are trained on public datasets. While common words like “Apple” are single tokens, unique brand names often break into nonsensical syllables.

The Problem: A niche brand name like “Xylophia” might be tokenized as [Xy, lo, ph, ia]. To the model, these are four unrelated fragments.

The Solution: Semantic Association Training You must manually build the association in your text to fix the vector embedding. Do not simply write “Xylophia increases ROI.” Instead, use a defining appositive:

“Xylophia, an automated revenue intelligence platform, increases ROI.”

By placing the unknown tokens [Xy, lo, ph, ia] immediately next to high-value, known tokens (“revenue intelligence platform”), you influence the vector embedding, effectively “teaching” the model synonymy in real-time.

Reducing Vector Distance with Semantic H2 Anchors

In a vector database, relevance is calculated by distance, often using Cosine Similarity. The goal is to minimize the mathematical distance between the user’s query vector and your content’s chunk vector.

Headings (H2, H3) act as “anchors” for these vectors. When a document is chunked, the header is often included in the metadata to maintain context.

- Weak Header: “Our Solution” (High vector distance from technical queries).

- Optimized Header: “Resolving API Rate Limiting with Exponential Backoff” (Low vector distance from the query “how to fix api rate limiting”).

Actionable Advice: Treat your H2 tags as context injectors. They must explicitly describe the content of the chunk to ensure the vector points to the correct location in the semantic space.

Embedding Multi-Modal Content for CLIP Model Retrieval

The future of search is multi-modal. Models like CLIP (Contrastive Language-Image Pre-training) map images and text into the same vector space. This allows an AI to “retrieve” an image based on a text query, even without alt-text, provided the visual data is clear.

The “Context Wrapper” Technique

To ensure your visual data is retrievable, you must wrap the image in relevant text. Do not rely solely on Alt Text, which acts only as a fallback for Sparse retrieval.

Example of Context Wrapping:

As shown in the chart below, the Q4 Growth Trend indicates a 40% increase in retrieval accuracy when using Hybrid Search compared to Sparse-only methods. [Insert Chart Here] This data confirms that combining BM25 with Vector Embeddings yields superior results.

By explicitly describing the insight before and after the image, you ensure that the image’s vector embedding is tightly coupled with the concepts of “Growth Trend” and “Retrieval Accuracy.”

Conclusion

Optimizing for AI is an engineering challenge. It requires a deep understanding of the pipeline: Input -> Tokenization -> Chunking -> Embedding -> Retrieval. By formatting for splitters and anchoring vectors with strong headers, you ensure your content is machine-readable.

Frequently Asked Questions

1.What type of database is used for embedding in RAG?

RAG pipelines use Vector Databases (e.g., Pinecone, Weaviate, Milvus). Unlike traditional SQL databases that store data in rows and columns for exact matching, vector databases store high-dimensional vectors (mathematical representations). This allows them to calculate “semantic distance” and find content based on meaning rather than just matching keywords.

2.What is the difference between RAG and Vectorization?

The difference is Part vs. Whole.

- Vectorization is just the process of converting text into numbers (embeddings) so a machine can read it.

- RAG (Retrieval-Augmented Generation) is the entire system that uses those numbers to search your data and generate an answer.

Think of it this way: Vectorization is like writing an index card for a book; RAG is the librarian who uses that card to find the book and read the answer to you.