Multi-Modal RAG: The Future of Visual Content Strategy Beyond Text

For years, marketing teams have optimized text for search engines while their visual assets have relied on basic alt text. Multi-Modal RAG changes this paradigm, allowing AI to directly “see” and interpret the data locked inside your charts and infographics. This guide explores how to turn your visual library from mere decoration into a retrievable database, so your brand stays discoverable in the era of AI search.

The Invisible Half of Your Content Library

For the past decade, marketing directors have honed precise operations for text optimization. We have SEO teams, keyword strategies, and editorial calendars designed to ensure Google understands every paragraph we publish.

But what about your visual assets?

Think about the investment your organization makes in infographics, complex pricing tables, quarterly report charts, and technical diagrams. Today, these high-value assets are largely opaque to search engines. We rely on a crutch, alt text, to describe a complex visual in a single sentence.

If your alt-text says “Chart showing Q3 growth,” that is all the search engine knows. The actual data points, the trend lines, and the nuances locked within those pixels are lost.

The paradigm is shifting. With the arrival of Multi-Modal RAG (Retrieval-Augmented Generation), AI is gaining the ability to “see” and interpret the raw pixels of your visual content. This is not just an accessibility update; it is a fundamental change in how your brand’s information is indexed and retrieved by the next generation of AI search tools.

For marketers, it’s worth understanding how tokenization affects multi-modal embeddings. Vision models break each image into small units (tokens) before embedding it, and how your visuals are tokenized directly affects retrieval accuracy and how well your assets align semantically with text queries.
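As a rough illustration of what “tokenizing” an image means: Vision Transformer-style encoders, which CLIP and SigLIP variants use, first resize the image to a fixed input resolution and then cut it into square patches, each of which becomes one token. The sketch below assumes square patches and an already-resized input; the two configurations shown are common CLIP checkpoints (ViT-B/32 at 224 px, ViT-L/14 at 336 px), not a description of any particular search product’s pipeline.

```python
def patch_token_count(width: int, height: int, patch_size: int) -> int:
    """Number of patch tokens a ViT-style encoder produces for one image.

    Assumes the image was already resized to the model's input resolution,
    drops any partial patch at the edge (integer division), and excludes
    special tokens such as the class token.
    """
    return (width // patch_size) * (height // patch_size)


# Two common CLIP configurations: (input side in pixels, patch size).
configs = {
    "ViT-B/32 @ 224px": (224, 32),
    "ViT-L/14 @ 336px": (336, 14),
}

for name, (side, patch) in configs.items():
    tokens = patch_token_count(side, side, patch)
    print(f"{name}: {tokens} patch tokens")
```

This prints 49 and 576 tokens respectively. However large the original file, the model summarizes it with that fixed budget of patches, which is why small text and fine lines are so easily lost.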

Here is the strategic imperative for unlocking the data currently trapped in your images.

Beyond Alt-Text: How AI "Sees" Today

To understand the opportunity, we must understand the technological leap.

Until recently, computers processed images primarily through metadata (file names, surrounding text, and alt-tags). They didn’t understand the image; they understood the label we put on the image.

Enter CLIP and Direct Pixel Embedding

Newer AI models used in Multi-Modal systems (like OpenAI’s CLIP or Google’s Gemini) operate differently. They don’t just read the label; they look at the picture.

These models analyze the raw pixels and convert the visual information into a mathematical “fingerprint” (a vector embedding). They are trained on hundreds of millions to billions of image-text pairs (CLIP alone used roughly 400 million), learning to associate visual patterns with concepts.
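To make the “fingerprint” idea concrete: once an image and a text query are embedded into the same vector space, retrieval comes down to measuring how close the two vectors are, most commonly with cosine similarity. The vectors below are invented toy numbers, not outputs of CLIP or any real model, and real embeddings have hundreds of dimensions rather than four.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


# Toy 4-dimensional "fingerprints", invented purely for illustration.
image_vectors = {
    "pricing_table.png": [0.9, 0.1, 0.0, 0.2],
    "team_photo.jpg": [0.1, 0.8, 0.3, 0.0],
}
query_vector = [0.8, 0.2, 0.1, 0.1]  # pretend embedding of "compare plan prices"

# Rank images by how closely their vectors point in the query's direction.
ranked = sorted(
    image_vectors.items(),
    key=lambda item: cosine_similarity(query_vector, item[1]),
    reverse=True,
)
for name, vec in ranked:
    print(f"{name}: {cosine_similarity(query_vector, vec):.3f}")
```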

When an AI looks at your pricing page infographic, it doesn’t need alt-text to know it’s a pricing table. It recognizes the structure of columns, rows, currency symbols, and checkmarks. It “embeds” that visual information directly.

The strategic implication: Your visual assets are no longer just decoration to break up walls of text. They are becoming retrievable databases of information.

To maximize retrieval, RAG pipelines should combine dense signals (vector embeddings of images and text) with sparse signals (keyword matches on captions, headings, and surrounding text), so that both visual and textual cues contribute to relevance scoring.
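One common way to combine dense and sparse signals is Reciprocal Rank Fusion (RRF), which merges ranked lists instead of raw scores, so the two retrievers’ scores never need to be normalized against each other. The sketch below is a generic RRF implementation, not a specific vendor’s API; the file names and result lists are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(
    rankings: list[list[str]], k: int = 60
) -> list[tuple[str, float]]:
    """Merge several ranked lists of document IDs with Reciprocal Rank Fusion.

    Each document earns 1 / (k + rank) from every list it appears in
    (rank starts at 1); k=60 is a commonly used default.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical results for the query "Q3 revenue growth".
dense_hits = ["q3_chart.png", "roadmap.png", "q2_chart.png"]  # image-embedding similarity
sparse_hits = ["q3_chart.png", "q3_report_header", "q2_chart.png"]  # keyword match on headings

for doc_id, score in reciprocal_rank_fusion([dense_hits, sparse_hits]):
    print(f"{doc_id}: {score:.4f}")
```

Assets that both retrievers agree on rise to the top. Weighted blending of normalized scores is a common alternative; RRF is shown here only because it is simple and needs no tuning beyond `k`.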

The Challenge: Optimizing "Information Density"

If AI can see images now, why do we need a strategy? Can’t we just upload our charts and be done with it?

No. Because human eyes and AI “eyes” prioritize different things.

Marketing teams often design visuals for aesthetics: soft colors, artistic backgrounds, and subtle fonts. While pleasing to a human, these design choices often degrade the “machine readability” of the asset.

Furthermore, when AI models process images, they typically downscale them to a fixed, fairly small input resolution to save processing power. This is fine for a photo of a product, but catastrophic for data-dense visuals.
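Some quick arithmetic shows how severe this can be. Assume a model whose preprocessor shrinks every image to fit a fixed square input (224 pixels per side is the default for several CLIP checkpoints; other models use larger inputs such as 336 or 384). The hypothetical helper below estimates how tall a text label ends up after that resize.

```python
def effective_label_height(
    img_width: int, img_height: int, label_px: float, model_input: int = 224
) -> float:
    """Height in pixels of a text label after the whole image is scaled down
    (aspect ratio preserved) to fit inside a square model input.

    Assumes fit-to-square resizing and never upscales. Real preprocessors vary;
    some resize the short side and center-crop, which can cut off the edges.
    """
    scale = min(model_input / img_width, model_input / img_height, 1.0)
    return label_px * scale


# A hypothetical 2400 x 1600 architecture diagram with 14 px labels.
for size in (224, 336, 448):
    h = effective_label_height(2400, 1600, 14, model_input=size)
    print(f"input {size}px -> label about {h:.1f}px tall")
```

Under these assumptions the 14-pixel labels shrink to roughly 1 to 3 pixels, far too few to form legible characters.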

The “Blur” Problem in Charts

Imagine a complex B2B architecture diagram. It has fine lines, small text labels, and directional arrows. If an AI model downscales that image to process it, those fine details blur together.

When a potential customer asks an AI, “How does [Your Brand’s] architecture handle data redundancy?”, the AI might retrieve your diagram, but if the pixels are blurry to the model, it cannot read the answer. The retrieval fails, and your competitor whose diagram was optimized for machine vision gets the citation.

Strategic Imperatives for the Marketing Director

To future-proof your content strategy for the Multi-Modal era, creative briefs must evolve. We need to start designing for two audiences: the human customer and the AI retriever.

Here are three strategic shifts to implement:

1. Prioritize “Visual Contrast” Over Aesthetic Subtlety

Your creative team needs to understand that low-contrast text on complex backgrounds is hard for many AI models to read.

Directive: Ensure charts use high-contrast color palettes. Axis labels and data points must be crystal clear, sans-serif, and distinct from the background. If an AI crushes the image size down, the data must survive the compression.
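There is no standard “machine readability” score, but the WCAG contrast ratio, designed for human accessibility, gives a creative brief a measurable target. The sketch below implements the WCAG 2.x relative-luminance and contrast formulas. Treating that ratio as a proxy for AI legibility is our assumption, not a documented requirement of any model.

```python
def _linear_channel(c8: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = c8 / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Perceived brightness of a color, from 0.0 (black) to 1.0 (white)."""
    r, g, b = (_linear_channel(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """WCAG contrast ratio, from 1:1 (identical colors) to 21:1 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


white = (255, 255, 255)
swatches = {
    "black": (0, 0, 0),
    "mid gray #767676": (118, 118, 118),
    "light gray #AAAAAA": (170, 170, 170),
}
for name, color in swatches.items():
    ratio = contrast_ratio(color, white)
    verdict = "passes" if ratio >= 4.5 else "fails"
    print(f"{name} on white: {ratio:.2f}:1 ({verdict} the 4.5:1 AA threshold)")
```

The 4.5:1 figure is WCAG’s AA threshold for normal-size text; a design team could adopt it (or the stricter 7:1 AAA level) as a floor for axis labels and data points.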

2. Rethink Infographic Architecture for “Tiling”

Extremely tall or wide infographics are difficult for AI models to ingest in one bite. They often get resized aggressively, losing all detail.

Directive: Move away from massive, single-file mega-infographics. Instruct design teams to create modular visuals that can be treated as individual “tiles.” This allows RAG systems to ingest the infographic in high-resolution chunks, ensuring every section is readable.
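On the ingestion side, a RAG pipeline can honor modular design by cutting large visuals into overlapping tiles and embedding each one separately. The helper below is a hypothetical sketch; the 512-pixel tile and 64-pixel overlap are illustrative values, chosen to sit close to typical encoder input sizes so each tile is downscaled only slightly.

```python
def tile_boxes(
    width: int, height: int, tile: int = 512, overlap: int = 64
) -> list[tuple[int, int, int, int]]:
    """Split an image into overlapping tiles.

    Returns (left, top, right, bottom) boxes in pixel coordinates. Overlap keeps
    a label that straddles a tile boundary whole in at least one tile, as long
    as the label is smaller than the overlap.
    """
    if overlap >= tile:
        raise ValueError("overlap must be smaller than tile size")
    step = tile - overlap

    def starts(length: int) -> list[int]:
        if length <= tile:
            return [0]
        positions = list(range(0, length - tile, step))
        positions.append(length - tile)  # last tile sits flush with the edge
        return positions

    return [
        (left, top, min(left + tile, width), min(top + tile, height))
        for top in starts(height)
        for left in starts(width)
    ]


boxes = tile_boxes(1200, 6000)  # a hypothetical tall infographic
print(f"{len(boxes)} tiles; first three: {boxes[:3]}")
```

The boxes follow the (left, upper, right, lower) convention used by Pillow’s `Image.crop`, so each one can be cropped out and embedded on its own, ideally with a reference back to the parent infographic stored as metadata.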

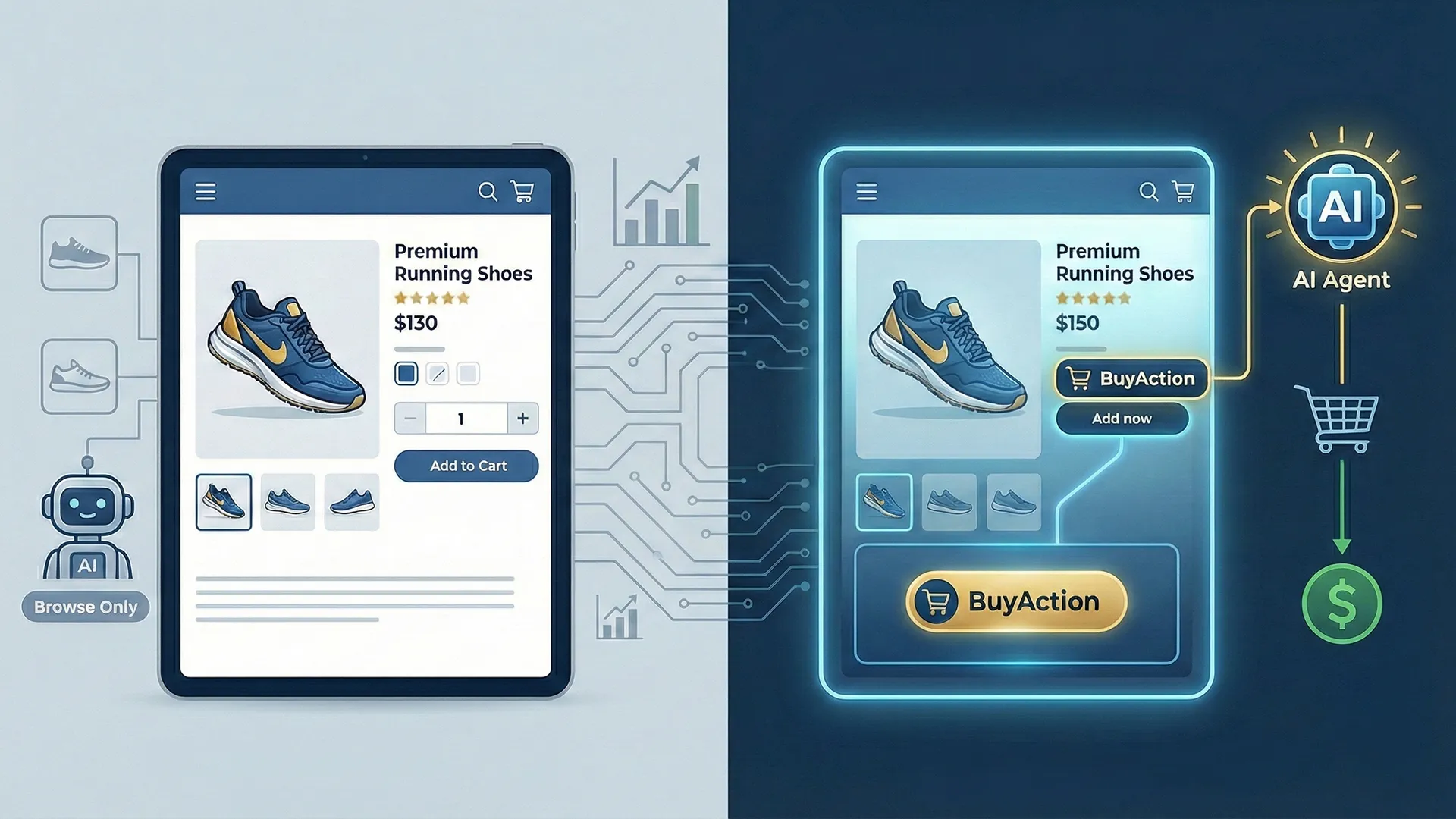

3. The Hybrid “Pixels + Text” Approach

While models are getting better at reading pixels, text remains the ultimate anchor. The goal isn’t to abandon text, but to ensure the visual and the text are perfectly aligned.

Directive: Don’t hide critical data only in the image. If your chart shows a 50% efficiency gain, echo that statistic in the H2 or other heading immediately preceding the image. This creates a “dual-lock” for the AI: the heading gives keyword search an exact match and, if it is embedded with the image, can bring the asset closer in vector space to queries that mention the figure, while the text anchor confirms the visual data and increases the confidence of retrieval.
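In practice, the “dual-lock” means storing each image alongside the text that states the same data, and checking that the headline numbers actually appear in that text. The `VisualAsset` record, its fields, and the example values below are hypothetical, a sketch of the idea rather than any CMS or vector database schema.

```python
from dataclasses import dataclass


@dataclass
class VisualAsset:
    """Hypothetical record pairing an image with the text that anchors it."""

    image_path: str
    heading: str  # the H2 or heading immediately above the image
    alt_text: str
    key_stats: list[str]  # the figures the chart is meant to communicate

    def missing_from_heading(self) -> list[str]:
        """Key statistics that currently live only in the image."""
        return [s for s in self.key_stats if s not in self.heading]

    def index_text(self) -> str:
        """Text to store next to the image vector for keyword (sparse) search."""
        return " | ".join([self.heading, self.alt_text, *self.key_stats])


asset = VisualAsset(
    image_path="efficiency_chart.png",
    heading="Automation Cut Processing Time by 50%",
    alt_text="Bar chart comparing processing time before and after automation",
    key_stats=["50%", "3.2 hours"],  # illustrative values only
)
print(asset.missing_from_heading())  # ['3.2 hours']
print(asset.index_text())
```

A check like `missing_from_heading` could run as part of content review, flagging charts whose key numbers never appear in the surrounding text.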

Conclusion: Visuals as Data Assets

The marketing organization of the near future will realize that their library of diagrams, charts, and infographics represents a massive, untapped proprietary dataset.

By shifting from a mindset of “image decoration” to “visual data optimization,” you ensure that when future customers ask complex questions to an AI, your brand has the answers encoded right into the pixels.

Frequently Asked Questions

- What is Multi-Modal RAG?

Multi-Modal RAG (Retrieval-Augmented Generation) is an advanced AI framework that allows a system to retrieve and understand data from multiple “modes” of information, specifically text and images (and increasingly, audio or video) simultaneously.

In a traditional RAG system, if you ask a question, the AI can only look up text documents to find the answer. It is effectively “blind” to any charts or diagrams you have.

In a Multi-Modal RAG system, the AI can “see.” It converts your images into mathematical vectors (just like it does with text). This means if a user asks, “What was the sales trend in Q3?”, the system can retrieve the actual chart image showing the trend, analyze the pixels, and generate an answer based on the visual data.

- What are the key steps in building a Multi-Modal RAG system for Visual Question Answering (VQA)?

Building a system that can answer questions based on images requires four distinct stages:

Step 1: The Vision Encoder (The “Eyes”): You need an embedding model capable of processing images, such as OpenAI’s CLIP, Google’s SigLIP, or Nomic Embed Vision. This model scans your images and converts them into vector embeddings.

Step 2: The Vector Database (The “Memory”): You store these image vectors in a database like Pinecone, Milvus, or Weaviate. Crucially, you must store the image vector alongside its metadata (the “Hybrid Approach” we discussed).

Step 3: Retrieval (The Search): When a user asks a question, the system embeds it with the same model’s text encoder and searches the database for the image vectors mathematically closest to that query vector (typically by cosine similarity).

Step 4: Generation (The Answer): Once the relevant image is found, it is passed to a vision-language model (like GPT-4o or Gemini Pro Vision). This model looks at the retrieved image and the user’s text question together to generate the final answer.
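The four steps can be wired together in a few dozen lines. The sketch below replaces every external service with a hypothetical stand-in: `InMemoryVectorStore` plays the role of Pinecone, Milvus, or Weaviate, `embed_text` stands in for the encoder’s text side, and `generate_answer` stands in for the vision-language model call. None of these are real library APIs, and all vectors are toy numbers.

```python
import math
from dataclasses import dataclass, field


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


@dataclass
class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database (Step 2)."""

    records: list[tuple[str, list[float], dict]] = field(default_factory=list)

    def add(self, doc_id: str, vector: list[float], metadata: dict) -> None:
        self.records.append((doc_id, vector, metadata))

    def search(self, query: list[float], k: int = 1) -> list[tuple[str, float, dict]]:
        scored = [(doc_id, cosine(query, vec), meta) for doc_id, vec, meta in self.records]
        return sorted(scored, key=lambda r: r[1], reverse=True)[:k]


def embed_text(question: str) -> list[float]:
    """Hypothetical stub for the encoder's text side (Step 1).

    A real system would call the same model that embedded the images.
    """
    return [1.0, 0.1, 0.0] if "q3" in question.lower() else [0.0, 0.2, 1.0]


def generate_answer(question: str, image_path: str, metadata: dict) -> str:
    """Hypothetical stub for the vision-language model call (Step 4)."""
    return f"Answer to {question!r} grounded in {image_path} ({metadata['heading']})"


store = InMemoryVectorStore()
# Steps 1 and 2: image vectors (toy numbers) stored with their anchoring text.
store.add("q3_sales.png", [0.9, 0.2, 0.1], {"heading": "Q3 Sales Trend"})
store.add("org_chart.png", [0.1, 0.1, 0.9], {"heading": "Our Leadership Team"})

question = "What was the sales trend in Q3?"
# Step 3: embed the question and retrieve the closest image.
doc_id, score, meta = store.search(embed_text(question), k=1)[0]
# Step 4: hand the retrieved image and the question to the generator.
print(generate_answer(question, doc_id, meta))
```

Swapping the stubs for a real encoder, vector database client, and model API changes the plumbing but not the shape of the flow.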